OK, I'm new to Proxmox, Ceph, and Linux!

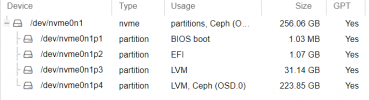

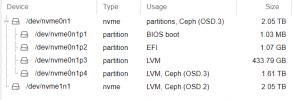

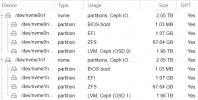

I have three servers with two SSDs in each. Proxmox and Ceph are installed. On each server, Proxmox has the entire first SSD and Ceph has the second.

My question: can I shrink the Proxmox partition on the first SSD and give the remaining space to Ceph? Or, failing that, can I reinstall Proxmox so that it uses only a small part of the first SSD, and again give the rest to Ceph?

Thanks!
