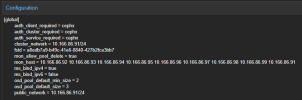

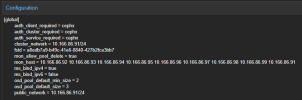

Hello, I am new to Proxmox and need help figuring out how to move my Ceph traffic to a different network.

I want my Ceph traffic to use Bond1 and Bond2, since each of those bonds is 4x25 Gb.

Bond1 and Bond2 share a switch that is separated from everything else.

I tried just changing `cluster_network`, but that didn't work.
