Hi,

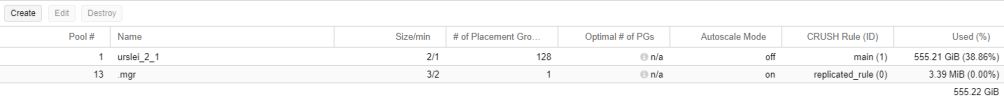

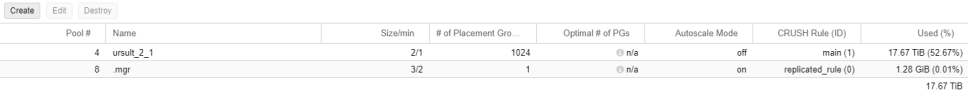

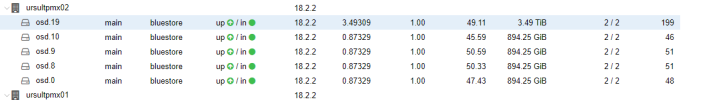

I am not happy with the current Ceph balancer, because the number of PGs per OSD varies too much.

I would like to try upmap-read, but it requires all clients to be reef, and mine are reported as luminous.

Why does Proxmox use luminous clients with a reef Ceph cluster? Can I change them to reef and activate the upmap-read balancer?

Code:

ceph version

ceph version 18.2.2 (e9fe820e7fffd1b7cde143a9f77653b73fcec748) reef (stable)

Code:

ceph features

{
    "mon": [
        {
            "features": "0x3f01cfbffffdffff",
            "release": "luminous",
            "num": 3
        }
    ],
    "osd": [
        {
            "features": "0x3f01cfbffffdffff",
            "release": "luminous",
            "num": 30
        }
    ],
    "client": [
        {
            "features": "0x2f018fb87aa4aafe",
            "release": "luminous",
            "num": 5
        },
        {
            "features": "0x3f01cfbffffdffff",
            "release": "luminous",
            "num": 5
        }
    ],
    "mgr": [
        {
            "features": "0x3f01cfbffffdffff",
            "release": "luminous",
            "num": 3
        }
    ]
}
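
To make the output easier to read, here is a rough Python sketch that groups the entries by feature mask and lists the client groups whose mask differs from the daemons. The JSON is a copy of the output in this post. The function names `summarize_features` and `masks_differing_from_daemons` are my own and not part of Ceph. Note that the mons, OSDs and mgrs on 18.2.2 are also listed as luminous, so the `release` field here does not seem to match the installed version.

```python
import json
from collections import defaultdict

# Copy of the `ceph features` output from this post.
FEATURES_JSON = """
{
  "mon": [{"features": "0x3f01cfbffffdffff", "release": "luminous", "num": 3}],
  "osd": [{"features": "0x3f01cfbffffdffff", "release": "luminous", "num": 30}],
  "client": [
    {"features": "0x2f018fb87aa4aafe", "release": "luminous", "num": 5},
    {"features": "0x3f01cfbffffdffff", "release": "luminous", "num": 5}
  ],
  "mgr": [{"features": "0x3f01cfbffffdffff", "release": "luminous", "num": 3}]
}
"""


def summarize_features(raw):
    """Return {entity_type: {(feature_mask, release): count}}."""
    data = json.loads(raw)
    summary = defaultdict(dict)
    for entity_type, groups in data.items():
        for group in groups:
            key = (group["features"], group["release"])
            summary[entity_type][key] = summary[entity_type].get(key, 0) + group["num"]
    return dict(summary)


def masks_differing_from_daemons(summary):
    """Return {feature_mask: count} for clients whose mask matches no mon/osd/mgr."""
    daemon_masks = {
        mask
        for entity_type in ("mon", "osd", "mgr")
        for (mask, _release) in summary.get(entity_type, {})
    }
    return {
        mask: count
        for (mask, _release), count in summary.get("client", {}).items()
        if mask not in daemon_masks
    }


if __name__ == "__main__":
    summary = summarize_features(FEATURES_JSON)
    for entity_type, groups in summary.items():
        for (mask, release), count in groups.items():
            print(f"{entity_type:7s} {mask} {release} x{count}")
    print("client masks not shared with daemons:", masks_differing_from_daemons(summary))
```

On a live cluster the string could come from the `ceph features` command instead of the pasted copy. Five of the ten clients share the daemons' mask, and the other five report a different one.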