Hello,

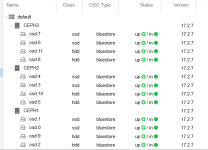

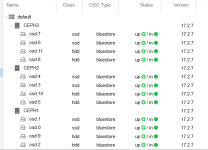

I am using Ceph 17.2.7 with 3 hosts (CEPH01, CEPH02, CEPH03) and a single pool (named rpool in my example).

This pool uses the default CRUSH rule. All my disks (12 in total) were SATA HDDs.

Now I want to have 2 new pools:

- SATAPOOL (for slow storage)

- SSDPOOL (for fast storage)

1/ I recently replaced 6 of the HDDs with 6 SSDs (2 per host).

2/ I have created 2 new CRUSH rules by replicating the default one:

"rule_sata"

"rule_ssd"

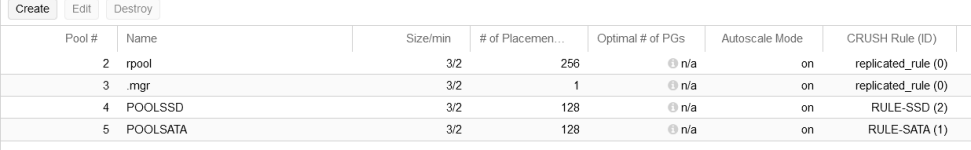

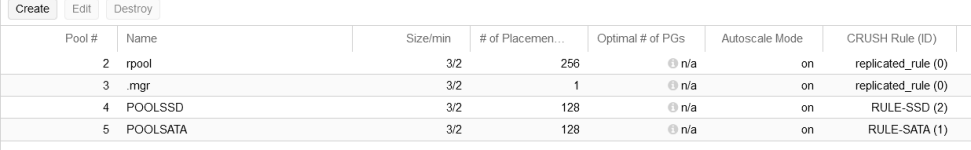
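For reference, here is a minimal sketch of how rules like these can be tied to a device class from the CLI. It assumes the CRUSH root is named `default`, the failure domain is `host`, and the OSDs carry the auto-detected classes `hdd` and `ssd`; none of that is confirmed by the screenshots, and since `rule_sata` and `rule_ssd` already exist here, the create commands would only apply to a cluster where they do not. The `run` helper is a hypothetical wrapper that just prints each command unless `APPLY=1` is set.

```shell
#!/bin/sh
# Dry-run helper (hypothetical): print the command unless APPLY=1 is set.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# Assumptions: root "default", failure domain "host",
# device classes "hdd" and "ssd".
crush_rule_cmds() {
    # List the device classes Ceph has detected on the OSDs.
    run ceph osd crush class ls
    # One replicated rule per device class.
    run ceph osd crush rule create-replicated rule_sata default host hdd
    run ceph osd crush rule create-replicated rule_ssd default host ssd
    # Inspect the rules and the per-class shadow hierarchy.
    run ceph osd crush rule dump rule_sata
    run ceph osd crush rule dump rule_ssd
    run ceph osd crush tree --show-shadow
}

crush_rule_cmds
```

If a rule was copied from the default one without a device class, it would still place data on every OSD, HDD and SSD alike, so checking the `rule dump` output is worth doing before moving any data.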

3/ I have created 2 new pools:

"POOLSATA", which uses rule_sata

"POOLSSD", which uses rule_ssd
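As a rough sketch, the pool-to-rule binding can be checked (or, on a cluster where the pools do not exist yet, created) from the CLI as follows. The PG count of 32 and the `rbd` application tag are placeholder assumptions for illustration, not values taken from my cluster. As before, `run` is a hypothetical helper that only prints the commands unless `APPLY=1` is set.

```shell
#!/bin/sh
# Dry-run helper (hypothetical): print the command unless APPLY=1 is set.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

PG_NUM=32   # placeholder assumption; size it for the real cluster

pool_cmds() {
    for pair in "POOLSATA rule_sata" "POOLSSD rule_ssd"; do
        # Split "pool rule" into positional parameters $1 and $2.
        set -- $pair
        # Create a replicated pool bound to the given CRUSH rule.
        run ceph osd pool create "$1" "$PG_NUM" "$PG_NUM" replicated "$2"
        # Tag the pool for RBD use (assumed, since it will hold VM disks).
        run ceph osd pool application enable "$1" rbd
        # Confirm which CRUSH rule the pool actually uses.
        run ceph osd pool get "$1" crush_rule
    done
}

pool_cmds
```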

4/ At this point, what is the best practice for distributing all my VMs across the 2 different storage pools?

- Move all the VM disks from my original « RPOOL » to the new « POOLSATA »

(How does Ceph recalculate the pool sizes? The « rpool » size will decrease and the satapool size will increase during the VM disk migration.)

or

- Change the RPOOL CRUSH rule from « default_crush_rule » to « rule_sata »?

That way, all my data would be stored on the HDDs. Then I would migrate some selected VMs to the SSD pool.
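To make the second option concrete, here is a sketch of what it would look like on the CLI, assuming the existing pool is literally named `rpool`. Changing a pool's CRUSH rule makes Ceph move the affected placement groups onto OSDs that match the new rule, so the status and usage commands are there to follow the rebalancing. `ceph df` also reports per-pool usage and `MAX AVAIL`, which is where the pool sizes from the first option would show up. The `run` helper is hypothetical and only prints the commands unless `APPLY=1` is set.

```shell
#!/bin/sh
# Dry-run helper (hypothetical): print the command unless APPLY=1 is set.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

switch_rule_cmds() {
    # Show the rule currently used by the existing pool.
    run ceph osd pool get rpool crush_rule
    # Point the existing pool at the HDD-only rule; this triggers data movement.
    run ceph osd pool set rpool crush_rule rule_sata
    # Follow recovery/backfill progress.
    run ceph -s
    # Per-pool usage and MAX AVAIL, computed from the OSDs each rule can use.
    run ceph df
}

switch_rule_cmds
```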

Thanks for your replies. I am posting my current Ceph configuration here:
