Hi all,

I am currently testing Ceph 19.2 on a 3/2 test cluster, which has worked properly so far. Now I wanted to try CephFS, but it fails because the file system cannot be mounted. I followed the documentation: https://pve.proxmox.com/pve-docs/chapter-pveceph.html#pveceph_fs

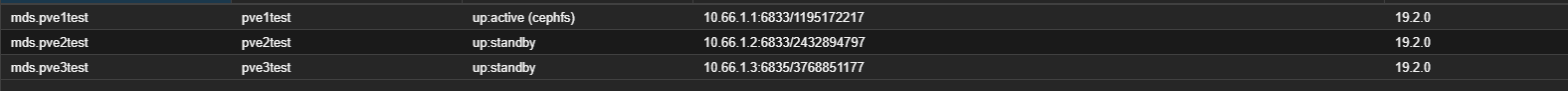

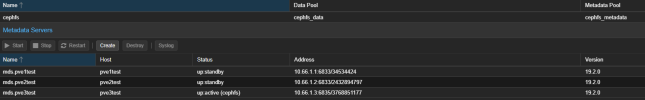

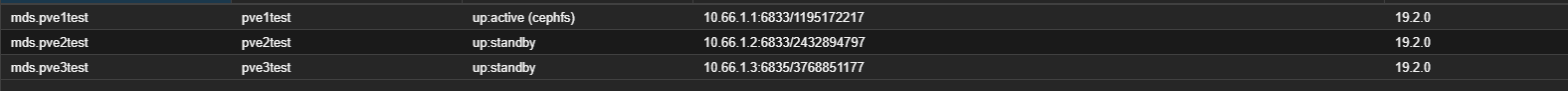

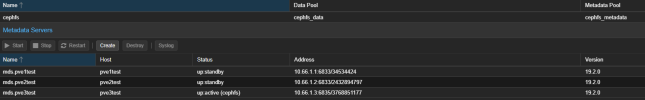

The metadata servers (MDS) are created without errors and are in standby mode.

When I then create the CephFS, the task aborts every time with the error message “adding storage for CephFS ‘cephfs’ failed”.

The error message says that the MDS does not switch to active status, but that is not true. As a result, CephFS is not mounted. Can someone help me with this or explain how to mount CephFS manually?

Code:

creating data pool 'cephfs_data'...

pool cephfs_data: applying application = cephfs

skipping 'pg_num', did not change

creating metadata pool 'cephfs_metadata'...

skipping 'pg_num', did not change

configuring new CephFS 'cephfs'

Successfully create CephFS 'cephfs'

Adding 'cephfs' to storage configuration...

Waiting for an MDS to become active

Waiting for an MDS to become active

TASK ERROR: adding storage for CephFS 'cephfs' failed, check log and add manually! create storage failed: mount error: Job failed. See "journalctl -xe" for details.

Code:

Nov 08 08:06:07 pve1test login[1133173]: ROOT LOGIN on '/dev/pts/0'

Nov 08 08:06:07 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:07 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 missing required protocol features

Nov 08 08:06:08 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:08 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 missing required protocol features

Nov 08 08:06:08 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:08 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 missing required protocol features

Nov 08 08:06:09 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:09 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 missing required protocol features

Nov 08 08:06:10 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:10 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 missing required protocol features

Nov 08 08:06:11 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:11 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 missing required protocol features

Nov 08 08:06:12 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:12 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 missing required protocol features

Nov 08 08:06:12 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:12 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 missing required protocol features

Nov 08 08:06:13 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:13 pve1test kernel: libceph: mon1 (1)10.66.1.2:6789 missing required protocol features

Nov 08 08:06:14 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:14 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 missing required protocol features

Nov 08 08:06:15 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe, missing 80000000

Nov 08 08:06:15 pve1test kernel: libceph: mon2 (1)10.66.1.3:6789 missing required protocol features

Nov 08 08:06:15 pve1test kernel: ceph: No mds server is up or the cluster is laggy

Nov 08 08:06:15 pve1test mount[1132880]: mount error: no mds (Metadata Server) is up. The cluster might be laggy, or you may not be authorized

Nov 08 08:06:15 pve1test systemd[1]: mnt-pve-CephFS.mount: Mount process exited, code=exited, status=32/n/a

Nov 08 08:06:15 pve1test systemd[1]: mnt-pve-CephFS.mount: Failed with result 'exit-code'.

Nov 08 08:06:15 pve1test systemd[1]: Failed to mount mnt-pve-CephFS.mount - /mnt/pve/CephFS.
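The kernel client (libceph) rejects both monitors with a "feature set mismatch". As a quick sanity check, the two masks in that message can be compared bitwise. The Python sketch below uses a hypothetical helper, `missing_feature_bits`, which is not part of Ceph or Proxmox tooling. It reproduces the logged "missing 80000000": a single feature bit, bit 31, that the monitors require and the client in the running 6.8.12-3-pve kernel does not advertise. It only decodes the numbers and does not say which Ceph feature that bit stands for. Note also that "No mds server is up" only appears after these repeated connection failures. It may therefore be a side effect of the client never completing the handshake rather than evidence about the MDS state.

```python
# Hypothetical helper (not part of Ceph or Proxmox tooling): decode the two
# feature masks from a libceph "feature set mismatch" kernel message.

def missing_feature_bits(client_mask: int, server_mask: int) -> list[int]:
    """Return the bit positions the server requires but the client lacks."""
    missing = server_mask & ~client_mask  # bits set only on the server side
    return [bit for bit in range(64) if (missing >> bit) & 1]


if __name__ == "__main__":
    # Masks copied from the journal line:
    # "feature set mismatch, my 2f018fb87aa4aafe < server's 2f018fb8faa4aafe"
    client = int("2f018fb87aa4aafe", 16)
    server = int("2f018fb8faa4aafe", 16)

    print(f"missing mask: {server & ~client:x}")  # prints "missing mask: 80000000"
    print("missing bits:", missing_feature_bits(client, server))  # prints "missing bits: [31]"
```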

Code:

proxmox-ve: 8.2.0 (running kernel: 6.8.12-3-pve)

pve-manager: 8.2.7 (running version: 8.2.7/3e0176e6bb2ade3b)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.8: 6.8.12-3

proxmox-kernel-6.8.12-3-pve-signed: 6.8.12-3

proxmox-kernel-6.8.12-2-pve-signed: 6.8.12-2

proxmox-kernel-6.8.12-1-pve-signed: 6.8.12-1

proxmox-kernel-6.8.4-2-pve-signed: 6.8.4-2

ceph: 19.2.0-pve2

ceph-fuse: 19.2.0-pve2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx9

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.1

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.4

libpve-access-control: 8.1.4

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.8

libpve-cluster-perl: 8.0.8

libpve-common-perl: 8.2.5

libpve-guest-common-perl: 5.1.4

libpve-http-server-perl: 5.1.2

libpve-network-perl: 0.9.8

libpve-rs-perl: 0.8.10

libpve-storage-perl: 8.2.5

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-4

proxmox-backup-client: 3.2.7-1

proxmox-backup-file-restore: 3.2.7-1

proxmox-firewall: 0.5.0

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.7

proxmox-widget-toolkit: 4.2.4

pve-cluster: 8.0.8

pve-container: 5.2.0

pve-docs: 8.2.3

pve-edk2-firmware: 4.2023.08-4

pve-esxi-import-tools: 0.7.2

pve-firewall: 5.0.7

pve-firmware: 3.14-1

pve-ha-manager: 4.0.5

pve-i18n: 3.2.4

pve-qemu-kvm: 9.0.2-3

pve-xtermjs: 5.3.0-3

qemu-server: 8.2.4

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.6-pve1