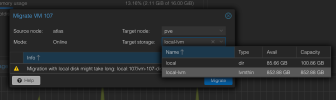

I'm trying to migrate a VM from one node to another on a 2-node cluster.

Google seems to indicate that the error means the `vm-107-disk-0.qcow2` file was deleted, but I don't think that's the case: I can restart the entire host and the VM will start just fine. I seem to get this error on every VM I try to migrate.

Code:

2024-12-29 20:49:59 starting migration of VM 107 to node 'pve' (192.168.0.51)

2024-12-29 20:49:59 starting VM 107 on remote node 'pve'

2024-12-29 20:50:00 [pve] volume 'local:107/vm-107-disk-0.qcow2' does not exist

2024-12-29 20:50:00 ERROR: online migrate failure - remote command failed with exit code 255

2024-12-29 20:50:00 aborting phase 2 - cleanup resources

2024-12-29 20:50:00 migrate_cancel

2024-12-29 20:50:01 ERROR: migration finished with problems (duration 00:00:03)

TASK ERROR: migration problems

Code:

root@atlas:~# qm config 107

agent: 1

boot: order=scsi0;net0

cores: 12

cpu: x86-64-v2-AES

memory: 16384

meta: creation-qemu=9.0.2,ctime=1728358264

name: cb-api

net0: virtio=BC:24:11:5C:E9:90,bridge=vmbr0,firewall=1

numa: 0

onboot: 1

ostype: l26

scsi0: local:107/vm-107-disk-0.qcow2,discard=on,iothread=1,size=128G,ssd=1

scsihw: virtio-scsi-single

smbios1: uuid=771badef-b8bc-4739-a9ba-389390429b3d

sockets: 1

vmgenid: 9524c0e9-7569-41f4-bb99-3394cfc295c8