Hi,

I had a situation where one VM (Windows SBS 2011 Standard) started using close to 80-90% of the CPU and locked up the Proxmox server (the load peaked at 30). At least that is what it looks like; I cannot quite pinpoint the problem. Everything became VERY slow and unresponsive. It was so bad that I could not open a console (noVNC or SPICE) to investigate. No backup was running at that time.

To get the server back to a working condition, I decided to just hard STOP the VM (not a clean shutdown). After that, everything settled down and seems to work fine now. Then I tried to START the VM again, with no luck. This is what I get:

```
Failed to create message: Input/output error

TASK ERROR: start failed: command '/usr/bin/systemd-run --scope --slice qemu --unit 100 -p 'CPUShares=1000' /usr/bin/kvm -id 100 -chardev 'socket,id=qmp,path=/var/run/qemu-server/100.qmp,server,nowait' -mon 'chardev=qmp,mode=control' -vnc unix:/var/run/qemu-server/100.vnc,x509,password -pidfile /var/run/qemu-server/100.pid -daemonize -name WinSBS2011Std -smp '4,sockets=2,cores=2,maxcpus=4' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000' -vga qxl -no-hpet -cpu 'kvm64,hv_spinlocks=0x1fff,hv_vapic,hv_time,hv_relaxed,+lahf_lm,+sep,+kvm_pv_unhalt,+kvm_pv_eoi,-kvm_steal_time,enforce' -m 32768 -k en-us -device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' -device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' -device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' -spice 'tls-port=61002,addr=localhost,tls-ciphers=DES-CBC3-SHA,seamless-migration=on' -device 'virtio-serial,id=spice,bus=pci.0,addr=0x9' -chardev 'spicevmc,id=vdagent,name=vdagent' -device 'virtserialport,chardev=vdagent,name=com.redhat.spice.0' -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:4827fbaece2b' -device 'virtio-scsi-pci,id=scsihw0,bus=pci.0,addr=0x5' -drive 'file=/dev/zvol/storage/vms/vm-100-disk-2,if=none,id=drive-scsi1,format=raw,cache=none,aio=native,detect-zeroes=on' -device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=1,drive=drive-scsi1,id=scsi1' -drive 'file=/dev/zvol/storage/vms/vm-100-disk-1,if=none,id=drive-scsi0,format=raw,cache=none,aio=native,detect-zeroes=on' -device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,bootindex=101' -drive 'if=none,id=drive-ide2,media=cdrom,aio=threads' -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=200' -netdev 'type=tap,id=net0,ifname=tap100i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=XX:XX:XX:XX:XX:XX,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=300' -rtc 'driftfix=slew,base=localtime' -global 'kvm-pit.lost_tick_policy=discard'' failed: exit code 1
```

I tried restarting pve-cluster and pvestatd, and I also unlocked the VM. All of the restart and unlock commands completed without returning an error, but the VM still will not start.
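The TASK ERROR line packs the whole `systemd-run`/`kvm` invocation into one quoted string, which is hard to read. Below is a minimal Python sketch for splitting it into individual arguments with the standard `shlex.split` and listing the `file=` paths of the `-drive` options (here the `/dev/zvol/storage/vms/...` volumes). The helper names `split_task_error` and `drive_files` are hypothetical, not part of Proxmox. The sketch assumes the message has the `command '...' failed` shape shown above and that no option value contains an escaped `,,`.

```python
import shlex


def split_task_error(task_error: str) -> list[str]:
    """Pull the quoted command out of a "start failed: command '...' failed" line
    and split it into arguments (assumes the message shape shown in this post)."""
    prefix = "command '"
    suffix = "' failed"
    start = task_error.index(prefix) + len(prefix)
    # Use the LAST "' failed" in case the command text itself contains that substring.
    end = task_error.rindex(suffix)
    return shlex.split(task_error[start:end])


def drive_files(args: list[str]) -> list[str]:
    """Return the file= value of every -drive option; drives without file=
    (such as an empty CD-ROM) are skipped. Assumes no escaped ',,' in values."""
    files = []
    for flag, value in zip(args, args[1:]):
        if flag == "-drive":
            for opt in value.split(","):
                if opt.startswith("file="):
                    files.append(opt[len("file="):])
    return files


if __name__ == "__main__":
    # Shortened copy of the TASK ERROR line; paste the full line here instead.
    line = (
        "TASK ERROR: start failed: command '/usr/bin/systemd-run --scope --slice qemu "
        "--unit 100 -p 'CPUShares=1000' /usr/bin/kvm -id 100 "
        "-drive 'file=/dev/zvol/storage/vms/vm-100-disk-1,if=none,id=drive-scsi0,format=raw' "
        "-drive 'if=none,id=drive-ide2,media=cdrom,aio=threads'' failed: exit code 1"
    )
    args = split_task_error(line)
    for arg in args:
        print(arg)  # one argument per line
    print(drive_files(args))
```

With the full TASK ERROR line pasted in, `drive_files` should return the two zvol paths (`vm-100-disk-2` and `vm-100-disk-1`) in the order they appear on the command line.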

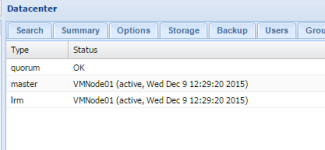

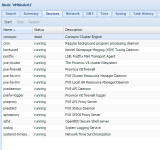

This is the output of `pveversion -v`:

```
root@VMNode01:/# pveversion -v
proxmox-ve: 4.0-22 (running kernel: 4.2.3-2-pve)
pve-manager: 4.0-57 (running version: 4.0-57/cc7c2b53)
pve-kernel-4.2.2-1-pve: 4.2.2-16
pve-kernel-4.2.3-2-pve: 4.2.3-22
lvm2: 2.02.116-pve1
corosync-pve: 2.3.5-1
libqb0: 0.17.2-1
pve-cluster: 4.0-24
qemu-server: 4.0-35
pve-firmware: 1.1-7
libpve-common-perl: 4.0-36
libpve-access-control: 4.0-9
libpve-storage-perl: 4.0-29
pve-libspice-server1: 0.12.5-2
vncterm: 1.2-1
pve-qemu-kvm: 2.4-12
pve-container: 1.0-21
pve-firewall: 2.0-13
pve-ha-manager: 1.0-13
ksm-control-daemon: 1.2-1
glusterfs-client: 3.5.2-2+deb8u1
lxc-pve: 1.1.4-3
lxcfs: 0.10-pve2
cgmanager: 0.39-pve1
criu: 1.6.0-1
zfsutils: 0.6.5-pve6~jessie
```

I have not restarted the node. However, the server is in production, so I would have to wait until I can restart it. I am hoping to resolve the issue without a restart.

If you guys need any other info, please let me know.

THANK YOU!!!

Last edited: