Hi guys,

Been pulling my hair out over a few problem VMs.

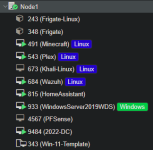

I mounted 2 iSCSI drives for the VMs but stupidly detached and wiped the drives before destroying the VMs. Now I cannot destroy them, because Proxmox cannot see the conf files it needs to destroy the VMs.

Hope that makes sense to someone.