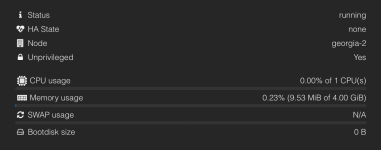

Hello, on one of our nodes we have run into a problem with several LXC containers. Proxmox shows a bootdisk size of 0B for them:

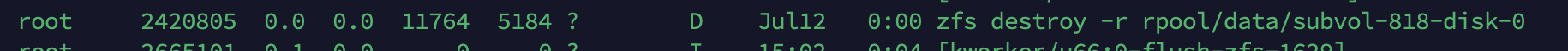

We have not noticed any pattern that triggers this problem. We can't stop these containers: shutdown and stop tasks for them never finish. We also tried to stop one container by killing all processes associated with it. That did stop the container, but now we can't do anything else with it, not even destroy it, because destroying the CT fails with the ZFS error "cannot destroy 'rpool/data/subvol-855-disk-0': dataset is busy". We use ZFS as the storage for container disks on this node. Countless attempts to find out which process is using this dataset and to get the dataset deleted have not succeeded. What can cause this problem, and how can it be solved?
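One possible reason for "dataset is busy" is that the dataset is still mounted inside some other process's mount namespace (for example, a leftover process from the killed container), which tools run from the host's own namespace may not reveal. A minimal sketch of such a check, assuming a Linux host with `/proc` available: it scans `/proc/<pid>/mountinfo` for every process and reports those whose mount table still contains a `zfs` mount whose source is the given dataset. The function names `parse_mountinfo_line` and `find_holders` are hypothetical helpers, not part of any Proxmox or ZFS tooling. Note that if the dataset is still mounted on the host itself, every host process will be listed; grouping by the reported mount namespace makes the odd ones out easier to spot.

```python
import os
import sys


def parse_mountinfo_line(line):
    """Return (mount_point, fs_type, source) for one mountinfo line, or None if malformed.

    Format per proc(5): fields before " - " are mount ID, parent ID, major:minor,
    root, mount point, options, optional fields; after " - " come fs type,
    mount source and super options.
    """
    left, _, right = line.rstrip("\n").partition(" - ")
    left_fields = left.split()
    right_fields = right.split()
    if len(left_fields) < 5 or not right_fields:
        return None
    mount_point = left_fields[4]
    fs_type = right_fields[0]
    source = right_fields[1] if len(right_fields) > 1 else ""
    return mount_point, fs_type, source


def find_holders(dataset, proc_root="/proc"):
    """List (pid, mount_point, mount_namespace) for processes that still see `dataset` mounted."""
    holders = []
    try:
        entries = os.listdir(proc_root)
    except OSError:
        return holders  # no /proc (not Linux, or not accessible)
    for entry in entries:
        if not entry.isdigit():
            continue  # skip non-PID entries such as "self" or "meminfo"
        path = os.path.join(proc_root, entry, "mountinfo")
        try:
            with open(path) as f:
                lines = f.readlines()
        except OSError:
            continue  # process exited meanwhile, or permission denied
        try:
            # The namespace link looks like "mnt:[4026531840]"; it identifies the mount namespace.
            ns = os.readlink(os.path.join(proc_root, entry, "ns", "mnt"))
        except OSError:
            ns = None
        for line in lines:
            parsed = parse_mountinfo_line(line)
            if parsed is None:
                continue
            mount_point, fs_type, source = parsed
            if fs_type == "zfs" and source == dataset:
                holders.append((int(entry), mount_point, ns))
    return sorted(holders)


if __name__ == "__main__":
    # Dataset name taken from the error message above; pass another one as an argument if needed.
    target = sys.argv[1] if len(sys.argv) > 1 else "rpool/data/subvol-855-disk-0"
    for pid, mount_point, ns in find_holders(target):
        print(f"pid={pid} mnt_ns={ns} mountpoint={mount_point}")
```

Run it as root on the node (e.g. `python3 find_holders.py rpool/data/subvol-855-disk-0`); any PID it prints whose mount namespace differs from the host's is a candidate for what keeps the dataset busy.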
