Hello,

I have set up Proxmox on ZFS with two pools.

See the following for details:

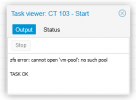

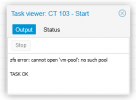

This setup works well. However, some of these containers occasionally fail to auto-start (start on boot), apparently because "vm-pool" takes some time to mount after booting. The start task of the affected CT reports "zfs error: cannot open 'vm-pool': no such pool", which prevents the CT from starting, even though the task ends with "TASK OK". If I then start the CT manually, it starts as expected.

Is there a way to make sure that these CTs start only after the pool has been mounted? Or is there a better way to share a ZFS filesystem between CTs, preferably without using privileged containers and without straying too far from a standard Proxmox installation/configuration?
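To make the first question concrete, this is roughly the kind of wait-then-start logic I have in mind. It is only a minimal sketch: the function name `wait_for_pool`, the `ZPOOL_CMD` override, and the timeout values are my own assumptions, not something Proxmox or ZFS ships.

```shell
#!/bin/sh
# Sketch only: wait_for_pool and ZPOOL_CMD are hypothetical names,
# not part of Proxmox or ZFS.

# Poll until `zpool list` can see the pool, or give up after a timeout (seconds).
wait_for_pool() {
    pool=$1
    timeout=${2:-60}
    elapsed=0
    # ZPOOL_CMD allows swapping out the zpool binary (e.g. for a dry run);
    # it defaults to the real zpool command.
    until "${ZPOOL_CMD:-zpool}" list -H -o name "$pool" >/dev/null 2>&1; do
        if [ "$elapsed" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        elapsed=$((elapsed + 1))
    done
    return 0
}

# Example use on the host (CT 103, going by the rootfs name in the config):
#   wait_for_pool vm-pool 120 && pct start 103
```

I am not sure where such a hook would best live on a standard installation, or whether it would conflict with the built-in "start on boot" handling, which is part of why I am asking.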

The two pools are:

- rpool

- vm-pool

The CT config and the `zfs list` output:

Code:

```
....
arch: amd64
cores: 4
features: nesting=1
hostname: galago
memory: 2048
mp0: /vm-pool/storage/fileserver/Media/Images/Galago,mp=/Galago
net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=0A:FB:4B:3D:23:22,ip=dhcp,ip6=dhcp,type=veth
onboot: 1
ostype: debian
rootfs: local-zfs:subvol-103-disk-0,size=64G
swap: 512
unprivileged: 1
```

Code:

```
root@pve-office:~# zfs list
NAME                           USED  AVAIL  REFER  MOUNTPOINT
rpool                          134G  90.5G   104K  /rpool
rpool/ROOT                    14.7G  90.5G    96K  /rpool/ROOT
rpool/ROOT/pve-1              14.7G  90.5G  13.8G  /
rpool/data                     111G  90.5G   120K  /rpool/data
rpool/data/subvol-102-disk-0  2.81G  5.19G  2.81G  /rpool/data/subvol-102-disk-0
rpool/data/subvol-103-disk-0  1.66G  62.3G  1.66G  /rpool/data/subvol-103-disk-0
rpool/data/subvol-104-disk-0  2.48G  61.5G  2.48G  /rpool/data/subvol-104-disk-0
rpool/data/subvol-105-disk-0   776M  63.2G   776M  /rpool/data/subvol-105-disk-0
rpool/data/subvol-107-disk-0  1.16G  90.5G  1.16G  /rpool/data/subvol-107-disk-0
rpool/data/subvol-108-disk-0  4.50G  59.5G  4.50G  /rpool/data/subvol-108-disk-0
rpool/data/subvol-110-disk-0   857M  31.2G   857M  /rpool/data/subvol-110-disk-0
rpool/data/subvol-111-disk-0  2.35G  29.6G  2.35G  /rpool/data/subvol-111-disk-0
rpool/data/subvol-112-disk-0   550M  90.5G   550M  /rpool/data/subvol-112-disk-0
rpool/data/subvol-114-disk-0  1.23G  62.8G  1.23G  /rpool/data/subvol-114-disk-0
rpool/data/subvol-115-disk-0  10.2G  21.8G  10.2G  /rpool/data/subvol-115-disk-0
rpool/data/vm-100-disk-0      38.0G  90.5G  38.0G  -
rpool/data/vm-109-disk-0        76K  90.5G    76K  -
rpool/data/vm-109-disk-1      44.6G  90.5G  44.6G  -
vm-pool                       6.44T  2.51T   121K  /vm-pool
vm-pool/backups                359G  2.51T   359G  /vm-pool/backups
vm-pool/base-101-disk-0       17.4G  2.51T  17.4G  -
vm-pool/storage               5.67T  2.51T  5.67T  /vm-pool/storage
vm-pool/subvol-112-disk-0      368G  1.64T   368G  /vm-pool/subvol-112-disk-0
vm-pool/subvol-113-disk-0     2.12G   510G  2.12G  /vm-pool/subvol-113-disk-0
vm-pool/vm-106-disk-0         45.2G  2.51T  45.2G  -
```
