Nothing seems to add up anywhere. I know zfs space reporting is its own situation, but please let me know whether I'm actually in danger and how I can free up some space if it is a problem.

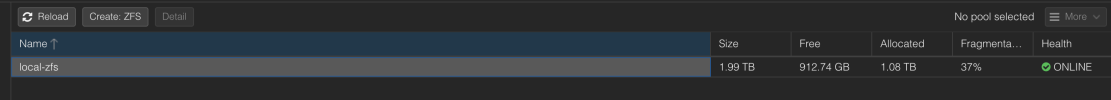

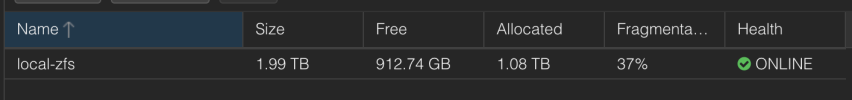

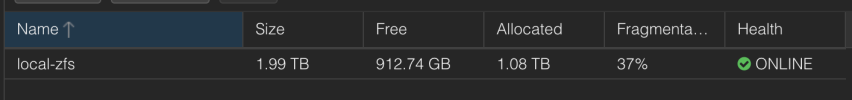

zfs under Disks seems to be happy (I have 2x 2TB NVMe drives in a zfs mirror). Only a lil over 50% used.

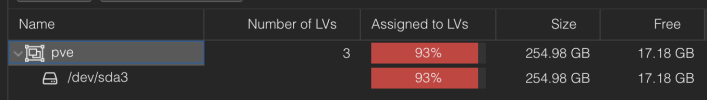

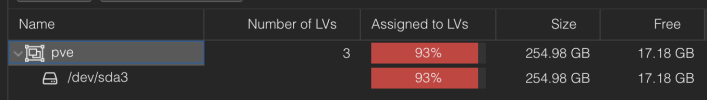

Under Disks I see everything, but look at my boot drive specifically (the one that holds the Proxmox install; remember this 256GB value):

What does "Number of LVs" mean, and why is the usage so high? The only thing on my boot drive should be the Proxmox host itself.

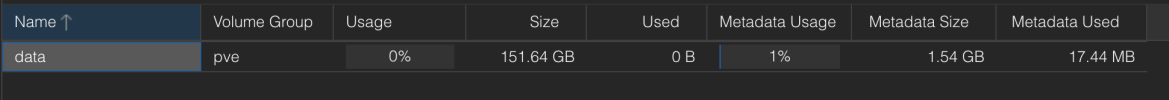

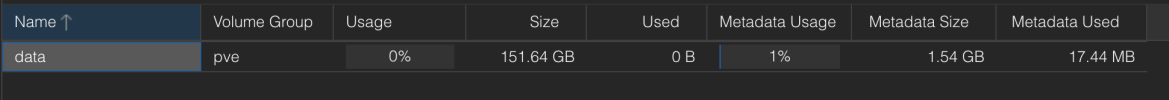

I don't use LVM-thin (AFAIK), yet I have one here with a size of "151GB". Can I tell whether anything is actually using it? Can I delete it?

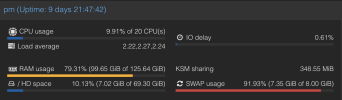

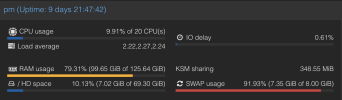

As for the Summary screen for the Proxmox host itself, it thinks it's only using 7GB of 70GB? 10%? Huh?

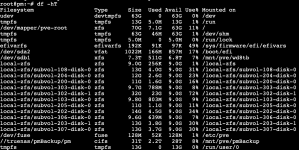

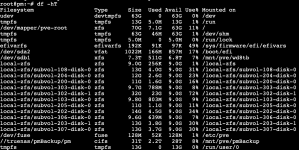

df is reporting... whatever is going on here. I'm pretty sure I can ignore all the zfs output, correct? And pve-root lines up with the findings from the Summary screen.

Ignore sdb1 and the network share, those are used for backup purposes.

zpool list and zfs list output:

zpool seems to match up with the zfs UI findings above (I'm assuming some of the difference is due to TiB/GiB vs TB/GB reporting here?)
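
For a rough sense of how big the unit gap alone is, here is a small sketch. It assumes each drive is exactly 2 TB as labeled (decimal, 10^12 bytes) and that the tools report in binary units (1 TiB = 2^40 bytes). Since a two-way mirror stores every block on both drives, usable space is roughly one drive's worth.

```python
# Rough unit conversion: decimal TB (drive labels) vs binary TiB (tool output).
# Assumption: each NVMe drive is exactly 2 TB as marketed.
TB = 10**12   # 1 terabyte (SI, decimal)
TiB = 2**40   # 1 tebibyte (IEC, binary)


def tb_to_tib(tb: float) -> float:
    """Express a capacity given in decimal TB as binary TiB."""
    return tb * TB / TiB


# A two-way mirror keeps a copy of every block on each drive,
# so usable capacity is roughly that of a single 2 TB drive.
drive_tb = 2
print(f"{drive_tb} TB = {tb_to_tib(drive_tb):.2f} TiB")
```

So about 9% of the apparent "missing" space is just TB vs TiB, before any filesystem overhead.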

Why does zfs list show an even smaller amount of available free space?

I did discover that under Datacenter > Storage > local-zfs I did not have thin provision checked. I have since checked it.

Is there a way to convert (or restore) previously thick-provisioned disks into thin ones to free up more space?

Thank you for any help.
