Sorry for reviving this old thread, but it is relevant to my question.

My environment:

PVE: 7.3-3

Ceph: 17.2.5

Disks: 3 × 120 GB NVMe

My physical PVE machine uses SATA SSDs, which might give low results for that reason alone, so I ran the PVE + Ceph test below in nested virtualization under VMware instead.

Test environment setup process:

1. Install PVE 7.3-3 from the image as usual

2. Install Ceph

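3. Reconfigure the CRUSH map so that replicas are spread across OSDs instead of hosts (all three OSDs sit on the single host pve), i.e. change

step chooseleaf firstn 0 type host

to

step chooseleaf firstn 0 type osd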

4. Upload the CentOS 7.9 image

5. Create a VM

6. Install fio, fio-cdm (https://github.com/xlucn/fio-cdm) and Miniconda

7. Run python fio-cdm to start the test.
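For reference, step 3 is usually done by exporting and decompiling the CRUSH map (`ceph osd getcrushmap -o crush.bin`, then `crushtool -d crush.bin -o crush.txt`), editing the text, and recompiling and injecting it (`crushtool -c crush.txt -o crush.new`, then `ceph osd setcrushmap -i crush.new`). The text edit itself can be sketched in Python as below. This is only a sketch: the helper name `host_to_osd_failure_domain` is hypothetical, and it assumes the rule contains exactly the `step chooseleaf firstn 0 type host` line quoted above.

```python
import re

# Hypothetical helper (not part of Ceph): rewrite every
# "step chooseleaf firstn 0 type host" line of a decompiled CRUSH map
# (crushtool -d output) to "type osd", so replicas may land on
# different OSDs of the same host.
CHOOSELEAF_HOST = re.compile(
    r"^([ \t]*step chooseleaf firstn 0 type )host\b", re.MULTILINE
)


def host_to_osd_failure_domain(crush_text: str) -> str:
    """Return the CRUSH map text with the failure domain switched from host to osd."""
    return CHOOSELEAF_HOST.sub(r"\1osd", crush_text)


if __name__ == "__main__":
    # Illustrative rule only; a real decompiled map contains more sections.
    sample_rule = (
        "rule replicated_rule {\n"
        "\tid 0\n"
        "\ttype replicated\n"
        "\tstep take default\n"
        "\tstep chooseleaf firstn 0 type host\n"
        "\tstep emit\n"
        "}\n"
    )
    print(host_to_osd_failure_domain(sample_rule))
```

Lines that do not match the pattern are left untouched, so the rest of the map survives the edit unchanged.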

The default fio-cdm test configuration:

Code:

(base) [root@localhost fio-cdm]# ./fio-cdm -f test
(base) [root@localhost fio-cdm]# cat test
[global]
ioengine=libaio
filename=.fio_testmark
directory=/root/fio-cdm
size=1073741824.0
direct=1
runtime=5
refill_buffers
norandommap
randrepeat=0
allrandrepeat=0
group_reporting

[seq-read-1m-q8-t1]
rw=read
bs=1m
rwmixread=0
iodepth=8
numjobs=1
loops=5
stonewall

[seq-write-1m-q8-t1]
rw=write
bs=1m
rwmixread=0
iodepth=8
numjobs=1
loops=5
stonewall

[seq-read-1m-q1-t1]
rw=read
bs=1m
rwmixread=0
iodepth=1
numjobs=1
loops=5
stonewall

[seq-write-1m-q1-t1]
rw=write
bs=1m
rwmixread=0
iodepth=1
numjobs=1
loops=5
stonewall

[rnd-read-4k-q32-t16]
rw=randread
bs=4k
rwmixread=0
iodepth=32
numjobs=16
loops=5
stonewall

[rnd-write-4k-q32-t16]
rw=randwrite
bs=4k
rwmixread=0
iodepth=32
numjobs=16
loops=5
stonewall

[rnd-read-4k-q1-t1]
rw=randread
bs=4k
rwmixread=0
iodepth=1
numjobs=1
loops=5
stonewall

[rnd-write-4k-q1-t1]
rw=randwrite
bs=4k
rwmixread=0
iodepth=1
numjobs=1
loops=5
stonewall
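The Q32T16 jobs keep up to iodepth × numjobs = 32 × 16 = 512 I/Os in flight, while the Q1 T1 jobs keep just 1, so the first mostly measures how much parallel I/O the storage can absorb and the second mostly measures per-request latency. The sketch below reads a trimmed copy of the job file with Python's configparser and computes that product per job. Treating a fio job file as INI is an assumption that holds for this simple file (bare flags such as refill_buffers need allow_no_value=True) but not for every fio feature; `outstanding_ios` is a hypothetical helper name.

```python
import configparser

# Trimmed copy of the fio-cdm job file: [global] plus two of the jobs.
JOB_FILE = """
[global]
ioengine=libaio
direct=1
refill_buffers
norandommap

[rnd-read-4k-q32-t16]
rw=randread
bs=4k
iodepth=32
numjobs=16

[rnd-read-4k-q1-t1]
rw=randread
bs=4k
iodepth=1
numjobs=1
"""


def outstanding_ios(job_text: str) -> dict[str, int]:
    """Map each job section to iodepth * numjobs (fio defaults both to 1)."""
    # fio allows bare flags such as "refill_buffers", hence allow_no_value.
    parser = configparser.ConfigParser(allow_no_value=True)
    parser.read_string(job_text)
    result = {}
    for section in parser.sections():
        if section == "global":
            continue  # global options, not a job
        iodepth = parser.getint(section, "iodepth", fallback=1)
        numjobs = parser.getint(section, "numjobs", fallback=1)
        result[section] = iodepth * numjobs
    return result


if __name__ == "__main__":
    for name, depth in outstanding_ios(JOB_FILE).items():
        print(f"{name}: up to {depth} I/Os in flight")
```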

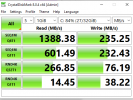

Here are the results of the same test run directly on this machine:

Code:

E:\Programing\PycharmProjects\fio-cdm>python fio-cdm
tests: 5, size: 1.0GiB, target: E:\Programing\PycharmProjects\fio-cdm 639.2GiB/931.2GiB
|Name        |  Read(MB/s)| Write(MB/s)|
|------------|------------|------------|
|SEQ1M Q8 T1 |     2081.70|     1720.74|
|SEQ1M Q1 T1 |     1196.77|     1332.19|
|RND4K Q32T16|     1678.69|     1687.20|
|. IOPS      |   409835.70|   411913.22|
|. latency us|     1233.11|     1022.39|
|RND4K Q1 T1 |       40.58|       97.54|
|. IOPS      |     9908.40|    23813.04|
|. latency us|       99.94|       40.53|
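The MB/s and IOPS rows describe the same measurement. With bs=4k, the numbers in this table match MB/s = IOPS × 4096 / 10^6, which suggests fio-cdm reports decimal megabytes (10^6 bytes). A minimal sketch of that conversion; the function name is hypothetical:

```python
BLOCK_SIZE = 4096  # bytes, from bs=4k in the job file


def iops_to_mb_per_s(iops: float, block_size: int = BLOCK_SIZE) -> float:
    """Throughput in decimal MB/s (10**6 bytes) for a given IOPS and block size."""
    return iops * block_size / 1_000_000


if __name__ == "__main__":
    # RND4K Q32T16 read and RND4K Q1 T1 read IOPS from the host table.
    for iops in (409835.70, 9908.40):
        print(f"{iops:>10.2f} IOPS -> {iops_to_mb_per_s(iops):8.2f} MB/s")
```

For example, 409,835.70 IOPS × 4,096 bytes works out to 1678.69 MB/s, the RND4K Q32T16 read value in the table.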

And here are the results inside the CentOS VM on the nested PVE + Ceph setup:

Code:

(base) [root@localhost fio-cdm]# ./fio-cdm
tests: 5, size: 1.0GiB, target: /root/fio-cdm 2.0GiB/27.8GiB
|Name        |  Read(MB/s)| Write(MB/s)|
|------------|------------|------------|
|SEQ1M Q8 T1 |     1143.50|        4.81|
|SEQ1M Q1 T1 |      504.79|      193.58|
|RND4K Q32T16|      111.63|       28.25|
|. IOPS      |    27254.04|     6896.46|
|. latency us|    18699.01|    73813.12|
|RND4K Q1 T1 |        6.18|        2.10|
|. IOPS      |     1508.50|      512.79|
|. latency us|      658.65|     1943.99|

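The gap is huge, especially in the random 4K test with queue depth 32 and 16 threads: 27,254 read / 6,896 write IOPS in the VM versus roughly 410,000 / 412,000 on the host.

I can't believe this is an NVMe disk.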
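A quick sanity check on these numbers is Little's law: the average number of I/Os in flight equals IOPS × mean latency. The sketch below applies it to rows copied from the two tables. The function names are made up for illustration, and it treats fio-cdm's "latency us" row as the mean completion latency in microseconds, which is an assumption about what that row reports.

```python
# Little's law: average I/Os in flight = IOPS x mean latency (in seconds).
# Assumes the "latency us" rows are mean per-I/O latency in microseconds.


def in_flight(iops: float, latency_us: float) -> float:
    """Average number of outstanding I/Os implied by IOPS and mean latency."""
    return iops * latency_us / 1_000_000


def iops_at_depth(depth: int, latency_us: float) -> float:
    """IOPS a fixed number of outstanding I/Os can sustain at a given latency."""
    return depth / (latency_us / 1_000_000)


if __name__ == "__main__":
    # (label, IOPS, latency in microseconds) copied from the tables above.
    samples = [
        ("host RND4K Q32T16 read ", 409835.70, 1233.11),
        ("VM   RND4K Q32T16 read ", 27254.04, 18699.01),
        ("VM   RND4K Q32T16 write", 6896.46, 73813.12),
        ("VM   RND4K Q1 T1 write ", 512.79, 1943.99),
    ]
    for label, iops, lat in samples:
        print(f"{label}: ~{in_flight(iops, lat):.1f} I/Os in flight")
```

Plugging in the table values gives roughly 505 to 510 I/Os in flight for the Q32T16 rows, close to the 32 × 16 = 512 the job requests, and about 1 for the Q1 T1 row. If that reading is right, the queue is full in both environments and IOPS is capped at about 512 divided by per-I/O latency, so the 15× (read) to 60× (write) gap traces back to latency per request (about 18.7 ms and 73.8 ms in the VM versus about 1.2 ms and 1.0 ms on the host) rather than to the VM not issuing enough parallel I/O.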

Other info:

Code:

root@pve:~# ceph osd df
ID  CLASS  WEIGHT   REWEIGHT  SIZE     RAW USE  DATA     OMAP  META     AVAIL    %USE  VAR   PGS  STATUS
 0    ssd  0.11719   1.00000  120 GiB  2.3 GiB  2.2 GiB   0 B  138 MiB  118 GiB  1.95  1.07   24      up
 1    ssd  0.11719   1.00000  120 GiB  2.5 GiB  2.3 GiB   0 B  158 MiB  117 GiB  2.09  1.14   25      up
 2    ssd  0.11719   1.00000  120 GiB  1.7 GiB  1.6 GiB   0 B  106 MiB  118 GiB  1.44  0.79   17      up
                       TOTAL  360 GiB  6.6 GiB  6.2 GiB   0 B  402 MiB  353 GiB  1.82
MIN/MAX VAR: 0.79/1.14  STDDEV: 0.28

root@pve:~# ceph osd tree
ID  CLASS  WEIGHT   TYPE NAME         STATUS  REWEIGHT  PRI-AFF
-1         0.35155  root default
-3         0.35155      host pve
 0    ssd  0.11719          osd.0         up   1.00000  1.00000
 1    ssd  0.11719          osd.1         up   1.00000  1.00000
 2    ssd  0.11719          osd.2         up   1.00000  1.00000

Even SATA SSDs reach 20K+ IOPS with the same test configuration when tested on VMware vSAN.