My PBS setup consists of:

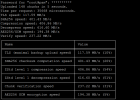

4 x 4 TB SATA (5400 rpm) -> ZFS RAID10: PBS + datastore on dataset /rpool/datastore1

2 x SATADOM SSD 64 GB -> raidz1 special device

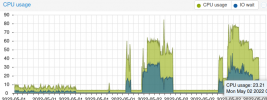

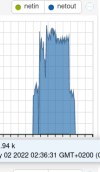

Backup speed reaches 85-95% of 1 Gbit/s wire speed, which is quite satisfactory, but restore speed is somewhat questionable.

When restoring just one VM at a time, restore speed stays around 40% of the bandwidth (approx. 400-450 Mbit/s), but when restoring several VMs concurrently (to the same host), the 1 Gbit/s link is fully utilized!
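To put these percentages in more familiar units, here is a small conversion sketch. It assumes a nominal 1 Gbit/s link, decimal units, and ignores protocol overhead (TCP/TLS), so real payload rates will be somewhat lower.

```python
# Convert the link-utilisation figures above into Mbit/s and MB/s.
# Assumption: nominal 1 Gbit/s link, decimal units, no protocol overhead.
LINK_MBIT = 1000.0  # nominal 1 Gbit/s


def utilisation_to_mbit(fraction: float, link_mbit: float = LINK_MBIT) -> float:
    """Fraction of the link (0.0-1.0) -> megabits per second."""
    return fraction * link_mbit


def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Megabits per second -> megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8


if __name__ == "__main__":
    for label, lo, hi in [
        ("backup", 0.85, 0.95),
        ("single restore", 0.40, 0.45),
    ]:
        lo_mb = mbit_to_mbyte(utilisation_to_mbit(lo))
        hi_mb = mbit_to_mbyte(utilisation_to_mbit(hi))
        print(f"{label}: {lo_mb:.1f}-{hi_mb:.1f} MB/s")
```

Roughly speaking, a single restore lands at about 50-56 MB/s, versus well over 100 MB/s for backups.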

So PBS in this configuration is capable of serving restore data at full wire speed.
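One way to picture why concurrency helps is a toy latency-bound model. This is only an assumption, not confirmed PBS behaviour: if a single restore stream requests one chunk at a time, its throughput is capped by per-chunk latency (seeks, decompression, verification) rather than by the link, while several streams overlap those waits until the link saturates. The 4 MiB chunk size and the 0.08 s per-chunk time below are hypothetical values chosen for illustration, not measurements.

```python
# Toy model (assumption): each restore stream fetches chunks serially, so
# per-stream throughput = chunk_size / time_per_chunk, and N concurrent
# streams add up until they hit the link capacity.


def aggregate_throughput_mbit(
    streams: int,
    chunk_mib: float,
    per_chunk_s: float,
    link_mbit: float = 1000.0,
) -> float:
    """Aggregate throughput in Mbit/s for `streams` serial chunk fetchers."""
    chunk_mbit = chunk_mib * 1024 * 1024 * 8 / 1e6  # MiB -> megabits
    per_stream = chunk_mbit / per_chunk_s           # Mbit/s for one stream
    return min(streams * per_stream, link_mbit)     # capped by the link


if __name__ == "__main__":
    # Hypothetical: 4 MiB chunks, 0.08 s to fetch and process each one.
    for n in (1, 2, 3, 4):
        rate = aggregate_throughput_mbit(n, chunk_mib=4, per_chunk_s=0.08)
        print(f"{n} stream(s): {rate:.0f} Mbit/s")
```

Under these made-up numbers, one stream sits near 420 Mbit/s while three or more fill the 1 Gbit/s link, which matches the pattern described; whether PBS restores actually behave this way is exactly the open question.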

Is there any reason why a single VM cannot be restored faster than about 400 Mbit/s?

Thank you very much in advance

BR

Tonci