I'm facing this issue and I'm struggling to solve it.

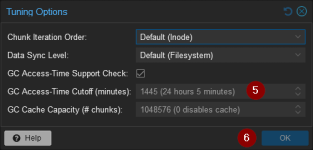

I can't even run garbage collection, as it fails with the same error.

I'll try moving some chunk files elsewhere so that garbage collection can run, but it isn't a pleasant task and it is very stressful.

I wish there were an option to stop the backup process from writing to the ZFS storage once its free space drops to something like 5 GB (i.e. to prevent the disk from filling up completely), so that there is always some space left for GC to run.
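What I'm asking for boils down to a free-space check before each write. Here is a rough sketch of the idea, assuming a hypothetical hook that runs before the backup writes data. `RESERVE_BYTES`, `free_bytes` and `write_allowed` are names I made up for illustration; they are not existing Proxmox Backup Server options.

```python
import shutil

# Hypothetical reserve: keep about 5 GiB free so garbage collection can still run.
RESERVE_BYTES = 5 * 1024**3


def free_bytes(path: str) -> int:
    """Return the free space, in bytes, on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free


def write_allowed(free: int, incoming: int, reserve: int = RESERVE_BYTES) -> bool:
    """Allow a write of `incoming` bytes only if at least `reserve` bytes stay free afterwards."""
    return free - incoming >= reserve


if __name__ == "__main__":
    datastore = "/"  # hypothetical path; point this at the datastore mount
    free = free_bytes(datastore)
    example_write = 4 * 1024**2  # arbitrary example write size (4 MiB)
    print(f"free: {free / 1024**3:.1f} GiB, write allowed: {write_allowed(free, example_write)}")
```

The backup would refuse (or pause) as soon as `write_allowed` returns `False`, leaving the reserved space untouched for GC.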
