Hello Guys,

I have a question for you.

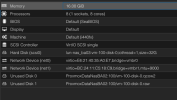

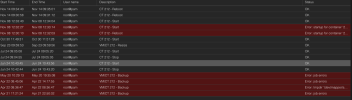

I need to back up a container with a 1 TB mount point. To complete the backup, I have to run it in stop mode, and it takes at least 6 hours.

I recently updated PVE and PBS to the latest release and selected "metadata" as the change detection mode.

Can you help me improve the performance of this backup process? I need to complete it in less time.

I trust your expertise and appreciate any assistance.

Thanks.
