Merry Christmas, everyone!

I'm trying to set up my PVE backups with PBS. Currently, I'm just doing a weekly snapshot plus a daily rsnapshot backup for OMV. I don't like that approach, so I installed PBS. It's running quite well for the CTs/VMs, even though the datastore is a CIFS mount hosted in a Hetzner datacenter in Germany.

Unfortunately, I've run into an issue that I can't find a way to fix.

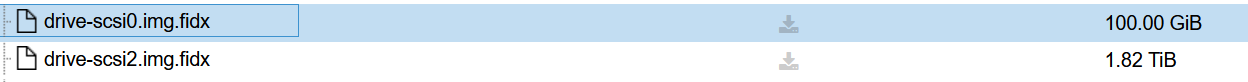

I'm running a Debian VM with OpenMediaVault. It has one disk for the OS (100G, maybe 10G used) and another for data (2T, about 250G used), which is attached "directly" to the VM (via /dev/disk/by-id).

So I started the backup job, but it looks like it's also backing up the empty space:

```
INFO: 0% (412.0 MiB of 1.9 TiB) in 3s, read: 137.3 MiB/s, write: 97.3 MiB/s
INFO: 1% (19.6 GiB of 1.9 TiB) in 1h 31m 45s, read: 3.6 MiB/s, write: 3.3 MiB/s
```
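As a rough sanity check, the numbers in that log can be worked through in a few lines of Python. This is only a sketch under my own assumptions: that "2T" is a decimal 2 TB disk, that "100G" is 100 GiB, and that about 10G + 250G is actually in use. Under those assumptions, the 1.9 TiB job size matches the full provisioned size of both disks rather than the ~0.25 TiB in use, and at the observed ~3.6 MiB/s the whole job would take roughly six days.

```python
# Rough sanity check of the backup progress lines quoted above.
# ASSUMPTIONS (not stated in the log): "2T" = 2 TB decimal (as disks are sold),
# "100G" = 100 GiB, and roughly 10 GiB + 250 GiB actually used.

TIB = 1024 ** 4
GIB = 1024 ** 3
MIB = 1024 ** 2

os_disk = 100 * GIB        # OS disk, ~10G used
data_disk = 2 * 10 ** 12   # data disk, ~250G used

# Provisioned size of both disks vs. space actually in use, in TiB.
provisioned_tib = (os_disk + data_disk) / TIB
used_tib = (10 * GIB + 250 * GIB) / TIB

# Second progress line: 19.6 GiB processed after 1h 31m 45s.
elapsed_s = 1 * 3600 + 31 * 60 + 45
avg_mib_s = 19.6 * GIB / MIB / elapsed_s

# Extrapolated time to read the full 1.9 TiB job at that average rate.
eta_days = 1.9 * TIB / MIB / avg_mib_s / 86400

print(f"provisioned: {provisioned_tib:.2f} TiB, used: {used_tib:.2f} TiB")
print(f"average read rate: {avg_mib_s:.2f} MiB/s")
print(f"estimated time for 1.9 TiB: {eta_days:.1f} days")
```

If the real disk sizes differ from these assumptions, adjust `os_disk` and `data_disk` accordingly.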

According to a few answers I found on Google, it seems I need to convert this disk to LVM-thin. Is that right?
