New to Proxmox, but not to virtualization. I installed a three-node cluster last week, configured Ceph for shared storage, and created some VMs, and everything seems to work normally. I can migrate VMs from host to host without issue, and when I put a host into maintenance mode its VMs move automatically.

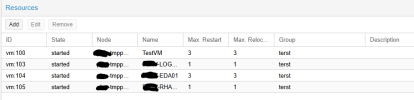

Issue: If a node loses power, it takes about 2 minutes for the VMs to automatically re-register on other hosts in the cluster, but their OSes never boot. 3 minutes later the VMs are still offline, so I power the failed host back on. It comes back online, and 5 minutes after that the VMs finally start up on the hosts they moved to and are back online. From then on I can move them around with no issue.

What am I doing wrong, and what additional info can I give to help? Or is there simply no disaster failover (I can't see how that could be)?
