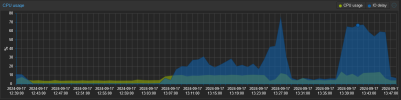

After update today getting high IO delay

- Thread starter masterpete

Of course, this can have many causes. Your stats only show one hour. Is the I/O delay currently still in the same state?

You can, for example, use these commands to output information for I/O wait.

Code:

iotop

watch -n1 iostat

With htop you can also sort processes. Maybe new data is being read or updated even after the restart/reboot, e.g. by "slocate" or "mlocate" locally, or from a virtual machine...

I/O wait is sometimes a bit difficult to debug. Normally, these programs should provide clues as to the cause.
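If you want to cross-check the I/O wait figure those tools report, it can be derived by hand from two snapshots of the aggregate `cpu` line in `/proc/stat`. This is only a minimal sketch: it assumes the usual Linux field order (user, nice, system, idle, iowait, irq, softirq, steal), and the function name `iowait_pct` is made up for this example.

```shell
#!/bin/sh
# iowait_pct: hypothetical helper, not part of any package.
# Takes two snapshots of the aggregate "cpu" line from /proc/stat and
# prints the share of CPU time spent in iowait between them, in percent.
iowait_pct() {
    printf '%s\n%s\n' "$1" "$2" | awk '
        {
            total = 0
            # Sum user..steal (fields 2-9); guest time is already
            # accounted for inside user/nice, so it is not added again.
            for (i = 2; i <= 9 && i <= NF; i++) total += $i
            t[NR] = total   # total jiffies in this snapshot
            w[NR] = $6      # iowait jiffies in this snapshot
        }
        END {
            dt = t[2] - t[1]
            if (dt <= 0) { print "0.0"; exit }
            printf "%.1f\n", 100 * (w[2] - w[1]) / dt
        }'
}
```

On a live host you could take the snapshots with `a=$(grep '^cpu ' /proc/stat); sleep 5; b=$(grep '^cpu ' /proc/stat)` and then call `iowait_pct "$a" "$b"`. A value that stays high while no guest is doing anything points at the host or its storage rather than at a single VM.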

Of course, there is also the journal log:

man journalctl

The priority switch can help you filter events:

-p, --priority=

Filter output by message priorities or priority ranges. Takes either a single numeric or textual log level (i.e. between 0/"emerg" and 7/"debug"), or a range of numeric/text log levels in the form FROM..TO. The log levels are the usual syslog log levels as documented in syslog(3), i.e. "emerg" (0), "alert" (1), "crit" (2), "err" (3), "warning" (4), "notice" (5), "info" (6), "debug" (7). If a single log level is specified, all messages with this log level or a lower (hence more important) log level are shown. If a range is specified, all messages within the range are shown, including both the start and the end value of the range. This will add "PRIORITY=" matches for the specified priorities.

For example:

Code:

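journalctl -r # Reverse order, newest entries first

journalctl -f # Follow new entries live

journalctl -r -p3 # Priority err (3) and more severe

journalctl -r -p4 # Priority warning (4) and more severe

Do you have this I/O load even when no virtual machine is running?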
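When the priority filter still returns a lot of lines, it can help to tally which source produces most of them. The following is a rough sketch, assuming journalctl's default "short" output format (month, day, time, host, then the source followed by a colon); `count_by_source` is a hypothetical helper name.

```shell
#!/bin/sh
# count_by_source: hypothetical helper, not part of journalctl.
# Reads journal lines in the default "short" format on stdin and prints
# how many lines each source (kernel, systemd, ...) produced, most first.
count_by_source() {
    awk '
        # Skip journalctl marker lines such as "-- Boot ... --".
        !/^-- / && NF >= 5 {
            src = $5
            sub(/:$/, "", src)           # drop the trailing colon
            sub(/\[[0-9]+\]$/, "", src)  # drop a "[pid]" suffix if present
            count[src]++
        }
        END { for (s in count) print count[s], s }
    ' | sort -k1,1nr -k2,2
}
```

For example, `journalctl -b -p4 --no-pager | count_by_source` would summarise everything at warning level or more severe since the current boot.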

I can't find any real errors, but there is a lot of this kind of output from my Docker LXC running Ubuntu. Is this bad?

Code:

Sep 18 14:42:39 pve kernel: vethaa647db (unregistering): left promiscuous mode

Sep 18 14:42:39 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered disabled state

Sep 18 14:41:37 pve kernel: br-782b7d1c0068: port 1(vethfd5a8a8) entered blocking state

Sep 18 14:41:37 pve kernel: br-782b7d1c0068: port 1(vethfd5a8a8) entered forwarding state

Sep 18 14:41:37 pve kernel: br-782b7d1c0068: port 1(vethfd5a8a8) entered disabled state

Sep 18 14:41:37 pve kernel: veth933d092: renamed from eth0

Sep 18 14:41:37 pve kernel: br-782b7d1c0068: port 1(vethfd5a8a8) entered disabled state

Sep 18 14:41:37 pve kernel: vethfd5a8a8 (unregistering): left allmulticast mode

Sep 18 14:41:37 pve kernel: vethfd5a8a8 (unregistering): left promiscuous mode

Sep 18 14:41:37 pve kernel: br-782b7d1c0068: port 1(vethfd5a8a8) entered disabled state

Sep 18 14:42:26 pve kernel: overlayfs: fs on '/var/lib/docker/overlay2/l/55HRTXOCPT747U6Q5VFRZECECW' does not support file handles, falling back to xino=off.

Sep 18 14:42:26 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered blocking state

Sep 18 14:42:26 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered disabled state

Sep 18 14:42:26 pve kernel: vethf1e5844: entered allmulticast mode

Sep 18 14:42:26 pve kernel: vethf1e5844: entered promiscuous mode

Sep 18 14:42:27 pve kernel: eth0: renamed from vetha82e83b

Sep 18 14:42:27 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered blocking state

Sep 18 14:42:27 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered forwarding state

Sep 18 14:42:31 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered disabled state

Sep 18 14:42:31 pve kernel: vetha82e83b: renamed from eth0

Sep 18 14:42:31 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered disabled state

Sep 18 14:42:31 pve kernel: vethf1e5844 (unregistering): left allmulticast mode

Sep 18 14:42:31 pve kernel: vethf1e5844 (unregistering): left promiscuous mode

Sep 18 14:42:31 pve kernel: br-7e9924cc82cc: port 1(vethf1e5844) entered disabled state

Sep 18 14:42:37 pve kernel: overlayfs: fs on '/var/lib/docker/overlay2/l/EI7S6775P2KFUYGKT3MAQIK57X' does not support file handles, falling back to xino=off.

Sep 18 14:42:37 pve kernel: overlayfs: fs on '/var/lib/docker/overlay2/l/MB7NIIN6SZZX3EFPOCL62OYM2E' does not support file handles, falling back to xino=off.

Sep 18 14:42:37 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered blocking state

Sep 18 14:42:37 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered disabled state

Sep 18 14:42:37 pve kernel: vethaa647db: entered allmulticast mode

Sep 18 14:42:37 pve kernel: vethaa647db: entered promiscuous mode

Sep 18 14:42:38 pve kernel: eth0: renamed from vethfd8fa10

Sep 18 14:42:38 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered blocking state

Sep 18 14:42:38 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered forwarding state

Sep 18 14:42:39 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered disabled state

Sep 18 14:42:39 pve kernel: vethfd8fa10: renamed from eth0

Sep 18 14:42:39 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered disabled state

Sep 18 14:42:39 pve kernel: vethaa647db (unregistering): left allmulticast mode

Sep 18 14:42:39 pve kernel: vethaa647db (unregistering): left promiscuous mode

Sep 18 14:42:39 pve kernel: br-782b7d1c0068: port 1(vethaa647db) entered disabled state

I've heard of kernel errors/problems with 6.8.12-1-pve. Could that be the problem? Can I go back? I don't know which kernel I had before.

OK, I just updated to 6.8.12-2. Same "lags". I am not sure whether I/O delay is the problem, or whether there are also problems in my LXC with Docker, which provides everything via an nginx reverse proxy and so on.

I am a little bit frustrated that I can't pinpoint the problem exactly.

This was the last upgrade. Is there any chance to go "back"?

Code:

Start-Date: 2024-09-17 11:32:57

Commandline: apt-get dist-upgrade

Install: proxmox-kernel-6.8.12-1-pve-signed:amd64 (6.8.12-1, automatic)

Upgrade: libcups2:amd64 (2.4.2-3+deb12u5, 2.4.2-3+deb12u7), pve-docs:amd64 (8.2.2, 8.2.3), libcurl4:amd64 (7.88.1-10+deb12u6, 7.88.1-10+deb12u7), initramfs-tools-core:amd64 (0.142, 0.142+deb12u1), udev:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), krb5-locales:amd64 (1.20.1-2+deb12u1, 1.20.1-2+deb12u2), python3.11:amd64 (3.11.2-6+deb12u2, 3.11.2-6+deb12u3), bind9-host:amd64 (1:9.18.24-1, 1:9.18.28-1~deb12u2), libgssapi-krb5-2:amd64 (1.20.1-2+deb12u1, 1.20.1-2+deb12u2), libcurl3-gnutls:amd64 (7.88.1-10+deb12u6, 7.88.1-10+deb12u7), pve-firmware:amd64 (3.12-1, 3.13-1), libpam-systemd:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), libaom3:amd64 (3.6.0-1, 3.6.0-1+deb12u1), pve-qemu-kvm:amd64 (9.0.0-3, 9.0.2-2), libsystemd0:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), libnss-systemd:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), libpve-guest-common-perl:amd64 (5.1.3, 5.1.4), proxmox-kernel-6.8:amd64 (6.8.8-2, 6.8.12-1), libpython3.11-minimal:amd64 (3.11.2-6+deb12u2, 3.11.2-6+deb12u3), libkrb5support0:amd64 (1.20.1-2+deb12u1, 1.20.1-2+deb12u2), systemd:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), libudev1:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), proxmox-backup-file-restore:amd64 (3.2.4-1, 3.2.7-1), libc6:amd64 (2.36-9+deb12u7, 2.36-9+deb12u8), locales:amd64 (2.36-9+deb12u7, 2.36-9+deb12u8), libssl3:amd64 (3.0.13-1~deb12u1, 3.0.14-1~deb12u2), libkrb5-3:amd64 (1.20.1-2+deb12u1, 1.20.1-2+deb12u2), ifupdown2:amd64 (3.2.0-1+pmx8, 3.2.0-1+pmx9), libpython3.11:amd64 (3.11.2-6+deb12u2, 3.11.2-6+deb12u3), qemu-server:amd64 (8.2.1, 8.2.4), bind9-dnsutils:amd64 (1:9.18.24-1, 1:9.18.28-1~deb12u2), base-files:amd64 (12.4+deb12u6, 12.4+deb12u7), libk5crypto3:amd64 (1.20.1-2+deb12u1, 1.20.1-2+deb12u2), libpython3.11-stdlib:amd64 (3.11.2-6+deb12u2, 3.11.2-6+deb12u3), proxmox-backup-client:amd64 (3.2.4-1, 3.2.7-1), proxmox-firewall:amd64 (0.4.2, 0.5.0), libpve-common-perl:amd64 (8.2.1, 8.2.2), libc-l10n:amd64 (2.36-9+deb12u7, 2.36-9+deb12u8), bind9-libs:amd64 (1:9.18.24-1, 1:9.18.28-1~deb12u2), 
python3.11-minimal:amd64 (3.11.2-6+deb12u2, 3.11.2-6+deb12u3), libc-bin:amd64 (2.36-9+deb12u7, 2.36-9+deb12u8), libsystemd-shared:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), initramfs-tools:amd64 (0.142, 0.142+deb12u1), systemd-sysv:amd64 (252.26-1~deb12u2, 252.30-1~deb12u2), curl:amd64 (7.88.1-10+deb12u6, 7.88.1-10+deb12u7), openssl:amd64 (3.0.13-1~deb12u1, 3.0.14-1~deb12u2), proxmox-termproxy:amd64 (1.0.1, 1.1.0)

End-Date: 2024-09-17 11:34:00
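To answer the "which one did I have before" part, the old and new version of a package can be read straight out of an apt history entry like the one above. This is only a sketch: it assumes the `Upgrade:` entry is on a single line in the form `name:arch (old, new), ...`, as in `/var/log/apt/history.log`, and `upgrade_versions` is a hypothetical helper name.

```shell
#!/bin/sh
# upgrade_versions: hypothetical helper, not part of apt.
# Reads apt history text on stdin and prints "old -> new" for the
# package named in $1, taken from the "Upgrade:" lines.
upgrade_versions() {
    awk -v pkg="$1" '
        /^Upgrade: / {
            sub(/^Upgrade: /, "")
            # Entries are separated by "), "; each looks like
            # "name:arch (old, new" once the closing paren is gone.
            n = split($0, entries, /\), */)
            for (i = 1; i <= n; i++) {
                e = entries[i]
                sub(/\)$/, "", e)
                split(e, parts, / \(/)
                name = parts[1]
                sub(/:.*$/, "", name)    # strip the ":amd64" suffix
                if (name == pkg) {
                    split(parts[2], v, /, /)
                    print v[1], "->", v[2]
                }
            }
        }'
}
```

Running `upgrade_versions proxmox-kernel-6.8 < /var/log/apt/history.log` against the entry above would print `6.8.8-2 -> 6.8.12-1`, which suggests the previous kernel was from the 6.8.8-2 series. If that kernel is still installed, `proxmox-boot-tool kernel list` should show it, and `proxmox-boot-tool kernel pin <version>` can select it for booting; take the exact version string from the list output rather than guessing it.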