Hey y'all,

So let me preface by saying this is the first time I have dealt with ZFS, and with ZFS on Proxmox. While waiting on 4 drives to arrive to create a RAIDZ2 pool, I played around creating smaller pools just to get the hang of how it all works. However, now that the disks have arrived, I am trying to release and remove all disks from those ZFS pools so I can create the RAIDZ2 pool mentioned above, and I keep running into a strange error. Here is the current setup for my R720XD, which is running Proxmox.

1) I have 2 1TB SSDs that are installed simply as passthrough drives. One has Proxmox installed on it and the other is empty, waiting to be configured for storage.

2) I have 8 SAS 1.2TB disks that came with the server. These drives were placed into 3 pools with various configurations: one pool had 3 disks, another had 3, and one disk was in a single-disk pool. One disk never appeared from the get-go, so I think it was fried on arrival.

3) I have 4 SAS 1.2TB disks that I just received. These disks have not been set up yet.

NOTE: these disks are ST1200MM0108, which are Seagate Secure SED FIPS 140-2 model disks, meaning they are self-encrypting drives. I think this may be related.

Disks in categories 1 and 3 above are perfectly fine and appear in Proxmox. They also appear in fdisk. No issue here.

Disks in category 2 are acting very weird. Again, I had all these disks working in a ZFS pool, but now that I have removed the pool they are throwing this error:

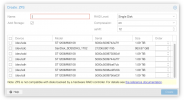

"fdisk: cannot open /dev/sdj: Input/output error" when I try to access them via fdisk. In total I had 6 drives working here, so I'm not sure what happened to most of the disks. I tried using dd to fill one of the disks with zeros to wipe it, but this seemed to do nothing: the partitions remained and I had the same issue when I rebooted after the wipe. In addition, when I run "fdisk -l" I get "The backup GPT table is corrupt, but the primary appears OK, so that will be used." for the disks I am having a hard time getting to work. In total, 5 disks are showing the same error, all of which were in a multi-disk ZFS pool.

Here is a picture of the fdisk list output for one of the broken drives (they are all the same, just different IDs and such):

Another error that seems to pop up on boot is

"pve kernel: blk_update_request: protection error, dev sdf, sector 2344225928 op 0x0:(READ) flags 0x80700 phys_seg 4 prio class 0", which I assume is related to the SED FIPS 140-2 classification. My thought is that I messed up and now have no access to these disks, since they were encrypted by some HWID and now I can't access them. I've never used secure drives, so again I'm not sure what's happening here either.

Ultimately, what I am trying to do is see where I messed up or whether these drives literally just died on me. The SMART status showed them as OK and healthy when I had them in the ZFS pool, and running "smartctl" on the disks shows their health as OK through the CLI.
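
For anyone who wants to compare the boot error across several disks, here is a minimal sketch that pulls the fields out of the kernel message quoted above and converts the sector number into a byte offset. The function name `parse_blk_error` and the regular expression are illustrative assumptions, not part of any Proxmox or kernel tool. The only fact relied on is that the Linux block layer reports sectors in 512-byte units.

```python
import re

# Pattern for the "blk_update_request" message quoted in this post.
# It is an assumption that other lines follow the same layout.
LOG_PATTERN = re.compile(
    r"blk_update_request: (?P<error>.+?) error, "
    r"dev (?P<dev>\S+), sector (?P<sector>\d+) "
    r"op (?P<op>0x[0-9a-fA-F]+):\((?P<op_name>\w+)\) "
    r"flags (?P<flags>0x[0-9a-fA-F]+)"
)

# The kernel block layer counts sectors in 512-byte units.
KERNEL_SECTOR_BYTES = 512


def parse_blk_error(line):
    """Return the fields of a blk_update_request line as a dict, or None."""
    match = LOG_PATTERN.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["sector"] = int(fields["sector"])
    fields["byte_offset"] = fields["sector"] * KERNEL_SECTOR_BYTES
    return fields


line = ("pve kernel: blk_update_request: protection error, dev sdf, "
        "sector 2344225928 op 0x0:(READ) flags 0x80700 phys_seg 4 prio class 0")
info = parse_blk_error(line)
print(info["error"], info["dev"], info["op_name"], info["byte_offset"])
```

Running the same function over the lines from `dmesg` or the journal for each affected device would show whether the failing reads cluster at the same offsets on every disk. That is only a way to organize the evidence, not a diagnosis.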

Anyone have any ideas? I'm pretty much stuck and I'm about to throw these disks in the EOL pile, but I want to make sure before I do. What makes me think they are not dead disks is the fact that S.M.A.R.T. doesn't pick up on anything and I was successfully using these disks HOURS before I went through with the destruction process.

Thanks in advance and please let me know what other info may be useful!
