Hello,

We're in process of upgrading a 4 node cluster to PVE6, but we would like to know if it's possible to add a new PVE6 node to the cluster if we still have one node with 5.4. Here is our environment:

ENVIRONMENT:

node0: PVE 5.4 (with Corosync 3) -> with VMs running on local-lvm

node1: PVE 6.2 (already upgraded) -> NO VMs running

node2: PVE 6.2 (already upgraded) -> with VMs running on local-lvm

node3: PVE 6.2 (already upgraded) -> with VMs running on local-lvm

All nodes are working with HW RAID controller and local storage (LVM Thin).

Our upgrading procedure consists on moving out all VMs from a node before upgrading it, and our intention was to move VMs from node0 to node1 and finish the cluster upgrade by upgrading node0.

THE PROBLEM:

The problem is node1 has started to fail. Last week we saw these errors on syslog (without any other failure on the server). We sent the logs to our provider, and the techs on the datacenter changed the offending ECC DIMM:

After that, a couple of days ago, we saw two reboots overnight, during a backup. We checked if there was anything on the syslog before the reboot, and we only found those ^@ strange characters:

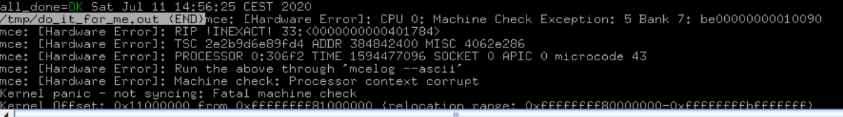

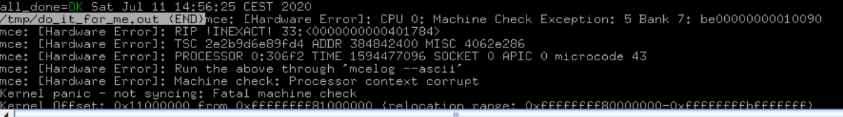

We did some memory tests while recording IPMI screen output, and the first one crashed with this error, followed with a reboot:

After that, we're unable to reproduce the issue (we're continously doing CPU and RAM tests and nothing crashes).

TL;DR - THE QUESTION:

Since our intention already was to replace node1 when the cluster upgrade was done (we don't trust this machine anymore because of other issues we had), is it possible to add a new server with PVE 6.2 to the cluster, even having one node still with PVE 5.4? Then, we could move VMs from node0 to this new node and upgrade node0.

If I connect to a node with PVE 6.2, I can click on Join Information button, so I assume it should work, but I would like to confirm with Proxmox staff if it's possible, before messing up anything.

Thanks in advance for your help!

Carles

We're in the process of upgrading a 4-node cluster to PVE 6, and we would like to know whether it's possible to add a new PVE 6 node to the cluster while one node is still on 5.4. Here is our environment:

ENVIRONMENT:

node0: PVE 5.4 (with Corosync 3) -> with VMs running on local-lvm

node1: PVE 6.2 (already upgraded) -> NO VMs running

node2: PVE 6.2 (already upgraded) -> with VMs running on local-lvm

node3: PVE 6.2 (already upgraded) -> with VMs running on local-lvm

All nodes are working with HW RAID controller and local storage (LVM Thin).

Our upgrade procedure consists of moving all VMs off a node before upgrading it. Our intention was to move the VMs from node0 to node1 and then finish the cluster upgrade by upgrading node0.
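
For illustration, the evacuation step can be sketched as a dry run that only prints the commands. This assumes the VMs are moved with `qm migrate` using `--online` and `--with-local-disks` (the disks live on local-lvm, so there is no shared storage). The helper name and the VM IDs are hypothetical placeholders, not our real ones.

```python
import shlex


def build_migrate_commands(vmids, target_node):
    """Return one `qm migrate` command line per VM (dry run, nothing is executed)."""
    commands = []
    for vmid in vmids:
        argv = [
            "qm", "migrate", str(vmid), target_node,
            "--online",            # keep the VM running during the move
            "--with-local-disks",  # copy the local-lvm disks to the target node
        ]
        commands.append(" ".join(shlex.quote(arg) for arg in argv))
    return commands


if __name__ == "__main__":
    # Hypothetical VM IDs; node1 is the empty, already-upgraded node.
    for line in build_migrate_commands([100, 101], "node1"):
        print(line)
```

Printing the commands first lets us review the plan for a node before actually running anything on it.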

THE PROBLEM:

The problem is that node1 has started to fail. Last week we saw the errors below in syslog (with no other failure on the server). We sent the logs to our provider, and the techs at the datacenter replaced the offending ECC DIMM:

Code:

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: Hardware error from APEI Generic Hardware Error Source: 0

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: It has been corrected by h/w and requires no further action

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: event severity: corrected

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: Error 0, type: corrected

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: fru_text: DIMM ??

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: section_type: memory error

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: error_status: 0x0000000000000400

Jul 05 20:23:41 node1 kernel: {4099}[Hardware Error]: node: 0

Jul 05 20:23:41 node1 kernel: ghes_edac: Internal error: Can't find EDAC structure

After that, a couple of days ago, we saw two reboots overnight, during a backup. We checked whether there was anything in the syslog before the reboot, and we only found these strange ^@ characters:

Code:

Jul 10 23:42:02 node1 systemd[1]: pvesr.service: Succeeded.

Jul 10 23:42:02 node1 systemd[1]: Started Proxmox VE replication runner.

Jul 10 23:43:00 node1 systemd[1]: Starting Proxmox VE replication runner...

Jul 10 23:43:01 node1 systemd[1]: pvesr.service: Succeeded.

Jul 10 23:43:01 node1 systemd[1]: Started Proxmox VE replication runner.

Jul 10 23:44:00 node1 systemd[1]: Starting Proxmox VE replication runner...

Jul 10 23:44:01 node1 pvesr[8417]: trying to acquire cfs lock 'file-replication_cfg' ...

Jul 10 23:44:02 node1 pvesr[8417]: trying to acquire cfs lock 'file-replication_cfg' ...

Jul 10 23:44:03 node1 systemd[1]: pvesr.service: Succeeded.

Jul 10 23:44:03 node1 systemd[1]: Started Proxmox VE replication runner.

Jul 10 23:45:00 node1 systemd[1]: Starting Proxmox VE replication runner...

Jul 10 23:45:01 node1 systemd[1]: pvesr.service: Succeeded.

Jul 10 23:45:01 node1 systemd[1]: Started Proxmox VE replication runner.

Jul 10 23:45:01 node1 CRON[8776]: (root) CMD (command -v debian-sa1 > /dev/null && debian-sa1 1 1)

^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@

^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@

^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@^@

^@^@Jul 10 23:49:51 node1 systemd-modules-load[568]: Inserted module 'vhost_net'

Jul 10 23:49:51 node1 systemd[1]: Starting Flush Journal to Persistent Storage...

Jul 10 23:49:51 node1 systemd[1]: Started Flush Journal to Persistent Storage.

Jul 10 23:49:51 node1 systemd[1]: Started udev Coldplug all Devices.

Jul 10 23:49:51 node1 systemd[1]: Starting Helper to synchronize boot up for ifupdown...

Jul 10 23:49:51 node1 systemd[1]: Starting udev Wait for Complete Device Initialization...

Jul 10 23:49:51 node1 systemd-udevd[641]: Using default interface naming scheme 'v240'.

Jul 10 23:49:51 node1 systemd-udevd[621]: Using default interface naming scheme 'v240'.

Jul 10 23:49:51 node1 systemd-udevd[641]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jul 10 23:49:51 node1 systemd-udevd[621]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jul 10 23:49:51 node1 systemd-udevd[664]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jul 10 23:49:51 node1 systemd[1]: Found device MR9271-4i swap-sda3.

Jul 10 23:49:51 node1 systemd[1]: Activating swap /dev/sda3...

We did some memory tests while recording the IPMI screen output, and the first one crashed with this error, followed by a reboot:

Since then, we have been unable to reproduce the issue (we're continuously running CPU and RAM tests and nothing crashes).
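
As far as we understand, the ^@ characters in the syslog excerpt above are how NUL bytes (0x00) are displayed, and they usually show up when a machine resets before its buffered log writes reach the disk. A minimal sketch for locating such runs and the last line logged before each one follows; the function name and the sample data are ours, for illustration only.

```python
import re

# A NUL byte followed by any mix of further NULs and line breaks counts as one run.
NUL_RUN = re.compile(rb"\x00[\x00\r\n]*")


def find_nul_runs(data):
    """Return (offset, nul_count, last_line_before) for each NUL run in data."""
    runs = []
    for match in NUL_RUN.finditer(data):
        before = data[:match.start()].rstrip(b"\r\n")
        last_line = before.rsplit(b"\n", 1)[-1].decode("utf-8", "replace")
        runs.append((match.start(), match.group().count(b"\x00"), last_line))
    return runs


if __name__ == "__main__":
    # Small made-up sample; a real check would read the log file in binary mode.
    sample = b"last line before crash\n\x00\x00\x00\n\x00\x00first line after boot\n"
    for offset, count, last_line in find_nul_runs(sample):
        print(f"{count} NUL bytes at offset {offset}, after: {last_line}")
```

Run against the real log (opened with mode `"rb"`), this should point at the last entry written before each unclean reboot.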

TL;DR - THE QUESTION:

Since we already intended to replace node1 once the cluster upgrade was done (we don't trust this machine anymore because of other issues we've had with it), is it possible to add a new server with PVE 6.2 to the cluster while one node is still on PVE 5.4? We could then move the VMs from node0 to this new node and upgrade node0.

If I connect to a node with PVE 6.2, I can click the Join Information button, so I assume it should work, but I would like to confirm with Proxmox staff that it's possible before messing anything up.

Thanks in advance for your help!

Carles