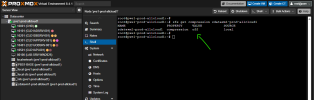

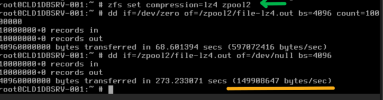

Further to my original post: further testing has shown that Proxmox 8.4 has dreadful performance on the internal SSD (a 1TB consumer-grade drive, as this is a small 3-node cluster at home), even though it worked fine for 6 months. It does not matter whether I format it as ZFS or LVM-Thin: I get 40-60% IO delay, and the server goes from slow to unusable with just one VM on the disk.

However, the internal NVMe drive has no performance issues: when I move the VM onto that storage, all the IO issues go away.
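One way to put numbers on the SSD vs NVMe difference from the host itself, rather than from inside a VM, is to time small synchronous writes on each drive. The Python script below is a minimal sketch and not part of the testing described here. It assumes each drive has a filesystem mounted at a test directory, and the paths `/mnt/ssd-test` and `/mnt/nvme-test` are hypothetical placeholders.

```python
import os
import statistics
import tempfile
import time


def fsync_latency_ms(directory: str, writes: int = 200, block_size: int = 4096) -> dict:
    """Write `writes` blocks of `block_size` bytes to a temporary file in
    `directory`, calling os.fsync after each write, and return latency
    statistics in milliseconds."""
    payload = os.urandom(block_size)
    samples = []
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        for _ in range(writes):
            start = time.perf_counter()
            os.write(fd, payload)
            os.fsync(fd)  # force the write through to the device
            samples.append((time.perf_counter() - start) * 1000.0)
    finally:
        os.close(fd)
        os.remove(path)  # clean up the temporary test file
    samples.sort()
    return {
        "mean_ms": statistics.mean(samples),
        "p99_ms": samples[min(len(samples) - 1, int(len(samples) * 0.99))],
        "max_ms": samples[-1],
    }


if __name__ == "__main__":
    # Hypothetical mount points: point these at a directory on the SATA SSD
    # and one on the NVMe drive before running.
    for label, directory in [("sata-ssd", "/mnt/ssd-test"), ("nvme", "/mnt/nvme-test")]:
        if os.path.isdir(directory):
            print(label, fsync_latency_ms(directory))
        else:
            print(label, "skipped: directory not found")
```

Running it with no VMs active on either drive gives a baseline; a large gap in `p99_ms` or `max_ms` between the two drives would line up with the IO delay seen in the Proxmox summary.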

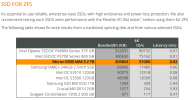

So today I rebuilt one of the nodes with Windows Server 2022 so I could run the Micron/Crucial SSD diagnostics, and they show the drive as totally healthy, with no firmware updates required.

As a further test, I installed Hyper-V and spun up two servers on the same SSD. No performance issues whatsoever.

So I have narrowed this issue down to something specific to Proxmox 8.2 and above running on my OptiPlex (6-core, 32 GB) home lab with the Crucial SSD. Unless anyone has an idea why this is suddenly an issue when the hardware is clearly fine, I guess I'll just have to convert to Hyper-V (which I really don't want to do).