Hi there,

I am relatively new to Proxmox clustering. I am trying to set up a two-node cluster with shared storage (iSCSI from a TrueNAS SCALE system).

This is a version 7.2.3 setup.

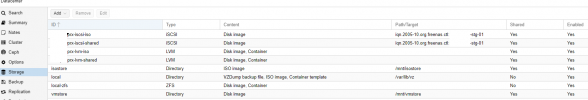

1. I added iSCSI resources (100 MB for quorum and 14 TB for VMs and ISO files) from the TrueNAS to both nodes under Datacenter/Storage.

2. Created LVM storage for both iSCSI resources under Datacenter/Storage.

3. Created a cluster on the first node and joined the second node to it.
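
For readers who prefer the command line, the three steps above correspond roughly to the commands below. This is only a dry-run sketch: it prints the commands instead of running them, and every storage ID, IP address, IQN, volume group name and cluster name is a hypothetical placeholder, not a value from this setup. It also assumes the LVM volume group already exists on the iSCSI LUN.

```shell
#!/bin/sh
# Dry-run sketch of the setup steps. Nothing is executed against Proxmox;
# each command is only printed. All values below are hypothetical placeholders.
PORTAL="192.0.2.10"                            # TrueNAS iSCSI portal (placeholder)
TARGET="iqn.2005-10.org.freenas.ctl:vmstore"   # iSCSI target IQN (placeholder)
CLUSTER="demo-cluster"                         # cluster name (placeholder)
NODE1_IP="192.0.2.21"                          # first node's address (placeholder)

# Print a command prefixed with "+ " instead of running it.
run() { echo "+ $*"; }

# Step 1: register the iSCSI target as a storage (LUNs not used directly).
run pvesm add iscsi truenas-vm --portal "$PORTAL" --target "$TARGET" --content none

# Step 2: register an LVM storage on top of an existing volume group.
run pvesm add lvm vm-lvm --vgname vg_vm --shared 1 --content images,rootdir

# Step 3: create the cluster on the first node ...
run pvecm create "$CLUSTER"
# ... and, on the second node, join it by pointing at the first node.
run pvecm add "$NODE1_IP"

# Check membership and quorum afterwards.
run pvecm status
```

Replacing the body of `run` with `"$@"` would execute the commands for real, which should only be done after substituting actual values.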

What I'd like to find out is:

1. Why don't I have the /etc/pve/cluster.conf file on either node? (There is a corosync.conf in this location.)

2. Can I build this cluster without a third node (Raspberry Pi or otherwise) and still maintain failover in the event of a node failure?

3. How do I set up the iSCSI-based 100 MB disk for quorum?

4. How do I create a directory for VMs and ISO files on the iSCSI-based 14 TB disk?

Any help would be greatly appreciated.

Thank you
