Hi everyone,

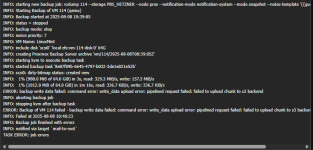

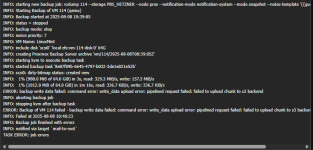

since upgrading to Proxmox Backup Server 4.0, I’ve started using the new S3 target support, which is absolutely great so far. However, I’m running into an issue with larger VMs:

My upload bandwidth is limited to around 10 Mbit/s, and for some larger backups, the upload process fails partway through. From what I understand, PBS uses a local cache for S3, which defaults to 25 GB.

Backups of smaller containers (CTs) to the S3 bucket work fine.

My suspicion is that the cache fills up before the data can be uploaded to the S3 storage, causing the backup to abort.
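A rough back-of-envelope check of this suspicion, as a minimal sketch. `upload_seconds` is plain arithmetic: 10 Mbit/s is 1.25 MB/s, so 25 GB takes about 20,000 s (roughly 5.6 hours) to upload. `seconds_until_full` is a hypothetical model and an assumption, not documented PBS behavior. It treats the cache as a simple buffer that fills at the local write rate and drains at the upload rate. The function names and the 100 MB/s local write rate used below are illustrative only.

```python
from typing import Optional


def upload_seconds(size_gb: float, link_mbit_s: float) -> float:
    """Time to push size_gb (decimal GB, 1 GB = 10**9 bytes) over a link of
    link_mbit_s (1 Mbit = 10**6 bits), ignoring protocol overhead."""
    bits = size_gb * 1e9 * 8
    return bits / (link_mbit_s * 1e6)


def seconds_until_full(cache_gb: float, write_mb_s: float,
                       upload_mbit_s: float) -> Optional[float]:
    """HYPOTHETICAL buffer model (an assumption, not how PBS is documented to
    work): the cache fills at write_mb_s and drains at the upload rate.
    Returns None if the upload keeps up and the cache never fills."""
    drain_mb_s = upload_mbit_s / 8  # convert Mbit/s to MB/s
    net_fill_mb_s = write_mb_s - drain_mb_s
    if net_fill_mb_s <= 0:
        return None
    return cache_gb * 1000 / net_fill_mb_s  # cache size in MB / net fill rate


if __name__ == "__main__":
    print(f"25 GB at 10 Mbit/s: {upload_seconds(25, 10) / 3600:.1f} h")
    t = seconds_until_full(cache_gb=25, write_mb_s=100, upload_mbit_s=10)
    print(f"25 GB cache, 100 MB/s in, 10 Mbit/s out: full after {t:.0f} s")
```

Under that simplified model, any local read rate well above 1.25 MB/s would fill a 25 GB buffer within minutes, which would be consistent with small CTs succeeding and large VMs failing. Whether PBS actually buffers chunks this way is exactly what the questions below ask.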

My questions:

- Is there a way to increase or configure the S3 cache size in PBS?

- Has anyone else experienced similar issues when uploading large backups with limited upstream bandwidth?

Thanks in advance,

Simon