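I'm not sure how to resolve this. My thin pool pve/data fails to activate and reports that manual repair is required, but lvconvert --repair fails because the volume group has insufficient free space. I believe it's a space constraint on my host drive, but both partitions show ample space, so I'm not sure. Any suggestions on how to resolve this?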

System:

Code:

CPU(s) 32 x AMD Ryzen 9 7950X 16-Core Processor (1 Socket)

Kernel Version Linux 6.14.8-2-pve (2025-07-22T10:04Z)

Boot Mode EFI

Manager Version pve-manager/9.0.3/025864202ebb6109

Code:

root@pve:~# vgs

VG #PV #LV #SN Attr VSize VFree

pve 1 15 0 wz--n- <930.51g 920.00m

root@pve:~#

Code:

root@pve:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 9.1T 0 disk

└─sda1 8:1 0 9.1T 0 part

sdb 8:16 0 9.1T 0 disk

└─sdb1 8:17 0 9.1T 0 part

sdc 8:32 0 9.1T 0 disk

└─sdc1 8:33 0 9.1T 0 part

sdd 8:48 0 9.1T 0 disk

└─sdd1 8:49 0 9.1T 0 part

sde 8:64 0 9.1T 0 disk

└─sde1 8:65 0 9.1T 0 part

sdf 8:80 0 10.9T 0 disk

└─sdf1 8:81 0 10.9T 0 part

sdg 8:96 0 10.9T 0 disk

└─sdg1 8:97 0 10.9T 0 part

sdh 8:112 0 9.1T 0 disk

└─sdh1 8:113 0 9.1T 0 part

sdi 8:128 0 10.9T 0 disk

└─sdi1 8:129 0 10.9T 0 part

sdj 8:144 0 9.1T 0 disk

└─sdj1 8:145 0 9.1T 0 part

sdk 8:160 0 10.9T 0 disk

└─sdk1 8:161 0 10.9T 0 part

sdl 8:176 0 9.1T 0 disk

└─sdl1 8:177 0 9.1T 0 part

sdm 8:192 0 9.1T 0 disk

└─sdm1 8:193 0 9.1T 0 part

sdn 8:208 0 9.1T 0 disk

└─sdn1 8:209 0 9.1T 0 part

sdo 8:224 0 1.8T 0 disk

└─sdo1 8:225 0 1.8T 0 part

sdp 8:240 0 1.8T 0 disk

└─sdp1 8:241 0 1.8T 0 part

sdq 65:0 0 1.8T 0 disk

└─sdq1 65:1 0 1.8T 0 part

sdr 65:16 0 1.8T 0 disk

└─sdr1 65:17 0 1.8T 0 part

sds 65:32 0 223.6G 0 disk

├─sds1 65:33 0 223.6G 0 part

└─sds9 65:41 0 8M 0 part

sdt 65:48 0 223.6G 0 disk

├─sdt1 65:49 0 223.6G 0 part

└─sdt9 65:57 0 8M 0 part

nvme0n1 259:0 0 931.5G 0 disk

├─nvme0n1p1 259:1 0 1007K 0 part

├─nvme0n1p2 259:2 0 1G 0 part /boot/efi

└─nvme0n1p3 259:3 0 930.5G 0 part

  ├─pve-swap 252:0 0 8G 0 lvm [SWAP]

  ├─pve-root 252:1 0 103G 0 lvm /

  ├─pve-data_meta0 252:2 0 8.1G 1 lvm

  └─pve-data_meta1 252:3 0 8.1G 1 lvm

root@pve:~#

Code:

root@pve:~# vgdisplay

--- Volume group ---

VG Name pve

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 80

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 15

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size <930.51 GiB

PE Size 4.00 MiB

Total PE 238210

Alloc PE / Size 237980 / <929.61 GiB

Free PE / Size 230 / 920.00 MiB

VG UUID 1zhiwe-HoHT-fazi-Cfll-Cs8L-Gspc-yxgz6B

root@pve:~#

Code:

root@pve:~# du -Shx / | sort -rh | head -15

9.1G /root

793M /usr/bin

671M /var/lib/vz/template/iso

533M /var/cache/apt/archives

441M /usr/lib/x86_64-linux-gnu

416M /boot

311M /usr/share/kvm

309M /var/log/journal/b0590098690d4430a931d19c1d22d668

220M /var/lib/unifi/db/diagnostic.data

201M /var/lib/unifi/db/journal

174M /usr/lib

139M /usr/lib/jvm/java-21-openjdk-amd64/lib

100M /usr/lib/firmware/amdgpu

91M /var/cache/apt

88M /var/lib/apt/lists

root@pve:~#

Code:

root@pve:~# journalctl -xb

Aug 06 17:33:29 pve kernel: Linux version 6.14.8-2-pve (build@proxmox) (gcc (Debian 14.2.0-19) 14.2.0, GNU ld (GNU Binutils for Debian) 2.44) #1 SMP PREEMPT_DYNAMIC PMX 6>

Aug 06 17:33:29 pve kernel: Command line: BOOT_IMAGE=/boot/vmlinuz-6.14.8-2-pve root=/dev/mapper/pve-root ro quiet

Aug 06 17:33:29 pve kernel: KERNEL supported cpus:

Aug 06 17:33:29 pve kernel: Intel GenuineIntel

Aug 06 17:33:29 pve kernel: AMD AuthenticAMD

Aug 06 17:33:29 pve kernel: Hygon HygonGenuine

Aug 06 17:33:29 pve kernel: Centaur CentaurHauls

Aug 06 17:33:29 pve kernel: zhaoxin Shanghai

Aug 06 17:33:29 pve kernel: BIOS-provided physical RAM map:

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x0000000000000000-0x000000000009ffff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x00000000000a0000-0x00000000000fffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x0000000000100000-0x0000000009afefff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x0000000009aff000-0x0000000009ffffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000000a000000-0x000000000a1fffff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000000a200000-0x000000000a211fff] ACPI NVS

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000000a212000-0x000000000affffff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000000b000000-0x000000000b020fff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000000b021000-0x000000008857efff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000008857f000-0x000000008e57efff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000008e57f000-0x000000008e67efff] ACPI data

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000008e67f000-0x000000009067efff] ACPI NVS

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000009067f000-0x00000000987fefff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x00000000987ff000-0x0000000099ff8fff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x0000000099ff9000-0x0000000099ffbfff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x0000000099ffc000-0x0000000099ffffff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000009a000000-0x000000009bffffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000009d7f3000-0x000000009fffffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x00000000e0000000-0x00000000efffffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x00000000fd000000-0x00000000ffffffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x0000000100000000-0x000000203de7ffff] usable

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000203eec0000-0x00000020801fffff] reserved

Aug 06 17:33:29 pve kernel: BIOS-e820: [mem 0x000000fd00000000-0x000000ffffffffff] reserved

Aug 06 17:33:29 pve kernel: NX (Execute Disable) protection: active

Aug 06 17:33:29 pve kernel: APIC: Static calls initialized

Aug 06 17:33:29 pve kernel: e820: update [mem 0x81c32018-0x81c88c57] usable ==> usable

Aug 06 17:33:29 pve kernel: e820: update [mem 0x81bdb018-0x81c31c57] usable ==> usable

Aug 06 17:33:29 pve kernel: e820: update [mem 0x81d47018-0x81d51e57] usable ==> usable

Aug 06 17:33:29 pve kernel: extended physical RAM map:

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x0000000000000000-0x000000000009ffff] usable

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x00000000000a0000-0x00000000000fffff] reserved

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x0000000000100000-0x0000000009afefff] usable

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x0000000009aff000-0x0000000009ffffff] reserved

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x000000000a000000-0x000000000a1fffff] usable

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x000000000a200000-0x000000000a211fff] ACPI NVS

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x000000000a212000-0x000000000affffff] usable

Aug 06 17:33:29 pve kernel: reserve setup_data: [mem 0x000000000b000000-0x000000000b020fff] reserved

...skipping...

░░ Subject: User manager start-up is now complete

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ The user manager instance for user 0 has been started. All services queued

░░ for starting have been started. Note that other services might still be starting

░░ up or be started at any later time.

░░

░░ Startup of the manager took 255140 microseconds.

Aug 06 18:20:15 pve systemd[1]: Started user@0.service - User Manager for UID 0.

░░ Subject: A start job for unit user@0.service has finished successfully

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ A start job for unit user@0.service has finished successfully.

░░

░░ The job identifier is 425.

Aug 06 18:20:15 pve systemd[1]: Started session-2.scope - Session 2 of User root.

░░ Subject: A start job for unit session-2.scope has finished successfully

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ A start job for unit session-2.scope has finished successfully.

░░

░░ The job identifier is 549.

Aug 06 18:20:15 pve login[21742]: ROOT LOGIN ON pts/0

Aug 06 18:20:15 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:20:25 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:20:35 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:20:44 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:20:55 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:21:05 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:21:15 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:21:24 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:21:35 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:21:45 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:21:55 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:22:04 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:22:15 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:22:25 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:22:35 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:22:44 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:22:55 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:23:05 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:23:15 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Aug 06 18:23:24 pve pvestatd[3087]: activating LV 'pve/data' failed: Check of pool pve/data failed (status:64). Manual repair required!

Code:

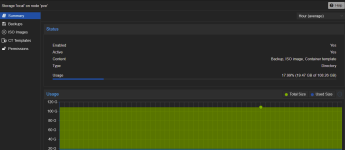

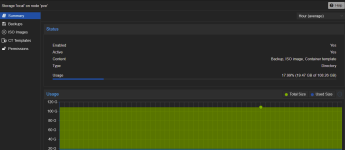

root@pve:~# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content iso,vztmpl,backup

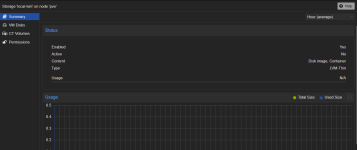

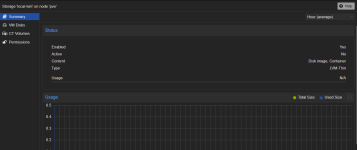

lvmthin: local-lvm

thinpool data

vgname pve

content images,rootdir

root@pve:~#

Code:

root@pve:~# pvs

PV VG Fmt Attr PSize PFree

/dev/nvme0n1p3 pve lvm2 a-- <930.51g 920.00m

root@pve:~#

Code:

root@pve:~# lvconvert --repair pve/data

Volume group "pve" has insufficient free space (230 extents): 2074 required.

root@pve:~#
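
To put numbers on that error, here is a rough sketch of the arithmetic. It only uses figures already shown above (the 4 MiB PE size and 230 free extents from vgdisplay, and the 2074 extents that lvconvert asks for); the variable names are just for illustration.

```shell
#!/bin/sh
# Figures copied from the vgdisplay and lvconvert output in this post.
pe_size_mib=4        # "PE Size 4.00 MiB"
free_extents=230     # "Free PE / Size 230 / 920.00 MiB"
needed_extents=2074  # "insufficient free space (230 extents): 2074 required"

# Convert extent counts to MiB and work out the shortfall.
free_mib=$((free_extents * pe_size_mib))
needed_mib=$((needed_extents * pe_size_mib))
short_mib=$((needed_mib - free_mib))

echo "free:     ${free_mib} MiB"
echo "required: ${needed_mib} MiB"
echo "short by: ${short_mib} MiB"
```

So the repair wants 8296 MiB (about 8.1 GiB) free in the VG and I only have 920 MiB. That 8.1 GiB looks like it matches the size of pve-data_meta0 and pve-data_meta1 in the lsblk output, so my guess is that the repair needs room for another metadata copy of that size, but that is an assumption from the numbers, not something I have confirmed.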