Hello all!

I have two PBS servers, A and B; A is configured to pull data from B.

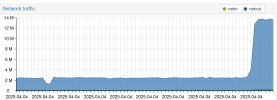

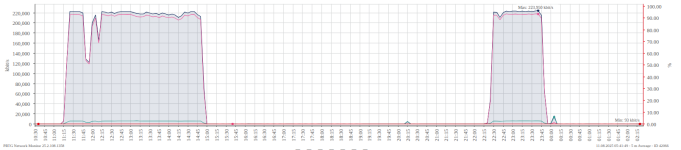

Both servers are connected via an IPsec VPN tunnel. Ping latency is around 10 ms and the bandwidth available for pulling is 100 Mbit/s.
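To keep the link full, enough data has to be in flight to cover one full round trip (the bandwidth-delay product). A small Python helper, assuming the 10 ms ping time is the round-trip time:

```python
def bandwidth_delay_product(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes that must be unacknowledged/in flight to keep the link saturated."""
    return bandwidth_bps * rtt_s / 8  # bits -> bytes


if __name__ == "__main__":
    # 100 Mbit/s link, 10 ms round-trip time (figures from this post)
    bdp = bandwidth_delay_product(100e6, 0.010)
    print(f"{bdp / 1000:.0f} kB must be in flight to fill the link")
```

So the transfer needs roughly 125 kB outstanding at all times; anything that waits for each reply before sending the next request will struggle to reach that.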

Previously, when I used Ve**am to copy backups, the link was always used at close to maximum bandwidth.

Now, with the PBS sync job, only around 20 Mbit/s is used for data transfer.

There is no BW limit configured.

I know that pulling chunks one by one is somewhat slow and that latency may play a role, but does it really need to be this slow?
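To get a feel for how much a strictly one-chunk-at-a-time pull can lose to latency, here is a back-of-the-envelope stop-and-wait model in Python. The chunk sizes and the number of round trips per chunk are assumptions for illustration, not measured PBS values:

```python
def sequential_throughput_mbps(chunk_bytes: int, link_mbps: float,
                               rtt_s: float, rtts_per_chunk: float = 1.0) -> float:
    """Effective Mbit/s when each chunk is requested only after the previous one arrived.

    Per chunk we pay `rtts_per_chunk` round trips of waiting plus the time to
    push the chunk through the link. TCP slow start, server-side disk reads and
    chunk verification are ignored, so real numbers are likely lower.
    """
    transfer_s = chunk_bytes * 8 / (link_mbps * 1e6)
    per_chunk_s = transfer_s + rtts_per_chunk * rtt_s
    return chunk_bytes * 8 / per_chunk_s / 1e6


if __name__ == "__main__":
    # Hypothetical on-the-wire chunk sizes; compressed chunks can be much smaller.
    for size_kib in (64, 512, 4096):
        for rtts in (1, 3):
            mbps = sequential_throughput_mbps(size_kib * 1024, 100, 0.010, rtts)
            print(f"{size_kib:5d} KiB, {rtts} RTT/chunk: {mbps:5.1f} Mbit/s")
```

Under these assumptions, large chunks stay close to line rate, while small chunks that each pay several round trips drop toward the ~20 Mbit/s range, which is at least consistent with per-request overhead rather than raw bandwidth being the limit.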

What can be done to improve performance? I'd like to use my link at full capacity.

Thoughts:

Multi-stream TCP or running multiple jobs in parallel could certainly help, but it might be hard to implement.

(I found that comment in an older forum post.)
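Keeping several chunk requests in flight at once is what multi-stream transfers or parallel jobs would achieve. A minimal asyncio sketch of bounded concurrency; `fetch_chunk` is a hypothetical stand-in that just sleeps for one RTT instead of talking to any real PBS API:

```python
import asyncio
import time


async def fetch_chunk(digest: str, rtt_s: float) -> bytes:
    """Hypothetical stand-in for one chunk download: waits one RTT, returns dummy data."""
    await asyncio.sleep(rtt_s)
    return digest.encode()


async def pull_chunks(digests: list[str], rtt_s: float, max_in_flight: int) -> list[bytes]:
    """Fetch all chunks with at most `max_in_flight` requests outstanding at a time."""
    sem = asyncio.Semaphore(max_in_flight)

    async def bounded(digest: str) -> bytes:
        async with sem:  # blocks while max_in_flight requests are already running
            return await fetch_chunk(digest, rtt_s)

    # gather() keeps results in the same order as the input digests
    return await asyncio.gather(*(bounded(d) for d in digests))


def timed_pull(n_chunks: int, rtt_s: float, max_in_flight: int) -> tuple[list[bytes], float]:
    digests = [f"chunk-{i}" for i in range(n_chunks)]
    start = time.perf_counter()
    results = asyncio.run(pull_chunks(digests, rtt_s, max_in_flight))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    for workers in (1, 4, 16):
        _, elapsed = timed_pull(64, 0.010, workers)
        print(f"{workers:2d} in flight: {elapsed * 1000:.0f} ms for 64 chunks")
```

With one request in flight, 64 chunks cost roughly 64 RTTs; with 16 in flight, roughly 4. The semaphore caps concurrency so the source server is not flooded with requests.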

What is PBS actually using in the background to fetch data?

It looks like some kind of HTTP/HTTPS requests to a custom port/API.

Is PBS making a new connection on each synchronized chunk?

If so, could multiple requests be sent over the same TCP connection, e.g. with HTTP keep-alive or even HTTP/2?

Establishing a new TCP connection for each chunk might add a lot of time (the TCP 3-way handshake plus TLS setup takes several round trips, roughly 30 ms at 10 ms latency).
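If every chunk really did use a fresh connection, the setup cost adds up quickly. A rough Python model, assuming a full TCP handshake (1 RTT before the request can be sent) plus a full TLS handshake (2 RTTs for TLS 1.2, 1 for TLS 1.3) with no session resumption; whether PBS actually behaves this way is exactly the open question:

```python
# Round trips needed before the first request byte can be sent
# (full handshakes, no TCP Fast Open, no TLS session resumption / 0-RTT).
SETUP_RTTS = {"tcp": 1, "tls1.2": 2, "tls1.3": 1}


def setup_overhead_s(n_requests: int, rtt_s: float, tls: str = "tls1.2",
                     reuse_connection: bool = False) -> float:
    """Total seconds spent on connection setup for `n_requests` chunk fetches."""
    per_connection_s = (SETUP_RTTS["tcp"] + SETUP_RTTS[tls]) * rtt_s
    # With keep-alive or HTTP/2 the setup is paid once; otherwise once per request.
    connections = 1 if reuse_connection else n_requests
    return connections * per_connection_s


if __name__ == "__main__":
    n = 10_000  # hypothetical number of chunks in one sync run
    for reuse in (False, True):
        total = setup_overhead_s(n, 0.010, "tls1.2", reuse)
        print(f"reuse={reuse}: {total:.2f} s of pure handshake time")
```

At 10 ms RTT this reproduces the ~30 ms per connection mentioned above, which for 10,000 chunks would be about 300 s of handshakes, versus a single 30 ms setup when one connection is reused.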

Be happy and stay safe!

Cheers,

Bernhard
