I have a two-node cluster with a QDevice. I have an HA group set up (mainly to set the priority node) and am using replication to keep data in sync on both nodes.

I yanked the plug on the first node and waited a few minutes. Everything failed over great; I was very impressed. It took a little longer than I expected for it to notice, but it did it all like a champ.

Then I plugged node 1 back in and watched it fail back. It did this as well, for all but one VM.

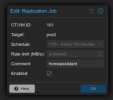

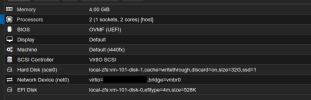

This VM failed (error below) and kept retrying the migration over and over without success. I tried to stop it, tried to shut it down, and tried to do my own migration while it was shut down, but it ignored all of that and just kept trying to migrate. Eventually I rebooted that node, and all was well again.

Below are the final messages in the migration log for this VM.

Code:

drive-efidisk0: Completing block job...

drive-efidisk0: Completed successfully.

drive-scsi0: Completing block job...

drive-scsi0: Completed successfully.

drive-efidisk0: Cancelling block job

drive-scsi0: Cancelling block job

drive-efidisk0: Done.

WARN: drive-scsi0: Input/output error (io-status: ok)

drive-scsi0: Done.

2024-07-21 09:31:29 ERROR: online migrate failure - Failed to complete storage migration: block job (mirror) error: drive-efidisk0: Input/output error (io-status: ok)

2024-07-21 09:31:29 aborting phase 2 - cleanup resources

2024-07-21 09:31:29 migrate_cancel

2024-07-21 09:31:29 scsi0: removing block-dirty-bitmap 'repl_scsi0'

2024-07-21 09:31:29 efidisk0: removing block-dirty-bitmap 'repl_efidisk0'

2024-07-21 09:31:31 ERROR: migration finished with problems (duration 00:00:19)

TASK ERROR: migration problems