Hey all, I've been trying to pass the iGPU for this CPU to a VM (not CT) specifically for Jellyfin hardware encoding. I've tried every permutation of options I've stumbled across through the depths of page 10 search results and come up with nothing, so this is my plea for help.

The issue:

I recently acquired an Intel NUC 12 Enthusiast NUC12SNKi72 and after a doozy of a time getting Proxmox installed I'm finally ready to start moving some VMs from my older server, starting with my media stack.

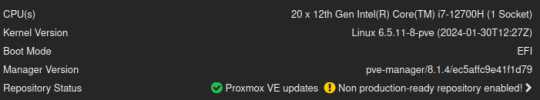

The system:

- i7-12700H

- M45201-501 motherboard

- 64GB DDR4

- PVE 8.1.4

- Kernels 6.5.13-1-pve and 6.5.11-8-pve

- systemd-boot (ZFS)

It may actually be easier to list the things I haven't tried, but I'll stick with the closest to success I've gotten so far. Currently I have a Fedora 39 VM that's set up with the iGPU added as a PCI device with All Functions, ROM-Bar, and PCI-Express turned on. Inside the VM I can see `/dev/dri/card0` but not `/dev/dri/renderD128`. If the VM isn't running I see both of them on the Proxmox host (along with `card1` and `renderD129`). I'd prefer to get this running under Debian, but even on an updated bookworm install with the same PCI device added, `/dev/dri` doesn't exist. I've tried installing the non-free driver in Debian to no avail, and on Fedora I've tried RPM Fusion, but that didn't change anything notable.
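For anyone who wants to compare against their own setup, here's a rough sketch of how the device nodes can be checked from a shell, either inside the guest or on the host. `check_dri` is just a helper name made up for this post (it isn't part of any package), and it assumes the usual DRM layout where nodes are named `cardN` and `renderDN` under `/dev/dri`.

```shell
#!/bin/sh
# check_dri: hypothetical helper that reports which DRM nodes exist.
# Usage: check_dri [directory]   (directory defaults to /dev/dri)
# Returns 0 only if at least one render node (renderD*) is present.
check_dri() {
    dir="${1:-/dev/dri}"

    # No directory at all usually means no DRM driver bound to any GPU.
    if [ ! -d "$dir" ]; then
        echo "missing: $dir"
        return 1
    fi

    cards=0
    renders=0
    for node in "$dir"/card* "$dir"/renderD*; do
        # An unmatched glob stays literal, so skip anything that does not exist.
        [ -e "$node" ] || continue
        name="${node##*/}"
        case "$name" in
            card*)    cards=$((cards + 1)) ;;
            renderD*) renders=$((renders + 1)) ;;
        esac
        echo "found: $name"
    done

    echo "cards=$cards renders=$renders"
    [ "$renders" -gt 0 ]
}
```

Running `check_dri` with no argument looks at `/dev/dri`. In my Fedora guest I'd expect it to find `card0` but no render node, and in the Debian guest to report the directory as missing.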

Like I said, I've gone pretty deep in search results and haven't found anything that's worked to get this fully set up in Debian or Fedora. I'm not really interested in the LXC route at this time either, which is the vast majority of what came up.

The only blacklisted modules I have are:

Bash:

:~# grep -ir blacklist /etc/modprobe.d/

/etc/modprobe.d/pve-blacklist.conf:blacklist nvidiafb

/etc/modprobe.d/intel-microcode-blacklist.conf:blacklist microcode

At this point I'm hoping I missed something silly and someone can straighten me out pretty quick. Any and all help would be super appreciated!