Hello,

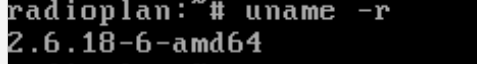

now i have to say that the vm is a very old one ;-)

a debian 4 with LSI53C895A controller and intel e1000 network card. virtio support was not available in the kernel at that time?

i don't need a solution for this!

i took the opportunity to replace the vm with one with a new operating system (and some pita things with the applications).

but i would like to know if this can be related to the last update(s).

regards

stefan

I am not sure if I am correct in my assumptions or if something has broken.

these are the latest updates i have installed

Code:

Start-Date: 2023-02-28 05:19:42

Commandline: apt-get -y dist-upgrade

Install: libslirp0:amd64 (4.4.0-1+deb11u2, automatic)

Upgrade: libcurl4:amd64 (7.74.0-1.3+deb11u5, 7.74.0-1.3+deb11u7), libcurl3-gnutls:amd64 (7.74.0-1.3+deb11u5, 7.74.0-1.3+deb11u7), pve-qemu-kvm:amd64 (7.1.0-4, 7.2.0-5), curl:amd64 (7.74.0-1.3+deb11u5, 7.74.0-1.3+deb11u7)

End-Date: 2023-02-28 05:19:48

Start-Date: 2023-03-07 07:14:52

Commandline: apt-get -y dist-upgrade

Upgrade: libproxmox-acme-perl:amd64 (1.4.3, 1.4.4), swtpm-libs:amd64 (0.8.0~bpo11+2, 0.8.0~bpo11+3), swtpm-tools:amd64 (0.8.0~bpo11+2, 0.8.0~bpo11+3), swtpm:amd64 (0.8.0~bpo11+2, 0.8.0~bpo11+3), lxc-pve:amd64 (5.0.2-1, 5.0.2-2), novnc-pve:amd64 (1.3.0-3, 1.4.0-1), qemu-server:amd64 (7.3-3, 7.3-4), libproxmox-acme-plugins:amd64 (1.4.3, 1.4.4), pve-i18n:amd64 (2.8-2, 2.8-3), pve-kernel-helper:amd64 (7.3-4, 7.3-5)

End-Date: 2023-03-07 07:15:52this morning i booted all pve hosts in the cluster (HA and live migrations). after that one vm stopped responding. the screen showed the following:

a reboot or restore from backup showed the same result.

I should mention that the VM is a very old one ;-)

It is a Debian 4 system with an LSI53C895A SCSI controller and an Intel e1000 network card. (If I remember correctly, virtio support was not yet available in the kernel at that time.)

I don't need a solution for this!

I took the opportunity to replace the VM with a new one running a current operating system (which meant some PITA work with the applications).

But I would like to know whether this could be related to the latest update(s).
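To narrow down which of the updates in the log might matter, a small helper can split an apt history `Upgrade:` line into package/old-version/new-version triples. This is a minimal sketch, assuming the `name:arch (old, new)` entry format seen in the log; `parse_upgrades`, `UPGRADE_LINE` and `ENTRY_RE` are hypothetical names, not part of apt or Proxmox. Applied to the 2023-02-28 entry, it surfaces `pve-qemu-kvm` (the QEMU package, which provides the emulated LSI53C895A and e1000 devices) going from 7.1.0-4 to 7.2.0-5, which makes it a natural first suspect, though that alone does not prove a connection.

```python
import re
from typing import List, Tuple

# The 2023-02-28 "Upgrade:" line, copied verbatim from the apt log in the post.
UPGRADE_LINE = (
    "Upgrade: libcurl4:amd64 (7.74.0-1.3+deb11u5, 7.74.0-1.3+deb11u7), "
    "libcurl3-gnutls:amd64 (7.74.0-1.3+deb11u5, 7.74.0-1.3+deb11u7), "
    "pve-qemu-kvm:amd64 (7.1.0-4, 7.2.0-5), "
    "curl:amd64 (7.74.0-1.3+deb11u5, 7.74.0-1.3+deb11u7)"
)

# Assumed entry format: "name:arch (old-version, new-version)".
ENTRY_RE = re.compile(r"([^\s,:]+):(\w+) \(([^,]+), ([^)]+)\)")


def parse_upgrades(line: str) -> List[Tuple[str, str, str]]:
    """Split an apt history 'Upgrade:' line into (package, old, new) triples."""
    if not line.startswith("Upgrade:"):
        raise ValueError("expected a line starting with 'Upgrade:'")
    # findall yields (name, arch, old, new) per entry; the architecture is dropped.
    return [(name, old, new) for name, _arch, old, new in ENTRY_RE.findall(line)]


if __name__ == "__main__":
    # Print one "package: old -> new" line per upgraded package.
    for name, old, new in parse_upgrades(UPGRADE_LINE):
        print(f"{name}: {old} -> {new}")
```

Running it prints one line per package, for example `pve-qemu-kvm: 7.1.0-4 -> 7.2.0-5`.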

Regards,

Stefan
