Hi,

I've recently added a couple of new nodes to my system and now want to move the Ceph monitors to the new nodes, but am struggling.

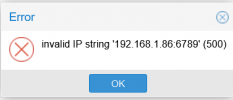

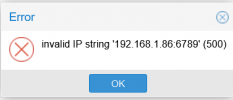

When I go to Ceph > Monitor in the Proxmox GUI, click Create and select the new node, it almost instantly gives me the error message "Invalid IP string", followed by the IP of one of the currently active monitors (not always the same one).

I've tried creating the Ceph monitor on other nodes as well (both new and old), but I always get the same problem. The IP that shows up varies, but it is always the IP of a current Ceph monitor node. I even removed a current monitor and tried to recreate it a few minutes later, but the same problem occurred (I was running 3 monitors and am now down to 2, so that test may not have been my smartest decision ever...).

After a good search online, I found this thread https://www.mail-archive.com/pve-devel@lists.proxmox.com/msg03848.html that mentions the exact same error message, but I'm not quite sure how it relates to my problem.
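In case it helps whoever answers: my guess (and it is only an assumption) is that the error comes from how the existing monitor addresses are parsed, i.e. the `mon_host` line in `/etc/pve/ceph.conf`. A quick sketch like the one below lists any `mon_host` entries that are not bare IPv4/IPv6 addresses (for example ones with a `:6789` port or the bracketed `[v2:...,v1:...]` form). It only checks what Python's `ipaddress` module accepts, not what Proxmox itself accepts, and the function name `non_plain_ip_entries` is just something I made up.

```python
import ipaddress
import re
import sys


def non_plain_ip_entries(mon_host: str) -> list[str]:
    """Return the mon_host entries that are not bare IPv4/IPv6 addresses.

    Assumption: entries are separated by whitespace, commas or semicolons.
    Bracketed messenger-v2 forms such as "[v2:10.0.0.1:3300,v1:10.0.0.1:6789]"
    get split on their inner comma, so they are reported piece by piece.
    """
    suspicious = []
    for entry in re.split(r"[\s,;]+", mon_host.strip()):
        if not entry:
            continue  # skip empty pieces from leading/trailing separators
        try:
            ipaddress.ip_address(entry)  # raises ValueError if not a plain IP
        except ValueError:
            suspicious.append(entry)
    return suspicious


if __name__ == "__main__":
    # Pass the value of the mon_host line (copied from /etc/pve/ceph.conf) as arguments.
    for bad in non_plain_ip_entries(" ".join(sys.argv[1:])):
        print("not a plain IP:", bad)
```

For example, `python3 check_mon_host.py "10.0.0.1 10.0.0.2:6789"` would flag only `10.0.0.2:6789`.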

I'm running Proxmox 7.1-10 with the latest patches.

Anyone got any ideas? Thanks in advance.