We have a Windows Server 2025 VM that has hung or crashed a couple of times over the past few months, requiring a reboot of the VM to recover.

This is running on Proxmox VE 9.1.1.

What I've checked so far:

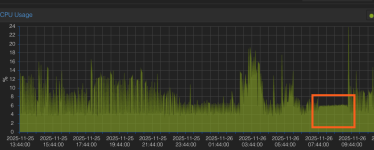

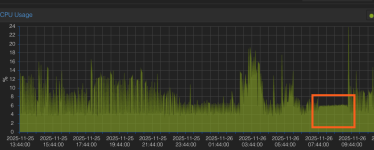

- It seems to happen during a quiet period between 4am and 8am, with no scheduled events or backups occurring within an hour of the issue starting.

- No sign of any issues in Windows event logs, just a "recovered from unexpected shutdown" entry after the manual reboot.

- No log entries in journalctl near the time of the issue.

- No hardware or storage errors in Proxmox or iDRAC.

- The node hosting the VM is only using around 60% of its total memory.

- The VM is using local storage, and other VMs on the same storage are not having any issues.
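For anyone who wants to repeat the journal check on the host, here is a minimal sketch that builds a `journalctl` command covering the freeze window. The date is a placeholder, and the helper name `journal_window_cmd`, the one-hour padding, and the `warning` priority cutoff are assumptions made for illustration, not the exact command that was originally run.

```python
import shlex
from datetime import datetime, timedelta


def journal_window_cmd(start, end, pad_minutes=60, priority="warning"):
    """Build a journalctl command covering the freeze window plus padding.

    Hypothetical helper: the padding and priority defaults are assumptions.
    """
    pad = timedelta(minutes=pad_minutes)
    fmt = "%Y-%m-%d %H:%M:%S"  # timestamp format accepted by --since/--until
    return [
        "journalctl",
        "--no-pager",
        "--since", (start - pad).strftime(fmt),
        "--until", (end + pad).strftime(fmt),
        "--priority", priority,  # this level and anything more severe
    ]


# Placeholder date; only the 4am-8am window comes from the observations above.
cmd = journal_window_cmd(datetime(2025, 1, 1, 4, 0), datetime(2025, 1, 1, 8, 0))
print(shlex.join(cmd))  # copy the printed line into a shell on the Proxmox node
```

Padding the window on both sides is meant to catch anything logged shortly before the freeze began or just after the reboot.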

I did notice that the CPU graph showed an unusually flat level of CPU usage for the whole time the VM was frozen or crashed.

Any pointers on where I should look to find what could be causing this would be appreciated.