Hello,

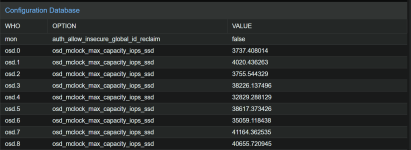

I’m running **Proxmox VE 8.4** with Ceph (Squid / Reef) on 3 identical nodes. All OSDs are SATA SSDs using BlueStore and **direct HBA passthrough (no RAID)**. Yet the **`osd_mclock_max_capacity_iops_ssd`** values differ between OSDs: some are locked at **~3.7k IOPS**, others at **~41k IOPS**.

This is causing **severe Apply/Commit latency spikes (37–70 ms)** on the low-IOPS OSDs, even under light load.

Is there any way to fix the latency and the `osd_mclock_max_capacity_iops_ssd` discrepancy? Thank you.

Parker
