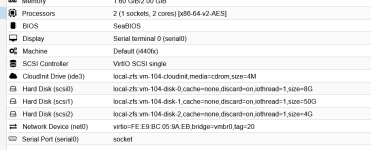

I use Terraform to provision my VMs, and each one is created with a cloud-init drive (cloudinit.png). Each VM is also replicated to other nodes for HA.

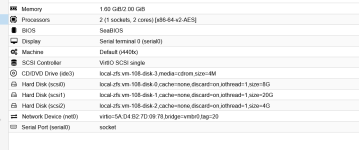

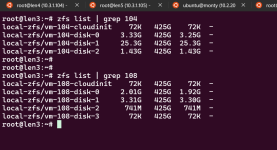

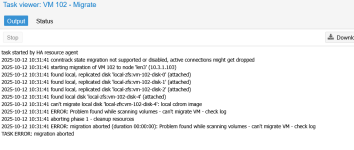

At some point during the VM's life, the cloud-init drives appear to turn into CD-ROM drives in the UI (cloudinit-cdrom.png), and a new disk is created in ZFS (cloudinit-zfs.png). Replication still works, but when I try to migrate the VM to another node, the migration fails because of the CD-ROM drive (cloudinit-error.png).

So I have to go to each VM, delete the CD-ROM drive, and recreate the cloud-init drive. Sometimes the old cloud-init disk has to be deleted from ZFS first.
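The manual workaround described above could be scripted roughly like this with the Proxmox `qm` CLI. This is only a sketch under stated assumptions, not a fix for the root cause. It assumes the cloud-init drive sits on slot `ide2`, the ZFS-backed storage is named `local-zfs`, and its volumes live under `rpool/data`. All three are hypothetical defaults, so adjust them to your setup. By default the function only prints the commands it would run. Set `APPLY=1` to actually execute them.

```shell
#!/usr/bin/env sh
# Sketch of the per-VM cloud-init drive workaround.
# ASSUMPTIONS (hypothetical defaults, override via environment):
#   SLOT    - slot the cloud-init drive is attached to (ide2)
#   STORAGE - Proxmox storage ID backed by ZFS (local-zfs)
#   POOL    - ZFS dataset holding the VM volumes (rpool/data)
set -eu

STORAGE="${STORAGE:-local-zfs}"
SLOT="${SLOT:-ide2}"
POOL="${POOL:-rpool/data}"

# Print the command (dry run) unless APPLY=1, in which case execute it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

fix_cloudinit() {
  for vmid in "$@"; do
    # Detach the drive that now shows up as a plain CD-ROM.
    run qm set "$vmid" --delete "$SLOT"
    # Remove a leftover cloud-init volume from ZFS, but only if it exists.
    vol="$POOL/vm-$vmid-cloudinit"
    if [ "${APPLY:-0}" = "1" ]; then
      if zfs list "$vol" >/dev/null 2>&1; then
        zfs destroy "$vol"
      fi
    else
      echo "zfs destroy $vol  # only if it exists"
    fi
    # Recreate the cloud-init drive on the same slot.
    run qm set "$vmid" "--$SLOT" "$STORAGE:cloudinit"
  done
}

# Example: fix_cloudinit 101 102   (dry run)
#          APPLY=1 fix_cloudinit 101
```

Running it as a dry run first lets you check the slot and storage names against each VM's hardware tab before anything is deleted.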

This happens to all of my provisioned VMs.

What is causing this, and how can I fix it?
