Yeah, so Corosync doesn't need a dedicated network, but that depends on your switches and NICs. If they can guarantee consistently low latency even when the bond is saturated, then yes, you don't need to separate it.

I would still stress test this, though. I have seen switches that don't quite manage it, and even NICs that run into congestion issues when they should have bandwidth to spare. That's why the common advice is to use a separate NIC for Corosync. Not that it's always needed, but it's the simplest advice and will work no matter what.
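A minimal Python sketch of the evaluation side of such a stress test, assuming you saturate the bond (e.g. with a large transfer) while running `ping` from one node to another over the Corosync link and saving the output. It pulls the RTTs out of iputils-style ping lines and looks at the tail rather than the average, because short latency spikes are what hurt Corosync. The 5 ms threshold, the sample addresses, and the function names are assumptions for illustration; check the Proxmox cluster documentation for the actual latency recommendation.

```python
import math
import re

# Matches the RTT field of iputils ping lines, e.g. "... time=0.412 ms"
RTT_PATTERN = re.compile(r"time=([\d.]+) ms")

def parse_ping_rtts(lines):
    """Extract RTTs in milliseconds; lines without an RTT are skipped."""
    rtts = []
    for line in lines:
        match = RTT_PATTERN.search(line)
        if match:
            rtts.append(float(match.group(1)))
    return rtts

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(rtts_ms):
    """Median, 99th percentile and worst-case RTT."""
    return {"p50": percentile(rtts_ms, 50), "p99": percentile(rtts_ms, 99), "max": max(rtts_ms)}

def corosync_link_ok(rtts_ms, lost, threshold_ms=5.0):
    """Hypothetical pass/fail check: no lost packets and worst-case RTT below threshold_ms.

    threshold_ms=5.0 is an assumed limit, not a value read from Corosync.
    """
    if not rtts_ms or lost > 0:
        return False
    return max(rtts_ms) < threshold_ms

# Hypothetical ping output captured while the bond was saturated
sample = [
    "64 bytes from 10.10.10.2: icmp_seq=1 ttl=64 time=0.210 ms",
    "64 bytes from 10.10.10.2: icmp_seq=2 ttl=64 time=0.190 ms",
    "64 bytes from 10.10.10.2: icmp_seq=3 ttl=64 time=7.800 ms",
]
rtts = parse_ping_rtts(sample)
print(summarize(rtts))                 # {'p50': 0.21, 'p99': 7.8, 'max': 7.8}
print(corosync_link_ok(rtts, lost=0))  # False: one 7.8 ms spike
```

The average here looks harmless; it is the single spike under load that fails the check, which is exactly what a stress test should surface.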

As for backups, as I said, it depends on how saturated your links are and how critical that network is.

Best practice on an unlimited budget, of course, is a separate transport network for migrations, your Ceph storage, and backups (both writes and restores).

This guarantees that your hosts' normal network traffic stays unaffected at all times, no matter what you do.

In practice this is usually overkill. Still, it's nice to have and not have to think about.
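To get a rough feel for "how saturated you are", a back-of-the-envelope check helps: add up what you expect to push over the shared bond at the same time and compare it with the bond's capacity. This Python sketch does that with made-up example numbers. One simplifying assumption is baked in: each traffic type is treated as a single flow, and with LACP a single flow is hashed onto one member link, so it cannot go faster than one link even if the bond as a whole could.

```python
from dataclasses import dataclass

@dataclass
class Traffic:
    name: str
    gbit_per_s: float  # expected sustained rate while everything runs at once

def bond_headroom(traffic, member_links, link_gbit):
    """Rough spare bond capacity in Gbit/s (negative = oversubscribed).

    Each traffic type is treated as one flow pinned to one member link
    (typical LACP hashing), so its rate is capped at link_gbit.
    Hash collisions and bursts are ignored, so reality can be worse.
    """
    capacity = member_links * link_gbit
    demand = sum(min(t.gbit_per_s, link_gbit) for t in traffic)
    return capacity - demand

# Example numbers only: a 2x10G bond carrying everything at the same time
traffic = [
    Traffic("vm", 4.0),
    Traffic("ceph", 9.0),
    Traffic("backup", 6.0),
    Traffic("migration", 12.0),  # capped at 10.0 by the single-link limit
]
print(bond_headroom(traffic, member_links=2, link_gbit=10.0))  # -9.0
```

A negative result is the situation where a separate transport network (or at least a separate Corosync link) starts paying off.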

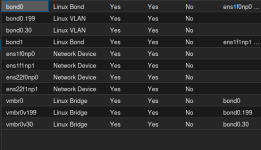

As for SDN, it's a matter of taste and need. If you don't want to use SDN, there is also a better way to use VLANs:

simply make the bridge VLAN-aware and add the interface that carries those VLANs as its bridge port.

Then you define the range of VLAN IDs the bridge should handle.

So in your example, let's say vmbr0 should handle everything: vmbr0 gets bond0 as its bridge port and is VLAN-aware.

There's nothing else to do on each node other than keeping the interface name vmbr0 identical across all nodes.
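For reference, a VLAN-aware bridge like this is defined in `/etc/network/interfaces` (ifupdown2 syntax) on each node. The Python sketch below renders that stanza once and reuses it for every node, which is one way to keep the bridge identical cluster-wide. The node names, the `bond0` port, and the `2-4094` VLAN range are example assumptions, and host IP configuration is left out on purpose (`inet manual`).

```python
def render_vlan_aware_bridge(bridge="vmbr0", port="bond0", vids="2-4094"):
    """Render an ifupdown2 stanza for a VLAN-aware Linux bridge.

    Host IP configuration is deliberately omitted (inet manual);
    add an address to the bridge or a VLAN sub-interface to suit your setup.
    """
    lines = [
        f"auto {bridge}",
        f"iface {bridge} inet manual",
        f"    bridge-ports {port}",
        "    bridge-stp off",
        "    bridge-fd 0",
        "    bridge-vlan-aware yes",
        f"    bridge-vids {vids}",  # VLAN IDs the bridge will forward
    ]
    return "\n".join(lines) + "\n"

# Hypothetical node names; every node gets the exact same bridge definition.
nodes = ["pve1", "pve2", "pve3"]
configs = {node: render_vlan_aware_bridge() for node in nodes}
assert len(set(configs.values())) == 1  # identical on all nodes
print(configs["pve1"])
```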

In each VM you can then set a VLAN ID on each network adapter, and that VLAN is passed to the VM untagged, as its native VLAN.

OR

You specify nothing, in which case bond0's native VLAN is passed to the VM as native, but the tagged VLANs are passed through as well, so the VM can access each VLAN itself, given an OS that can handle that.
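The difference between the two options can be modelled in a few lines. This is a simplified Python sketch of which frames a VM's network adapter receives and whether they arrive tagged; in reality Proxmox configures this on the bridge ports, and the sketch only mimics the behaviour described above. The native VLAN ID 1 and the 2-4094 range are assumptions matching a typical `bridge-vids` setting, and `vm_sees` is a hypothetical helper name.

```python
from typing import Optional

NATIVE_VID = 1                # assumed native (untagged) VLAN on bond0
BRIDGE_VIDS = range(2, 4095)  # assumed bridge-vids 2-4094

def vm_sees(frame_vid: int, nic_tag: Optional[int]) -> Optional[str]:
    """How a frame from VLAN frame_vid reaches a VM NIC, or None if not delivered.

    nic_tag is the VLAN tag set on the VM's adapter (None = no tag set).
    """
    if nic_tag is not None:
        # Tag set: the VM only gets that one VLAN, with the tag stripped.
        return "untagged" if frame_vid == nic_tag else None
    if frame_vid == NATIVE_VID:
        # No tag: the native VLAN arrives untagged...
        return "untagged"
    if frame_vid in BRIDGE_VIDS:
        # ...and every VLAN in the bridge's range arrives still tagged,
        # so the guest OS has to create its own VLAN interfaces to use them.
        return f"tagged {frame_vid}"
    return None

print(vm_sees(20, nic_tag=20))    # untagged
print(vm_sees(30, nic_tag=20))    # None
print(vm_sees(1, nic_tag=None))   # untagged
print(vm_sees(30, nic_tag=None))  # tagged 30
```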

Of course, it's all a matter of taste.

SDN simply has the advantage that several things can be handled at once, right at the network level (like DHCP, IPAM, DNS registration, etc.).

You can then also use the Proxmox firewall to manage inter-VLAN traffic (even though that's pretty much useless in a VLAN-type SDN zone; it's more relevant in SDN setups where Proxmox actually does some routing).

So it mainly depends on your needs. If you're like me, use static assignment anyway, and don't need the other features, it's pretty much potato, potahto.

Overall, SDN is probably cleaner, but a VLAN-aware bridge has, well... less overhead. It depends on your setup and needs.