I originally posted this troubleshooting message as part of this thread: https://forum.proxmox.com/threads/h...n-api-permissions-not-sure-what-to-do.169584/

However, that thread was more about how to set up remote sync at all. After thinking about it, I decided to break this out into a separate troubleshooting thread to make it easier to find for anyone having a similar issue.

I'm getting this notice on a when the task succeeds (TASK OK):

I've got about 300 GB of data on my local PBS that needs to sync to the remote. Clearly, I've misconfigured something. I'm not sure if it's the permissions or not.

I have a Local PBS User on my Local PBS with

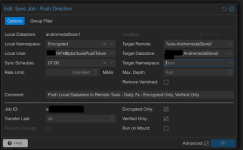

That all seems correct. It's possible I've misunderstood something, but I think the issue is probably in my Sync Job configuration.

Anybody see anything obvious that's missing?

I'm kind of wondering if I misunderstood how to set the permissions/path for the local PBS user.

It connects just fine to the local server, but doesn't find anything to push.

Thanks!

However, that thread was more about how to set up remote sync at all. After thinking about it, I decided to break this out into a separate troubleshooting thread to make it easier to find for anyone having a similar issue.

I'm getting this notice when the task succeeds (TASK OK):

Code:

2025-08-17T15:55:06-05:00: Starting datastore sync job 'Tuxis-AndromedaStore2:DB2685_AndromedaStore2:andromedaStore1:Encrypted:s-d348470b-04e8'
2025-08-17T15:55:06-05:00: sync datastore 'andromedaStore1' to 'Tuxis-AndromedaStore2/DB2685_AndromedaStore2'
2025-08-17T15:55:07-05:00: Summary: sync job found no new data to push
2025-08-17T15:55:07-05:00: sync job 'Tuxis-AndromedaStore2:DB2685_AndromedaStore2:andromedaStore1:Encrypted:s-d348470b-04e8' end
2025-08-17T15:55:07-05:00: queued notification (id=7bf63506-dc33-4041-b7a1-c45a074bc374)
2025-08-17T15:55:07-05:00: TASK OK

I've got about 300 GB of data on my local PBS that needs to sync to the remote, so clearly I've misconfigured something. I'm not sure whether it's the permissions or something else.

I have a Local PBS User on my Local PBS with `RemoteSyncPushOperator` on path `/remote`.

- This user only exists to do the push operation, and I have another local admin user that I use to manage the whole server.
- I know I could theoretically narrow the `/remote` path down to the actual datastore over there, but I've only got one namespace and one datastore over there, and I'm trying to keep the setup simple while I'm getting it working.
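On narrowing the path: my understanding is that PBS ACL entries are hierarchical, so an entry with the propagate flag set also applies to every path below it. Here's that rule as a simplified Python model (my own sketch, not PBS code). The `/remote/<remote>/<store>/<ns>` layout and the names `my-remote`, `remote-store`, `other-remote`, and `local-store` are hypothetical placeholders, not my actual config.

```python
def acl_applies(acl_path: str, target_path: str, propagate: bool = True) -> bool:
    """Return True if an ACL entry on acl_path applies to target_path.

    Simplified model (assumption, not PBS's implementation): an entry always
    applies to its own path, and applies to descendant paths only when
    propagate is set.
    """
    acl_parts = [p for p in acl_path.split("/") if p]
    target_parts = [p for p in target_path.split("/") if p]
    if acl_parts == target_parts:
        return True
    # acl_path is a strict ancestor of target_path (the root "/" is an ancestor of everything).
    is_ancestor = (
        len(acl_parts) < len(target_parts)
        and target_parts[: len(acl_parts)] == acl_parts
    )
    return propagate and is_ancestor


# Hypothetical path layout and names, for illustration only.
remote_target = "/remote/my-remote/remote-store/Encrypted"
print(acl_applies("/remote", remote_target))                   # True
print(acl_applies("/remote", remote_target, propagate=False))  # False
print(acl_applies("/remote/other-remote", remote_target))      # False
# An entry on /remote does not cover paths under /datastore.
print(acl_applies("/remote", "/datastore/local-store/Encrypted"))  # False
```

Under this model, an entry on `/remote` with propagation behaves like one on the specific datastore path as long as there's only one remote, which is why I kept it broad for now.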

$PathToDatastoreOnRemotePBS.

That all seems correct. It's possible I've misunderstood something, but I think the issue is probably in my Sync Job configuration.

Code:

root@andromeda0:/etc/proxmox-backup# batcat sync.cfg
sync: s-d348470b-04e8
	comment Push Local Datastore to Remote Tuxis - Daily 7a - Encrypted Only, Verified Only
	encrypted-only true
	ns Encrypted
	owner $API_TOKEN
	remote $RemoteName
	remote-ns
	remote-store $RemoteStore
	schedule 07:00
	store $LocalStore
	sync-direction push
	verified-only true

Anybody see anything obvious that's missing?

I'm kind of wondering if I misunderstood how to set the permissions/path for the local PBS user.

It connects just fine to the local server, but doesn't find anything to push.
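For reference, here's my rough mental model of how `encrypted-only true` and `verified-only true` combine: a snapshot is only a push candidate if it passes every enabled filter. This is a simplified Python sketch, not how PBS implements it; the `Snapshot` type, its fields, and the snapshot names are hypothetical, and it ignores the `ns Encrypted` namespace selection.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    # Hypothetical fields for illustration; not PBS's internal data model.
    name: str
    encrypted: bool
    verified: bool


def push_candidates(snapshots, encrypted_only=True, verified_only=True):
    """Return the snapshots that pass every enabled filter (simplified model)."""
    result = []
    for snap in snapshots:
        if encrypted_only and not snap.encrypted:
            continue  # filtered out by encrypted-only
        if verified_only and not snap.verified:
            continue  # filtered out by verified-only
        result.append(snap)
    return result


# Hypothetical snapshots: one encrypted but unverified, one verified but unencrypted.
snaps = [
    Snapshot("vm/100/2025-08-16T07:00:00Z", encrypted=True, verified=False),
    Snapshot("vm/101/2025-08-16T07:00:00Z", encrypted=False, verified=True),
]
print([s.name for s in push_candidates(snaps)])                       # []
print([s.name for s in push_candidates(snaps, verified_only=False)])  # ['vm/100/2025-08-16T07:00:00Z']
```

If that model is right, a job with both flags set would have nothing to push until at least one snapshot is both encrypted and verified.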

Thanks!