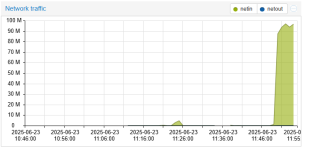

My 10G NIC cannot reach 10 Gbit/s with a single thread (one iperf3 stream). How can I fix this? It is a point-to-point connection with no switch in between.

Because of this issue, importing a VM from ESXi is capped at about 1 Gbit/s.

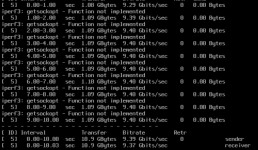

Single Thread

root@pve001:~# iperf3 -c 10.0.0.1

Connecting to host 10.0.0.1, port 5201

[ 5] local 10.0.0.21 port 40094 connected to 10.0.0.1 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 231 MBytes 1.94 Gbits/sec 0 153 KBytes

[ 5] 1.00-2.00 sec 237 MBytes 1.99 Gbits/sec 0 153 KBytes

[ 5] 2.00-3.00 sec 235 MBytes 1.97 Gbits/sec 0 153 KBytes

[ 5] 3.00-4.00 sec 236 MBytes 1.98 Gbits/sec 0 153 KBytes

[ 5] 4.00-5.00 sec 251 MBytes 2.11 Gbits/sec 0 153 KBytes

[ 5] 5.00-6.00 sec 250 MBytes 2.10 Gbits/sec 0 153 KBytes

[ 5] 6.00-7.00 sec 235 MBytes 1.97 Gbits/sec 0 153 KBytes

[ 5] 7.00-8.00 sec 242 MBytes 2.03 Gbits/sec 0 153 KBytes

[ 5] 8.00-9.00 sec 237 MBytes 1.99 Gbits/sec 0 153 KBytes

[ 5] 9.00-10.00 sec 238 MBytes 1.99 Gbits/sec 0 153 KBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 2.34 GBytes 2.01 Gbits/sec 0 sender

[ 5] 0.00-10.00 sec 2.34 GBytes 2.01 Gbits/sec receiver

Multi Thread

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 1.55 GBytes 1.33 Gbits/sec 0 sender

[ 5] 0.00-10.00 sec 1.55 GBytes 1.33 Gbits/sec receiver

[ 7] 0.00-10.00 sec 1.79 GBytes 1.54 Gbits/sec 0 sender

[ 7] 0.00-10.00 sec 1.79 GBytes 1.54 Gbits/sec receiver

[ 9] 0.00-10.00 sec 1.77 GBytes 1.52 Gbits/sec 0 sender

[ 9] 0.00-10.00 sec 1.77 GBytes 1.52 Gbits/sec receiver

[ 11] 0.00-10.00 sec 1.53 GBytes 1.31 Gbits/sec 0 sender

[ 11] 0.00-10.00 sec 1.53 GBytes 1.31 Gbits/sec receiver

[ 13] 0.00-10.00 sec 1.71 GBytes 1.47 Gbits/sec 0 sender

[ 13] 0.00-10.00 sec 1.71 GBytes 1.46 Gbits/sec receiver

[ 15] 0.00-10.00 sec 1.50 GBytes 1.29 Gbits/sec 0 sender

[ 15] 0.00-10.00 sec 1.50 GBytes 1.29 Gbits/sec receiver

[SUM] 0.00-10.00 sec 9.85 GBytes 8.46 Gbits/sec 0 sender

[SUM] 0.00-10.00 sec 9.84 GBytes 8.46 Gbits/sec receiver
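
As a sanity check on the numbers above, here is a minimal Python sketch that adds up the six per-stream sender rates and compares the total with the single-thread result and the 10 Gbit/s link speed. The values are copied from the iperf3 output; the variable names are just mine for illustration.

```python
# Per-stream sender bitrates in Gbit/s, copied from the multi-thread summary.
stream_gbps = [1.33, 1.54, 1.52, 1.31, 1.47, 1.29]
single_stream_gbps = 2.01  # single-thread sender result
link_gbps = 10.0           # link speed (Speed: 10000Mb/s in ethtool)

# Aggregate throughput across all parallel streams.
total_gbps = round(sum(stream_gbps), 2)

# Fraction of the link that the parallel run fills.
utilization = total_gbps / link_gbps

# How much faster the parallel run is than one stream.
speedup = total_gbps / single_stream_gbps

print(f"aggregate: {total_gbps:.2f} Gbit/s")
print(f"link utilization: {utilization:.0%}")
print(f"vs single stream: {speedup:.1f}x")
```

The aggregate matches the [SUM] line, so the link itself can carry close to 10 Gbit/s. Only the single stream is stuck at about 2 Gbit/s.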

root@pve001:~# dmesg | grep Broadcom

[ 3.690740] bnxt_en 0000:60:00.0 eth0: Broadcom BCM57504 NetXtreme-E 10Gb/25Gb/50Gb/100Gb/200Gb Ethernet found at mem 23ffff030000, node addr 8c:84:74:c2:bf:a0

[ 3.713955] bnxt_en 0000:60:00.1 eth1: Broadcom BCM57504 NetXtreme-E 10Gb/25Gb/50Gb/100Gb/200Gb Ethernet found at mem 23ffff020000, node addr 8c:84:74:c2:bf:a1

[ 3.738832] bnxt_en 0000:60:00.2 eth2: Broadcom BCM57504 NetXtreme-E 10Gb/25Gb/50Gb/100Gb/200Gb Ethernet found at mem 23ffff010000, node addr 8c:84:74:c2:bf:a2

[ 3.766209] bnxt_en 0000:60:00.3 eth3: Broadcom BCM57504 NetXtreme-E 10Gb/25Gb/50Gb/100Gb/200Gb Ethernet found at mem 23ffff000000, node addr 8c:84:74:c2:bf:a3

root@pve001:~# ethtool eno17395np0

Settings for eno17395np0:

Supported ports: [ FIBRE ]

Supported link modes: 25000baseSR/Full

10000baseSR/Full

Supported pause frame use: Symmetric Receive-only

Supports auto-negotiation: Yes

Supported FEC modes: RS BASER

Advertised link modes: Not reported

Advertised pause frame use: No

Advertised auto-negotiation: No

Advertised FEC modes: Not reported

Speed: 10000Mb/s

Lanes: 1

Duplex: Full

Auto-negotiation: off

Port: FIBRE

PHYAD: 0

Transceiver: internal

Supports Wake-on: g

Wake-on: d

Current message level: 0x00002081 (8321)

drv tx_err hw

Link detected: yes

root@pve001:~#
