Hello everyone! I am currently testing how to get the best disk performance (IOPS) inside a VM. I noticed that there is a hard limit inside the VM: about 120K random-write IOPS with the VirtIO SCSI single controller and about 170k with VirtIO Block (more exact values are listed below). I was able to narrow the cause down to the IO thread: all IO for the disk is processed in a single thread, and this cannot be extended with the standard QEMU parameters. As a result, the maximum speed scales with single-core clock speed, and during the tests that core was at 100%. Normally you should be able to define several IO threads and queues for a VirtIO block device: https://blogs.oracle.com/linux/post/virtioblk-using-iothread-vq-mapping

So my question is: will this option be included in Proxmox soon? If you pass the necessary parameters as custom arguments in the VM config (`args:`), you only get the error message: “Property ‘virtio-blk-pci.num_queues’ not found”. It would be a huge performance gain: depending on how many IO threads you assign and whether you also apply CPU pinning, you get close to native speed.
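For reference, a minimal sketch of the QEMU arguments the linked blog post describes. It assumes QEMU 9.0 or newer (as far as I know, the release that added `iothread-vq-mapping` to virtio-blk) and that this list-valued property has to be passed in the JSON form of `-device`. The helper name `vq_mapping_args` and the drive node name `drive0` are hypothetical. Note also that QEMU spells the queue-count property `num-queues` with a hyphen, which may explain why `num_queues` is reported as not found.

```shell
#!/bin/sh
# Hypothetical helper: print QEMU arguments that create N IOThreads and map the
# virtqueues of one virtio-blk-pci device across them (assumes QEMU >= 9.0).
# DRIVE must be the node-name of an existing -blockdev (assumption). N must be >= 1.
vq_mapping_args() {
  n=$1      # number of IOThreads to create
  drive=$2  # -blockdev node-name to attach
  objs=""
  mapping=""
  i=0
  while [ "$i" -lt "$n" ]; do
    objs="$objs -object iothread,id=iothread$i"
    # One mapping entry per IOThread; no explicit "vqs" list is given.
    entry="{\"iothread\":\"iothread$i\"}"
    if [ -z "$mapping" ]; then mapping="$entry"; else mapping="$mapping,$entry"; fi
    i=$((i + 1))
  done
  # Emit the -device argument in JSON syntax, single-quoted for the shell.
  printf '%s -device '\''{"driver":"virtio-blk-pci","drive":"%s","iothread-vq-mapping":[%s]}'\''\n' \
    "${objs# }" "$drive" "$mapping"
}

vq_mapping_args 2 drive0
```

Whether and how this can be combined with the drive and device arguments Proxmox generates itself is not covered by this sketch.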

It may be worth mentioning that I also ran the same tests on a standalone NVMe drive formatted with ext4, to rule out ZFS as the root cause. On the host it was slightly faster in some tests, but the limit inside the VM was the same.

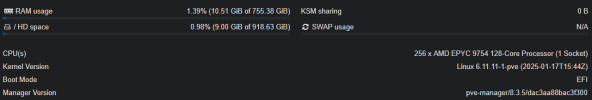

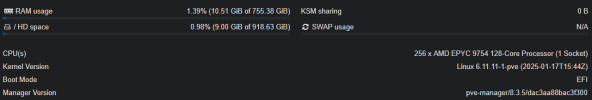



For the tests inside the VM, to keep it shorter I'll only attach the randread results for both storage controller types.



Host:

Storage Config:

ZFS striped mirror vdevs (RAID10): 4x Kioxia CD8P-R SIE (7.68 TB)
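A dry-run sketch of how a pool with this layout, plus the dataset properties listed for the host benchmark, could be created. The pool name `vdev-mirror` is only inferred from the fio directory `/vdev-mirror/FIO/`, the disk paths are placeholders, and reading "ARC=all" as `primarycache=all` is an assumption. The function only prints the commands, because `zpool create` wipes the listed disks.

```shell
#!/bin/sh
# Dry run: print (do not execute) commands for a 2x2 mirror pool (RAID10).
# Pool name and disk paths are hypothetical placeholders.
POOL=vdev-mirror
DISKS="/dev/disk/by-id/nvme-disk1 /dev/disk/by-id/nvme-disk2 /dev/disk/by-id/nvme-disk3 /dev/disk/by-id/nvme-disk4"

zfs_setup_cmds() {
  # Intentionally unquoted: split DISKS into $1..$4.
  set -- $DISKS
  # Two mirror vdevs striped together; ashift is fixed per vdev at creation time.
  echo "zpool create -o ashift=12 $POOL mirror $1 $2 mirror $3 $4"
  # Dataset properties from the benchmark; "ARC=all" assumed to mean primarycache=all.
  echo "zfs set compression=lz4 dedup=off atime=off recordsize=16K primarycache=all $POOL"
}

zfs_setup_cmds
```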

Benchmark Results:

On Host ZFS:

compression=lz4, ARC=all, dedup=off, ashift=12, atime=off, recordsize=16K

| Test name | IOPS | Bandwidth (MB/s) |
|---|---:|---:|
| randread | 1425k | 5838 |
| randwrite | 284k | 1162 |
| seqread | 2121k | 8687 |
| seqwrite | 497k | 2035 |
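The bandwidth column follows directly from the IOPS column, since every test uses a 4 KiB block size (`--bs=4k`): throughput = IOPS × 4096 bytes. A small sketch of that arithmetic (the helper name `iops_to_mbps` is hypothetical). The last digit can differ from the table (5836 vs. 5838 for randread) because the IOPS figures are rounded to thousands.

```shell
#!/bin/sh
# Convert IOPS to throughput in MB/s (10^6 bytes) for a fixed block size.
# Integer arithmetic, so the result is truncated rather than rounded.
iops_to_mbps() {
  iops=$1   # operations per second
  bs=$2     # block size in bytes (--bs=4k -> 4096)
  echo $(( iops * bs / 1000000 ))
}

# Host results from the table, IOPS written out in full.
for row in randread:1425000 randwrite:284000 seqread:2121000 seqwrite:497000; do
  name=${row%%:*}
  iops=${row#*:}
  echo "$name $(iops_to_mbps "$iops" 4096) MB/s"
done
```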

```shell
# RND write
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=randwrite --numjobs=16 --ramp_time=10 --runtime=60 --rw=randwrite --size=256M --time_based --directory=/vdev-mirror/FIO/

# RND read
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=randread --numjobs=16 --ramp_time=10 --runtime=60 --rw=randread --size=256M --time_based --directory=/vdev-mirror/FIO/

# SEQ write
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=seqwrite --numjobs=16 --ramp_time=10 --runtime=60 --rw=write --size=256M --time_based --directory=/vdev-mirror/FIO/

# SEQ read
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=seqread --numjobs=16 --ramp_time=10 --runtime=60 --rw=read --size=256M --time_based --directory=/vdev-mirror/FIO/
```

Inside VM:

- 32x vCPU

- Host CPU Type

- 32GB RAM

- iothread=true

- discard=true

- Raw disk on ZFS vdev mirror

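A dry-run sketch of how this VM spec maps onto Proxmox `qm set` options, assuming "32x vCPU" means 32 cores on one socket and that the disk volume already exists. The VMID `100`, the volume name and the helper `qm_cmd` are hypothetical. For a SCSI disk, `iothread=1` only gives the disk its own thread with the VirtIO SCSI single controller, so the sketch sets `--scsihw virtio-scsi-single` in that case.

```shell
#!/bin/sh
# Dry run: print a "qm set" command matching the VM spec above.
# VMID and volume name are hypothetical placeholders.
qm_cmd() {
  vmid=$1     # e.g. 100
  bus=$2      # "scsi" (VirtIO SCSI single) or "virtio" (VirtIO Block)
  volume=$3   # existing volume, e.g. local-zfs:vm-100-disk-0
  hw=""
  # iothread=1 on a SCSI disk needs the "single" controller variant.
  if [ "$bus" = scsi ]; then hw=" --scsihw virtio-scsi-single"; fi
  echo "qm set $vmid --cores 32 --cpu host --memory 32768$hw --${bus}0 $volume,iothread=1,discard=on"
}

qm_cmd 100 scsi local-zfs:vm-100-disk-0
qm_cmd 100 virtio local-zfs:vm-100-disk-0
```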

With VirtIO SCSI controller:

```
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=randread --numjobs=16 --ramp_time=10 --runtime=60 --rw=randread --size=256M --time_based --directory=/home/FIO/
randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=32
...
fio-3.33
Starting 16 processes
Jobs: 16 (f=16): [r(16)][100.0%][r=466MiB/s][r=119k IOPS][eta 00m:00s]
randread: (groupid=0, jobs=16): err= 0: pid=1025: Wed Mar 19 15:24:45 2025
  read: IOPS=123k, BW=481MiB/s (504MB/s)(28.2GiB/60010msec)
   bw ( KiB/s): min=371629, max=656446, per=100.00%, avg=492956.03, stdev=3478.08, samples=1920
   iops : min=92904, max=164106, avg=123236.11, stdev=869.51, samples=1920
  cpu : usr=1.65%, sys=9.12%, ctx=4293969, majf=0, minf=588
  IO depths : 1=0.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=100.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=7387878,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32
```
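To compare runs like the one above with the VirtIO Block and Ubuntu results, it helps to extract just the summary IOPS value. A minimal `sed` sketch (the function name `summary_iops` is hypothetical). It matches summary lines such as `read: IOPS=...` or `randread: IOPS=...` and ignores the live `Jobs:` progress line.

```shell
#!/bin/sh
# Print the summary IOPS value (e.g. "123k") from fio's human-readable output on stdin.
summary_iops() {
  # Lines of the form "<lowercase word>: IOPS=<value>, ..."; everything else is dropped.
  sed -n 's/^[[:space:]]*[a-z]*: IOPS=\([^,]*\),.*/\1/p'
}

printf '%s\n' 'randread: IOPS=630k, BW=2460MiB/s (2579MB/s)(144GiB/60003msec)' | summary_iops
```

For anything beyond a quick comparison, fio's `--output-format=json` is more robust than parsing the text output.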

With VirtIO Block controller:

```
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=randread --numjobs=16 --ramp_time=10 --runtime=60 --rw=randread --size=256M --time_based --directory=/mnt/mount2/
randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=32
...
fio-3.33
Starting 16 processes
Jobs: 16 (f=16): [r(16)][100.0%][r=653MiB/s][r=167k IOPS][eta 00m:00s]
randread: (groupid=0, jobs=16): err= 0: pid=979: Wed Mar 19 14:34:50 2025
  read: IOPS=169k, BW=659MiB/s (691MB/s)(38.6GiB/60008msec)
   bw ( KiB/s): min=667121, max=790884, per=100.00%, avg=675333.80, stdev=1131.55, samples=1916
   iops : min=166778, max=197718, avg=168831.60, stdev=282.89, samples=1916
  cpu : usr=0.68%, sys=2.02%, ctx=321241, majf=0, minf=587
  IO depths : 1=0.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=100.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=10124405,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32
```

Inside a VM on an Ubuntu host with a plain QEMU/KVM installation and two iothreads:

The more iothreads you assign to the VirtIO Block device, the better the performance inside the VM.
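An illustration of why more iothreads help, assuming the behaviour documented for `iothread-vq-mapping` when no explicit `vqs` list is given: the device's virtqueues are dealt out round-robin across the listed IOThreads. With one IOThread every queue lands on the same host thread, which matches the single-core bottleneck described at the top; with two, each thread serves half of the queues. The function `rr_assign` is hypothetical and only prints the assignment.

```shell
#!/bin/sh
# Illustration only: which IOThread serves each virtqueue under a round-robin split
# (assumed QEMU default when the mapping has no "vqs" lists).
rr_assign() {
  queues=$1    # number of virtqueues (num-queues)
  threads=$2   # number of IOThreads in the mapping
  q=0
  while [ "$q" -lt "$queues" ]; do
    echo "vq$q -> iothread$(( q % threads ))"
    q=$((q + 1))
  done
}

rr_assign 4 2
```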

```
fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=randread --numjobs=16 --ramp_time=10 --runtime=60 --rw=randread --size=256M --time_based --directory=/home/FIO/
randread: IOPS=630k, BW=2460MiB/s (2579MB/s)(144GiB/60003msec)
```
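All fio runs in this post use the same job definition; only `--name`, `--rw` and `--directory` change. A small sketch that rebuilds the four host commands from that template (the function `fio_cmd` and the `FIO_DIR` override are hypothetical). It only prints the commands, so the output can be piped to `sh` to run them one after another.

```shell
#!/bin/sh
# Rebuild the fio command used throughout this post; only name, mode and directory vary.
fio_cmd() {
  name=$1   # job name: randwrite, randread, seqwrite, seqread
  rw=$2     # fio --rw mode: randwrite, randread, write, read
  dir=$3    # target directory
  echo "fio --bs=4k --direct=1 --gtod_reduce=1 --ioengine=libaio --iodepth=32 --group_reporting --name=$name --numjobs=16 --ramp_time=10 --runtime=60 --rw=$rw --size=256M --time_based --directory=$dir"
}

# Host directory by default; set FIO_DIR (e.g. /home/FIO/) for runs inside a VM.
DIR=${FIO_DIR:-/vdev-mirror/FIO/}
for t in randwrite:randwrite randread:randread seqwrite:write seqread:read; do
  fio_cmd "${t%%:*}" "${t#*:}" "$DIR"
done
```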
