silverstone's latest activity

-

silverstone reacted to t.lamprecht's post in the thread Debian 13.1 LXC template fails to create/start (FIX) with Like.

The check as it was previously was indeed not ideal, so we not only fixed the issue itself but also changed the check such that it cannot happen in this form again, as now PVE either supports a major Debian release or it doesn't, but no break...

-

silverstone replied to the thread Debian 13.1 LXC template fails to create/start (FIX). I agree 100% :( .

-

silverstone reacted to binaryanomaly's post in the thread Debian 13.1 LXC template fails to create/start (FIX) with Like.

This is exactly what happened. Upgraded to Debian 13.1 and the container suddenly stopped running after a restart. This shouldn't happen; it should maybe raise a warning, but not block the start.

-

silverstone reacted to Impact's post in the thread Debian 13.1 LXC template fails to create/start (FIX) with Like.

Since I have need for this myself I've created a temporary patch for this. You can apply it like this wget...

-

silverstone replied to the thread Debian 13.1 LXC template fails to create/start (FIX). To be fair Proxmox VE is far from the only one doing this. At least in this Case the Workaround is quite simple. And most of the Stuff gets fixed relatively quickly by the Proxmox VE Team. They also provide good Answers in most Cases, although I...

-

silverstone reacted to binaryanomaly's post in the thread Debian 13.1 LXC template fails to create/start (FIX) with Like.

This is not a very robust way of doing things Proxmox / @t.lamprecht ... Stuff like this should soft-fail and not suddenly and completely prevent legitimate containers that have just been updated from being launched, with a not so clear error message.

-

silverstone replied to the thread [SOLVED] Issue NFS between host and VM. I am doing the reverse (NFS Export on the Proxmox VE Host to a Debian VM, Private Network in this Case otherwise NFS via Wireguard if remote Host) but I'd say that your /etc/exports seems way too simple ... Here is mine for Comparison that ONLY...

-

silverstone reacted to tsv0's post in the thread Debian 13.1 LXC template fails to create/start (FIX) with Like.

Hi All, hit an issue with updated LXC to Debian 13.1. TASK ERROR: unable to create CT 200 - unsupported debian version '13.1'. FIX: you need to edit /usr/share/perl5/PVE/LXC/Setup/Debian.pm on line 39 and change from die "unsupported...

-

silverstone replied to the thread Debian 13.1 LXC template fails to create/start (FIX). Same Issue and yes, the Workaround works :) . I think that the Proxmox VE Team should just make a <= (current release + 1) Check or just filter the Content of /etc/os-release or /etc/debian_version to only keep the Major Version (13 in this...
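To illustrate the "only keep the Major Version" idea from the reply above, here is a minimal Python sketch. It is not the actual check in PVE's Debian.pm (which is Perl); the function names `debian_major` and `is_supported` and the version bounds used in the example are hypothetical and chosen only for illustration.

```python
import re


def debian_major(version_text):
    """Return the leading major version number as an int, or None if there is none.

    Works on strings such as the content of /etc/debian_version ("13.1\n").
    """
    match = re.match(r"\s*(\d+)", version_text)
    return int(match.group(1)) if match else None


def is_supported(version_text, min_major, max_major):
    """Hypothetical support check that ignores the point release entirely."""
    major = debian_major(version_text)
    return major is not None and min_major <= major <= max_major


if __name__ == "__main__":
    # The bounds 4 and 13 are assumptions for this example, not PVE's real limits.
    print(is_supported("13.1\n", 4, 13))
    print(is_supported("trixie/sid", 4, 13))
```

With a check of this shape, a point release such as 13.1 is treated the same as 13, and a non-numeric version string (for example a testing/sid name) is simply reported as unsupported instead of raising an error.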

-

silverstone replied to the thread Podman in rootless mode on LXC container. I also came up with a Script & a Systemd Timer+Service which can be installed directly INSIDE the LXC Container and run Hourly to fix those Permissions if they change due to System Updates...

-

silverstone reacted to janus57's post in the thread [TUTORIAL] [High Availability] Watchdog reboots with Like.

Hi, with 4 nodes, if 2 are down your whole cluster is down, because the quorum needs over 50% of the votes, so you should always want an odd number (3/5/7/9 etc.). Best regards,
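The arithmetic behind this can be sketched in a few lines of Python. This assumes every node carries exactly one vote and no external tie-breaker (such as a QDevice) is configured; the function names are made up for this example.

```python
def quorum_threshold(nodes):
    """Smallest number of votes that is strictly more than 50% of all votes."""
    return nodes // 2 + 1


def tolerated_failures(nodes):
    """How many nodes can go down while the remaining ones still have quorum."""
    return nodes - quorum_threshold(nodes)


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, quorum_threshold(n), tolerated_failures(n))
```

Under these assumptions, 4 nodes need 3 votes for quorum and tolerate only 1 failure, the same as 3 nodes, while 5 nodes tolerate 2. That is why going from an odd to an even node count adds no extra failure tolerance.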

-

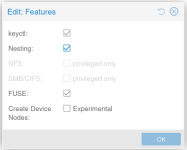

silverstone replied to the thread Podman in rootless mode on LXC container. For the last couple of Errors, I added this to /etc/pve/lxc/101.conf:

    lxc.cgroup.devices.allow: c 10:200 rwm
    lxc.cgroup2.devices.allow: c 10:200 rwm
    lxc.mount.entry: /dev/net dev/net none bind,create=dir

Also enabled FUSE in the Container...

-

silverstone replied to the thread Podman in rootless mode on LXC container. Let's try to change that: root@pve:~# stat /rpool/data/subvol-101-disk-0/usr/sbin/newuidmap File: /rpool/data/subvol-101-disk-0/usr/sbin/newuidmap Size: 35288 Blocks: 42 IO Block: 35328 regular file Device: 0,60 Inode...

-

silverstone replied to the thread Podman in rootless mode on LXC container. I wanted to give this another Try today on a new System ... Failing (again) :(. I followed your instructions to the Letter (for a Fedora LXC Container) but I'm always stuck with ERRO[0000] running `/usr/bin/newuidmap 569 0 1000 1 1 100000...

-

silverstone replied to the thread [TUTORIAL] [High Availability] Watchdog reboots. Wouldn't it be better to just have a second Switch and enable STP ? Or have it on a separate Parallel Subnet, that could also be another Possibility I guess.

-

silverstone reacted to magingale's post in the thread [TUTORIAL] [High Availability] Watchdog reboots with Like.

@silverstone the only difference between these tests is enabling HA. My conclusion: CRM is rebooting the wrong node and causing a full cluster outage via watchdog fencing on the remaining node. Test number two with HA configured: pve-ha-crm is...

-

silverstone replied to the thread [TUTORIAL] [High Availability] Watchdog reboots. So what exactly is the difference between these 2 Tests and what you did yesterday, which (apparently) resulted in the "healthy" Node also rebooting ?

-

silverstone replied to the thread Stuck immediately after Bootloader / start of Kernel Boot. Good Point :). It could be running low indeed. As an intermediary Step I think I could connect the Port on both Systems (one at a time) to a Zyxel GS1910-24 Switch to see if it is "alive", and check if they work at all. If they do then it might...

-

silverstone reacted to celemine1gig's post in the thread Stuck immediately after Bootloader / start of Kernel Boot with Like.

Final idea for today, considering the board's age: Did you already check the voltage level of the CMOS battery? The evil range somewhere between 0-2V is always a good explanation for "stupid" or "weird" stuff going on. If it's nothing else, that...

-

silverstone replied to the thread [TUTORIAL] [High Availability] Watchdog reboots. Alright :). Never say never ;). If they have 100% reachability why is the other Node going down ;) ? You say you are certain it's the Watchdog, but does the Watchdog or the PVE Service give any indication as to why ? Is it communication...