RolandK's latest activity


RolandK replied to the thread "Fehler mit Docker Container nach Linux update" (Docker container errors after a Linux update): > That's not the point at all. I find it entirely legitimate to use Docker in LXCs, as long as you are aware of the problems and can live with the consequences that come with them (service outages, troubleshooting, etc.). My impression is...

RolandK reacted to Falk R.'s post in the thread Fehler mit Docker Container nach Linux update with Like.

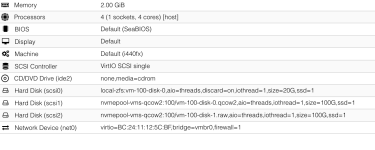

My VM with the Docker containers is actually not that wasteful on the small Atom processor. If the small kernel is too much, then the box is a bit undersized. Why, actually, should I only make incremental backups of the LXC...

RolandK replied to the thread "special interest thread: qcow2 woes and interesting findings": I don't see a performance regression. The performance disadvantages of qcow2 on top of ZFS are greatly exaggerated, IMHO. qcow2: `# dd if=/dev/zero of=/dev/sdb bs=1024k status=progress count=10240` reports 9954131968 bytes (10 GB, 9.3 GiB) copied, 12...

RolandK posted the thread "special interest thread: qcow2 woes and interesting findings" in Proxmox VE: Installation and configuration. This is not meant to make users/customers uncertain about product quality, but whoever is more deeply into using qcow2 may want to have a look at https://bugzilla.proxmox.com/show_bug.cgi?id=7012; it's about a case where qcow2 performs pathologically...

RolandK reacted to fiona's post in the thread proxmox bugzilla open tickets reduction effort - how to start/proceed? with Like.

Hi, a large part of that is feature requests, and that is not a bad thing in itself. Many things are easy to request but take a lot of effort to actually implement. There's a wide variety of use cases and wishes. If they really are outdated, feel...

RolandK reacted to pjottrr's post in the thread weird fileserver issues after upgrading to proxmox 9 with Like.

So I disabled (removed) net1 from the broken server and the problem is gone :) No more hiccups... Performance is a bit on the low side, but no full hiccups here. So I reinstated net1, and the problem returned. This is how it looks when...

RolandK replied to the thread "weird fileserver issues after upgrading to proxmox 9": > I still don't know why that 2nd network line causes this, and I don't think it is too interesting to know? Maybe asymmetric routing?

RolandK reacted to t.lamprecht's post in the thread Fehler mit Docker Container nach Linux update with Like.

I have moved the posts into a thread of their own: https://forum.proxmox.com/threads/docker-env-file-in-docker-compose-debian-lxc-ct.175753/

RolandK replied to the thread "Fehler mit Docker Container nach Linux update" (Docker container errors after a Linux update): Sorry, but could we please stay on topic here?

RolandK replied to the thread "weird fileserver issues after upgrading to proxmox 9": I would first temporarily disable net1 in the old container and then give the new LXC 4 cores and 2048 MB of RAM. Comparing two different operating systems is hard enough, but they should at least have the same LXC/hardware resources. I guess the most prominent...
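
The comparison setup suggested above could look roughly like this with `pct`. This is a minimal sketch, assuming the old and new containers are CT 101 and 102 (hypothetical IDs) and that "disabling" net1 means removing it from the config, as pjottrr did. By default it only prints the commands; set `DRY_RUN=0` to actually run them.

```shell
#!/usr/bin/env bash
# Hypothetical CT IDs; replace with your own.
OLD_CT=101   # old container (the one with net0 and net1)
NEW_CT=102   # new container used for comparison

# Only print the commands unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "$*"
    fi
}

# 1. Show the config first and note the net1 line, so it can be re-added
#    later with: pct set <ctid> --net1 '<saved value>'
run pct config "$OLD_CT"
# 2. Remove net1 from the old container (this deletes it from the config).
run pct set "$OLD_CT" --delete net1
# 3. Give the new container 4 cores and 2048 MB of RAM.
run pct set "$NEW_CT" --cores 4 --memory 2048
```

The dry-run wrapper is only a convenience so the commands can be reviewed before anything is changed on the host.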

RolandK replied to the thread "weird fileserver issues after upgrading to proxmox 9": You mean you have a second LXC container that works without problems? Are both of them TurnKey Linux LXC containers? Does the one with the issue have Docker inside?

RolandK replied to the thread "Use Tablet For Pointer option causing CPU usage on Linux": We had a ticket for this at https://bugzilla.proxmox.com/show_bug.cgi?id=3118, which I just closed. If anybody still sees this with any recent Linux distro, please report it. (I guess one exists, as bugs constantly (re)appear in Linux) ;)

RolandK replied to the thread "Recommendations on KSM threshold with ballooning enabled": Also see https://bugzilla.proxmox.com/show_bug.cgi?id=4482 and https://bugzilla.proxmox.com/show_bug.cgi?id=3859

RolandK reacted to leesteken's post in the thread Recommendations on KSM threshold with ballooning enabled with Like.

I think it's just a historical accident that they are both the same. Each technology might find 80% a logical default. On recent PVE versions, you can also change the ballooning threshold. When using ZFS, where the cache memory counts as used...
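
On the KSM side, the 80% figure comes, to my knowledge, from ksmtuned's `KSM_THRES_COEF` setting in `/etc/ksmtuned.conf` (default 20, i.e. KSM starts merging once free memory drops below roughly 20%). A minimal config sketch, assuming a stock PVE host running ksmtuned; the value 30 is only an illustrative example, not a recommendation:

```shell
# /etc/ksmtuned.conf (excerpt)
# Start KSM when free memory falls below this percentage of total RAM (default: 20).
KSM_THRES_COEF=30
```

After editing, restart the service (e.g. `systemctl restart ksmtuned`) so the new threshold is picked up. The ballooning threshold is a separate setting and is not affected by this file.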

RolandK reacted to Johannes S's post in the thread [SOLVED] Docker inside LXC (net.ipv4.ip_unprivileged_port_start error) with Like.

And a rather nasty one, since it removes AppArmor's additional security mechanism for the container. I can't believe people think that this should be adopted as a fix in an update of Proxmox VE. Maybe it's time to change the wording in the doc from...

RolandK replied to the thread "Fehler mit Docker Container nach Linux update" (Docker container errors after a Linux update): For future "lessons learned", and for the related "copy&paste" to make it easier to get the point across to others: It is a known problem that Docker containers inside LXC containers break every now and then during larger updates. Both...

RolandK replied to the thread "Poor GC/Verify performance on PBS": @floh8, I agree that btrfs may be faster than ZFS for small-file/metadata access, but I would not consider it worth trying as long as Proxmox verify hasn't been optimized for better parallelism. You won't change your large xx terabytes...

RolandK replied to the thread "Poor GC/Verify performance on PBS": @triggad, are you using the latest version of PBS? There has been at least this enhancement, which can increase GC speed a lot: https://git.proxmox.com/?p=proxmox-backup.git;a=commit;h=03143eee0a59cf319be0052e139f7e20e124d572 Total GC runtime...

RolandK replied to the thread "weird fileserver issues after upgrading to proxmox 9": Could you post your LXC container configuration, your Samba configuration from inside the container, your storage.cfg from /etc/pve, and the output of the "mount" command?
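
For anyone answering a request like this, here is a minimal sketch that gathers the requested items into one output on the PVE host. Assumptions: the default container ID 101 is a hypothetical example, the Samba configuration inside the container is dumped with `testparm -s`, and "mount" is taken to mean the host's mount table (running `pct exec <ctid> -- mount` instead would show the container's view).

```shell
#!/usr/bin/env bash
# Gather container config, Samba config, storage.cfg and mounts for one CT.
# The default CT ID 101 is a hypothetical example; pass your own as $1.
collect_diag() {
    local ctid="${1:-101}"

    if command -v pct >/dev/null 2>&1; then
        echo "=== pct config $ctid ==="
        pct config "$ctid"
        echo "=== Samba config inside CT $ctid (testparm -s) ==="
        # -s prints the parsed smb.conf without waiting for a keypress
        pct exec "$ctid" -- testparm -s || echo "(testparm failed: CT stopped or Samba missing?)"
    else
        echo "(pct not found: not running on a Proxmox VE host)"
    fi

    echo "=== /etc/pve/storage.cfg ==="
    cat /etc/pve/storage.cfg 2>/dev/null || echo "(/etc/pve/storage.cfg not readable)"

    echo "=== mount (host) ==="
    # Fall back to the kernel's mount table if the mount binary is absent
    mount 2>/dev/null || cat /proc/mounts
}
```

Usage, e.g.: `collect_diag 101 > ct101-diag.txt 2>&1`, then paste the file contents into the thread.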
