lifeboy's latest activity

-

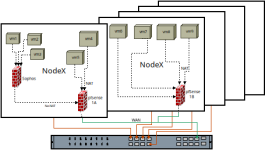

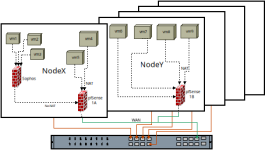

lifeboy posted the thread "Default gateway on VM is ignored in this configuration" in Proxmox VE: Installation and configuration. We have set up a couple of nodes in the way the diagram indicates. All our VMs are on a bridged NIC that is also shared by the pfSense VM LAN port. Let's say, for example, that they are on 192.168.100.0/24 for the VLAN in question. Since there...

-

lifeboy replied to the thread "acme.sh plugin version and troubleshooting". Has anybody successfully used debug to figure out what's going wrong?

-

lifeboy posted the thread "acme.sh plugin version and troubleshooting" in Proxmox VE: Installation and configuration. I'm running pve 8.4.16 and want to renew my LE certificate using dns_miab, but I get an error after the TXT record has been validated. "TASK ERROR: validating challenge...