jsterr's latest activity

jsterr replied to the thread Graphical Errors on PVE Webui (9.1.6). Does the VM console work when you check 4-6 machines?

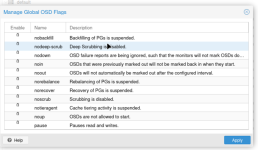

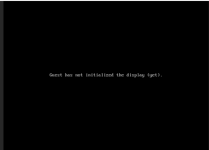

jsterr posted the thread Graphical Errors on PVE Webui (9.1.6) in Proxmox VE: Installation and configuration. Hello, I upgraded some training VMs to PVE 9.1.6 and I'm having some weird issues at the moment that I can't track down. I'm not sure whether they are related to the 9.1.6 upgrade. * Ceph -> OSD -> Global-Flags: the Ceph global flags show the integers "0" or "1" instead of...

jsterr reacted to Johannes S's post in the thread Offline USB backup with PBS – removable datastore too slow, which alternatives do you use? with Like.

If you additionally block incoming connections on the offsite PBS with a firewall/packet filter, you would gain an extra layer of security. Then an attacker could not do anything with it even if the permissions were misconfigured...

jsterr reacted to Bu66as's post in the thread Offline USB backup with PBS – removable datastore too slow, which alternatives do you use? with Like.

@wire2hire, interesting data point with the 70 MB/s on USB 3.1; that clearly contradicts the 4 MB/s from @jsterr. @jsterr, a few thoughts on this: Where do the 4 MB/s come from? The 70 MB/s from wire2hire show that PBS on USB HDDs fundamentally...

jsterr reacted to IsThisThingOn's post in the thread Offline USB backup with PBS – removable datastore too slow, which alternatives do you use? with Like.

That is quite an edge case that cannot be implemented with PBS. PBS cannot do traditional incremental backups. So if you want to implement it 1:1 as with Veeam, you will probably fail. If you are open to ... the system...

jsterr replied to the thread Offline USB backup with PBS – removable datastore too slow, which alternatives do you use? The initial fill would simply take too long at 4 MB/s, but I had already thought of that as well. Regarding point 3) Offline backup means to me: no server with the PBS pulls on it; instead the backup sits entirely on its own medium and can...

jsterr reacted to wire2hire's post in the thread Offline USB backup with PBS – removable datastore too slow, which alternatives do you use? with Like.

Hey, how do you arrive at 4 MB/s for the USB disk? I cannot reproduce that here. The sync job to a USB disk (3.1) is considerably faster here. Just checked: the last sync was 80 GB at an average of 70 MB/s according to the log...

jsterr posted the thread Offline USB backup with PBS – removable datastore too slow, which alternatives do you use? in Proxmox Backup Server (Deutsch/German). Background / use case: A customer of mine wants to migrate from VMware + Veeam to Proxmox VE + PBS and is looking for a sensible solution for the offline backup. Every day, 9 TB of full backups are to be transferred to USB via copy job, so that on...

jsterr replied to the thread NUMA Nodes Per Socket Configuration. This is an additional setting that can be set, "NUMA node per L3 cache", but did you also try to set "NUMA nodes per node" to a different value than the default? On my side it's set to 1 by default. I am interested in knowing if more NUMA nodes is...

jsterr posted the thread NUMA Nodes Per Socket Configuration in Proxmox VE: Installation and configuration. Should NUMA Nodes per Socket be set to 1/2/4 in the BIOS? What's the best configuration for a single-socket AMD CPU? In the VM hardware I would enable NUMA. Or is it better to set NUMA nodes to 1 and not use NUMA in the VM hardware? Has someone made some...

jsterr replied to the thread Ceph 20.2 Tentacle Release Available as test preview and Ceph 18.2 Reef soon to be fully EOL. It's visible when you install a new Proxmox node with Ceph; you can already select it there.

jsterr replied to the thread Ceph 20.2 Tentacle Release Available as test preview and Ceph 18.2 Reef soon to be fully EOL. Seems like Tentacle has landed in no-subscription now (noticed it today) :-)

jsterr reacted to RoCE-geek's post in the thread Redhat VirtIO developers would like to coordinate with Proxmox devs re: "[vioscsi] Reset to device ... system unresponsive" with Like.

The resolving commit for the mentioned vioscsi (and viostor) bug was merged on 21 Jan 2026 into virtio master (commit cade4cb, corresponding tag mm315). So if the to-be-released version is tagged as >= mm315, the patch will be there. As of me...

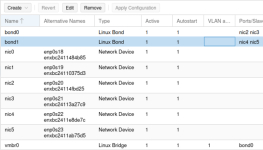

jsterr replied to the thread Proxmox 9.1 and vlans stopped working. Nothing has changed regarding this afaik. You need a switch port with multiple VLANs on it (VLAN trunk), then create a vmbr0 with a bridge port (the one that connects to your switch port with the VLANs on it). Then USE the SDN, as it's the most...

jsterr reacted to t.lamprecht's post in the thread Call for Evidence: Share your Proxmox experience on European Open Digital Ecosystems initiative (deadline: 3 February 2026) with Like.

Dear Proxmox-Community, we are asking for your support. The European Commission has opened a Call for Evidence on the initiative European Open Digital Ecosystems, an initiative that will support EU ambitions to secure technological sovereignty...

jsterr replied to the thread Ceph 20.2 Tentacle Release Available as test preview and Ceph 18.2 Reef soon to be fully EOL. Crimson is not included in pveceph yet.

jsterr replied to the thread Cannot log in via GUI, ssh does not respond. I personally would look at journalctl -u pveproxy.service, limiting the output with --since to the time frame where you had that error. If it was 5 minutes ago, for example, it is: journalctl -u pveproxy.service --since "5 minutes ago"
