fstrankowski's latest activity

fstrankowski replied to the thread Trixie Updates Question. What exactly fails, and where? You have the pve-headers, which let you compile modules yourself and blacklist existing ones. The reason this step is (in most cases) taken very rarely is that you have to recompile everything once...

fstrankowski replied to the thread authentication failure. Besides what @bbgeek17 said, I'd suggest you either set up the Proxmox management side to be accessible only via VPN or, if you know Linux, just close port 8006 and tunnel it through SSH, so that only SSH needs to be open in the firewall. You can...

fstrankowski replied to the thread Problem moving PVE from one motherboard to another. Could you please make a video of the boot process? Did the previous board, by any chance, have some sort of hardware RAID enabled without you knowing?

fstrankowski replied to the thread Adding Proxmox VE 9 nodes to an existing PVE 8.3.2 cluster. I'd strongly discourage anyone from doing that on a production cluster. @SteveITS was correct: it's wise to update, upgrade and then dist-upgrade to bring the whole platform to the same major and minor version.

fstrankowski reacted to EllerholdAG's post in the thread ZFS Pool full even though VM limited to 50% of the pools size with Like.

This is, at least, surprising to me. You have a 500 GB disk on a zpool with 900 GB capacity. You're using no snapshots, which could take up additional space. So what's eating the 399 GB here? Write amplification refers to each little change in...

fstrankowski replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. Thanks to everyone contributing so far! Summary to this point: - Original volume for VM 11000 uses 300GB+ beyond its allocated volsize on a non-raidz, single-disk pool (nearly factor 2x) - Creating new test volumes and filling them with...

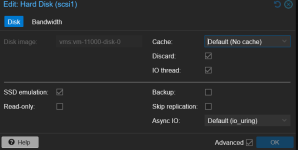

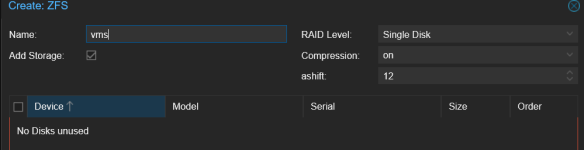

fstrankowski replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. I'm 100% there with you. I created the disk in the most boring way possible: standard settings, single disk, didn't change a single setting. I could (theoretically) delete the volume and recreate it later down the road when I finished...

fstrankowski replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. Just to complete my mental distress, I've tried to verify the behaviour: [root@ ~]# zfs get -p used,logicalused,compressratio,copies,volblocksize vms/vm-11000-disk-0 NAME PROPERTY VALUE SOURCE vms/vm-11000-disk-0 used...

fstrankowski replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. I highly appreciate your time, aaron (as always!). You may be served: [root@ ~]# zfs get all vms NAME PROPERTY VALUE SOURCE vms type filesystem - vms creation Tue May 20...

fstrankowski replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. The VM gets trimmed once every day. All filesystems are trimmed. Your wish may be fulfilled: [root@ ~]# zpool status -v pool: rpool state: ONLINE scan: scrub repaired 0B in 00:00:29 with 0 errors on Sun Dec 14 00:24:30 2025 config...

fstrankowski replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. I was thinking exactly the same, and I'm still not certain whether I've figured out the correct reason yet. It used to be 545G the whole time. Then I filled the partition up and it just kept growing nonstop.

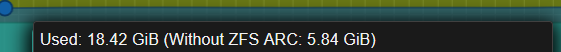

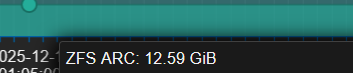

fstrankowski replied to the thread Web UI reports critical memory usage, just caching. Looks totally fine to me. You're using roughly 60GB without L2ARC; the rest is L2ARC usage (~ 170GB) plus some remaining free GB (~ 20). I see absolutely nothing wrong here. The graphs have different colors. If you hover over these graphs it will tell...

fstrankowski posted the thread ZFS Pool full even though VM limited to 50% of the pools size in Proxmox VE: Installation and configuration. Heya! I've just had one of my VMs stall because of a Proxmox "io-error". Well, it seems the pool usage is 100%. [root@~]# zfs get -p volsize,used,logicalused,compressratio vms/vm-11000-disk-0 NAME PROPERTY VALUE SOURCE...

fstrankowski replied to the thread Proxmox / Ceph / Backups & Replica Policy. If you have a pull request for Proxmox, please be so kind as to link it here so I can review/improve it before there is a chance Proxmox will merge it. I'd add the option to the Ceph pool configuration UI because it's linked on a per-pool basis and...

fstrankowski replied to the thread Proxmox / Ceph / Backups & Replica Policy.

rbd config pool set POOLNAME rbd_read_from_replica_policy localize

Regarding the post-mortem: I had to delay my work on that because I have to deal with lots of other stuff with higher in-house priority at the moment. Hopefully I'll be able to...

fstrankowski reacted to Ernie95's post in the thread [SOLVED] Issue with AppArmor : bad configuration with Like.

Solved: no new 'denied' errors in the log.