fdcastel's latest activity

-

64K volblocksize is the sweet spot for Windows VMs, except database or file servers. Pure AD controllers should also use zvols with a 16K blocksize. MS SQL Server, for example: one vSSD with 64K for the OS installation only, and a 2nd vSSD for SQL data...
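A minimal sketch of how a volblocksize like the ones above could be applied on a Proxmox host with ZFS storage. The storage ID `local-zfs` and the zvol name `rpool/data/vm-102-disk-0` are hypothetical placeholders, not values from the thread.

```shell
# Set the default volblocksize for NEW zvols on a (hypothetical) ZFS storage ID.
# Existing disks keep the blocksize they were created with.
pvesm set local-zfs --blocksize 64k

# volblocksize is fixed when a zvol is created; inspect an existing one with:
zfs get volblocksize rpool/data/vm-102-disk-0
```

Because the setting belongs to the storage entry, disks that need a different blocksize (e.g. 16K for an AD controller) would have to be created while a different value is in effect.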

-

Yes, but it probably depends mainly on the controller. The controller can tell you the optimal format; yours is OK with both (512e = 4Kn = Best). Example of the difference from my system: `# nvme id-ns /dev/nvme3n1 -H | grep '^LBA Format'` LBA Format 0 ...
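The `grep '^LBA Format'` check above lists every supported format; the one currently active is the line marked "(in use)". A small sketch that extracts it, assuming nvme-cli's usual human-readable layout (the helper name `lba_in_use` is hypothetical):

```shell
#!/usr/bin/env bash
# Print "<index> <data size in bytes>" for the LBA format marked "(in use)".
# Reads `nvme id-ns -H` output on stdin. Assumes lines shaped like:
#   LBA Format  0 : Metadata Size: 0   bytes - Data Size: 512 bytes - Relative Performance: 0x2 Good (in use)
lba_in_use() {
  sed -n 's/^LBA Format *\([0-9]*\) *:.*Data Size: *\([0-9]*\) bytes.*(in use).*/\1 \2/p'
}

# Usage (needs root and nvme-cli; the device name is only an example):
#   nvme id-ns /dev/nvme3n1 -H | lba_in_use
```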

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
Update: I rebuilt the VMs using VirtIO SCSI, and performance again reached roughly double that of VirtIO Block (matching the results seen when the drives were formatted with 512-byte blocks). This indicates that switching the NVMe block size...

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
Thank you, @ucholak, for sharing your experience and expertise; I really appreciated it. You gave me a glimpse of hope, but unfortunately it faded rather quickly :) I reformatted my NVMe drives to 4K using: nvme format /dev/nvme2n1...

-

I think this may be a bug, or an analysis of an older state (blk missing queues?). At the beginning of the chapter, you have: From my study and usage: SCSI translates SCSI commands to the virtio (virtqueues) layer (overhead ~10-15%), and NOW has only...

-

Just checking: is it also formatted like this, or would 4Kn be better? For example: `nvme id-ns /dev/nvmeXn1 -H | grep LBA`, then `nvme format /dev/nvmeXn1 -l 3`
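The `-l 3` in the example is a format index, and which index corresponds to 4K differs from drive to drive. A hedged sketch that looks the index up by data size instead of hard-coding it (the helper name `lbaf_for_size` is hypothetical), and only prints the format command because `nvme format` erases the namespace:

```shell
#!/usr/bin/env bash
# Print the LBA format index whose data size equals $1 (e.g. 4096),
# reading `nvme id-ns -H` output on stdin. Prints nothing if no format matches.
lbaf_for_size() {
  local size="$1"
  sed -n "s/^LBA Format *\([0-9]*\) *:.*Data Size: *${size} bytes.*/\1/p" | head -n 1
}

# Usage sketch (device name is an example). The command is echoed, not run,
# because `nvme format` wipes all data on the namespace:
#   dev=/dev/nvme0n1
#   idx=$(nvme id-ns "$dev" -H | lbaf_for_size 4096)
#   [ -n "$idx" ] && echo nvme format "$dev" -l "$idx"
```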

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
But for now, no matter what I try, Hyper-V often delivers about 20-25% better random I/O performance than Proxmox, and this has a direct impact on my application's database response times. And, believe me, I tried EVERYTHING...

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
Thanks, @ucholak! I wasn't aware of this command. It appears it's not: `# nvme list` Node Generic SN Model Namespace Usage Format...

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
I didn't even try. According to the Proxmox VE documentation: "The VirtIO Block controller, often just called VirtIO or virtio-blk, is an older type of paravirtualized controller. It has been superseded by the VirtIO SCSI Controller, in terms of..."

-

cache=none leaves the storage system's own cache enabled; use directsync instead. See here for a comparison of caching modes: https://pve.proxmox.com/wiki/Performance_Tweaks#Disk_Cache
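A minimal sketch of switching an existing disk to `cache=directsync` with `qm`. The VMID `100` and the volume `local-zfs:vm-100-disk-0` are hypothetical; take the real values from `qm config`.

```shell
# The drive string is replaced as a whole, so repeat every option you want
# to keep (discard, iothread, ssd, ...) alongside the new cache mode.
qm set 100 --scsi0 local-zfs:vm-100-disk-0,cache=directsync,discard=on,iothread=1,ssd=1

# Confirm the resulting drive configuration:
qm config 100 | grep '^scsi0'
```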

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
No. Both servers are configured identically: 2x 1.92 TB drives (mirrored, "RAID 1") for the operating system, and another 2x 1.92 TB drives (mirrored, "RAID 1") for the VMs.

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
- why identical tests on identical hardware are producing significantly different results;
- why the Hyper-V benchmarks seem to align more closely with the manufacturer's published performance (it might simply be coincidence);
- why Hyper-V...

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
@spirit I'm available to run any additional tests you'd like. These systems are up solely for testing, and I can rebuild them as needed.

-

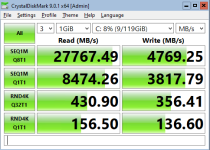

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
I believe @spirit has nailed the issue of RND4K Q32T1 performance: Windows guest on Proxmox: during the RND4K Q32T1 test, CPU went to 12% (100% of one core on an 8-vCPU guest). Windows guest on Hyper-V Server: during the RND4K Q32T1 test, CPU...
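The 12% reading is what one saturated vCPU looks like in the guest's overall CPU figure, since Windows averages total CPU usage across all logical processors:

$$
\frac{100\%\ \text{(one busy vCPU)}}{8\ \text{vCPUs}} = 12.5\% \approx 12\%
$$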

-

fdcastel replied to the thread Feature request: VM configuration toggle for 4k block device sector size.
Thank you, @spirit, for the valuable insights. I'm doing some tests now, but I believe you've nailed the issue. Let's continue on https://forum.proxmox.com/threads/proxmox-x-hyper-v-storage-performance.177355/ I'll post the results there.

-

fdcastel replied to the thread Feature request: VM configuration toggle for 4k block device sector size.
I'm embarrassed to admit that I just realized I've hijacked this thread. I've opened a new one here: https://forum.proxmox.com/threads/proxmox-x-hyper-v-storage-performance.177355/

-

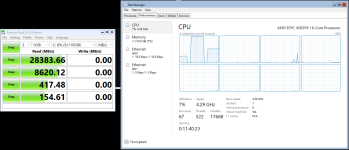

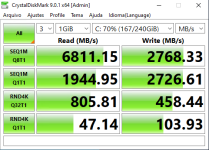

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
At first glance, Proxmox appears to offer substantial improvements over the old setup, with a few important observations: 1) According to Samsung's official specifications, this model is rated for 6800 MB/s sequential read and 2700 MB/s...

-

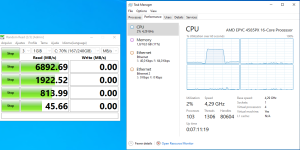

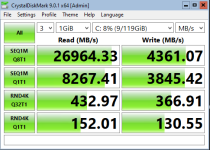

fdcastel replied to the thread Proxmox x Hyper-V storage performance.
For reference, the existing Hyper-V Server deployment (on identical hardware) yields the following results: `C:\> fsutil fsinfo sectorInfo C:` LogicalBytesPerSector : 512 PhysicalBytesPerSectorForAtomicity ...

-

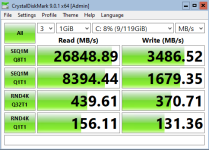

fdcastel posted the thread Proxmox x Hyper-V storage performance. in Proxmox VE: Installation and configuration.
I'm evaluating Proxmox for potential use in a professional environment to host Windows VMs. The current production setup runs on Microsoft Hyper-V Server. Results follow: 1) Using `--scsi0 "$VM_STORAGE:$VM_DISKSIZE,discard=on,iothread=1,ssd=1"`...
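For context, a sketch of how a `--scsi0` option like the one above might sit in a full `qm create` call. Every value other than the `--scsi0` string (VMID, name, cores, memory, storage ID, size) is a hypothetical placeholder, and a real Windows VM would also need installation media and the VirtIO drivers.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical placeholders; adjust to your environment.
VM_ID=100
VM_STORAGE=local-zfs
VM_DISKSIZE=64        # size of the new disk, in GiB

# virtio-scsi-single gives each SCSI disk its own controller, which is what
# allows iothread=1 to run that disk's I/O in a dedicated IOThread.
qm create "$VM_ID" \
  --name win-storage-test \
  --cores 8 \
  --memory 16384 \
  --scsihw virtio-scsi-single \
  --scsi0 "$VM_STORAGE:$VM_DISKSIZE,discard=on,iothread=1,ssd=1"
```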

-

fdcastel replied to the thread Feature request: VM configuration toggle for 4k block device sector size.
Exactly. The results in Post #3 come from running CrystalDiskMark on guest VMs hosted on Proxmox 9.1.1. This was done on a test system with no other load. The results in Post #5 come from running CrystalDiskMark on guest VMs hosted on Hyper-V Server...