ertanerbek's latest activity

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. Disable the fstrim service on Proxmox and do not select the discard option in the virtual machines.
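To check which virtual disks currently have the discard option selected, something like the following sketch could help. It assumes the usual Proxmox VM config layout, where each disk is a line such as `scsi0: <storage>:<volume>,discard=on,...`. The function name `disks_with_discard`, the storage name `san-lvm`, and the sample config are hypothetical and only serve as an illustration.

```python
import re

# Disk keys used in a VM config (e.g. scsi0, virtio1, sata2, ide0).
DISK_KEY = re.compile(r"^(scsi|virtio|sata|ide)\d+$")


def disks_with_discard(config_text):
    """Return the disk keys whose option list contains discard=on."""
    flagged = []
    for line in config_text.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or not DISK_KEY.match(key):
            continue
        # Options follow the volume name, separated by commas.
        options = [opt.strip() for opt in value.split(",")[1:]]
        if "discard=on" in options:
            flagged.append(key)
    return flagged


# Hypothetical sample config; "san-lvm" is an assumed storage name.
SAMPLE = """\
cores: 4
scsi0: san-lvm:vm-100-disk-0,discard=on,size=32G,ssd=1
scsi1: san-lvm:vm-100-disk-1,size=100G
"""

print(disks_with_discard(SAMPLE))
```

Any disk the function reports would be a candidate for having discard switched off, per the advice above.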

-

ertanerbek replied to the thread Win 10 VDI w/GPU Passthru - Desktop Lag/Jitter Troubleshooting. Thank you for your feedback. After two years the problem continues, but it seems OK with the intel-hda driver.

-

ertanerbek reacted to garlicknots's post in the thread Win 10 VDI w/GPU Passthru - Desktop Lag/Jitter Troubleshooting with Like.

Somehow I managed to get rid of ich9 PCIe host ports as well on one of my machines. Can't successfully recreate and don't know if it's worth doing but thought it was kind of interesting. I've almost completely eliminated the stutter, but it's not...

-

ertanerbek reacted to bbgeek17's post in the thread [TUTORIAL] Understanding QCOW2 Risks with QEMU cache=none in Proxmox with Like.

Hi @Kurgan, Based on a quick review, LVM-thin appears to offer better durability characteristics than QCOW2 for a few reasons: LVM-thin stores its metadata in a B-tree and applies updates transactionally (though not via a traditional journal)...

-

Thank you for providing additional information. We will review and digest. We do not use either LVM or QCOW in our integration with PVE, so we have limited exposure with these technologies in some of our legacy customer environments. Our most...

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. The exact cause of this problem is the discard operation. Scenario 1: The guest resides on any source disk structure. When I try to clone this guest into an LVM setup in qcow format, whether the SSD and DISCARD feature on the disk are enabled or...

-

Hi @ertanerbek, we’re mostly on the same page: Fibre Channel is far from dead in large enterprise environments. That said, investing in legacy entry SANs (for example an HPE MSA or older Dell ME models), or even trying to repurpose them, purely...

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. Yes, you are right. For this reason, instead of LVM, perhaps OCFS2 or GFS2 (since it is integrated with Corosync) could be better options that may be supported in the future.

-

The lock is managed by Proxmox code directly (through pmxcfs/corosync). You can't delete two LVM volumes at the same time.

-

To be honest, I’m not sure whether your issue is specifically caused by the fact that you're using FC. But what I can say is that, on my side, with iSCSI, I don’t have any locking problems at all.

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. Hello @tiboo86, thank you very much for your feedback and for sharing this in such detail. I hope both I and many others will benefit from it. However, this is an IP SAN system, and the issue I am experiencing is on the FC SAN side. Let’s...

-

Hi @ertanerbek, No problem. Here is our full setup in detail. We are running a three-node Proxmox cluster, and each node has two dedicated network interfaces for iSCSI. These two NICs are configured as separate iSCSI interfaces using...

-

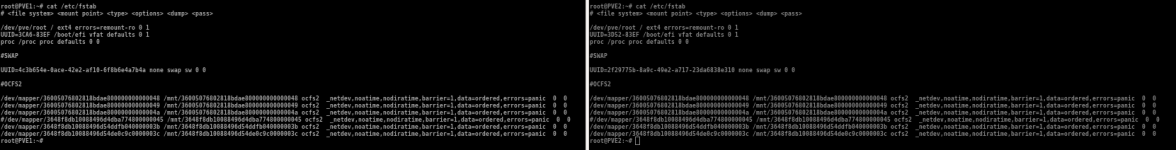

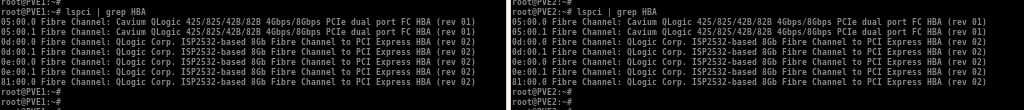

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. I am sharing my multipath configuration, multipath output, and storage file. I’m also using OCFS2, and it’s almost perfect. In fact, OCFS2 itself is excellent, but Proxmox forces me to use its own lock mechanism. At the operating system...

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. Hi Tibo, If possible, could you share everything? If you have a successful implementation, it could also help others who face issues in the future. By the way, why did you have to tweak the queue-depth and kernel parameters? Do we really need...

-

Hi @ertanerbek, I’m running a three-node Proxmox VE 9.1.1 cluster connected to a Huawei Dorado 5000, but using iSCSI + Linux Multipath + LVM (shared). In my setup, I haven’t encountered any problems during simultaneous “Move Storage”...

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. RDMA, TCP Offload, RoCE, NVMe-oF, NVMe-oF + RDMA, and SR-IOV (for Ethernet) are all excellent technologies with many benefits. They reduce CPU load and lower access times. However, no matter what they achieve, the real issue is not the connection...

-

Well, different approaches are being worked on, such as NVMe-oF, which tries to reduce latency

-

Each disk is block storage; what I mean is the direct usage of block storage from the application side. We will see in 10 years.

-

Yes, true. I assume ~80% of VMware customers are also riding this dead horse with VMFS/VMDK :) I mean, a valid approach would still be for Proxmox as a company to hire/pay some core/veteran developers of OCFS2 or a 3rd party to integrate it...

-

ertanerbek replied to the thread Proxmox 9.1.1 FC Storage via LVM. Most of my 25-year professional career has been spent working with storage devices. A large portion of that involved projects at the government level. I can confidently say that the SAN storage architecture cannot simply disappear. Even today...