damo2929's latest activity


damo2929 replied to the thread How to enable 1G hugepages?: You need to use either 2MB pages or 1GB pages, not mix them like you're trying to do. Huge pages will only be used on VMs you have set to use huge pages; otherwise they will use standard 4k pages. Huge page allocation also needs to take into account NUMA...
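The page-count arithmetic behind that reply can be sketched in Python. This is a minimal sketch, not Proxmox's own logic. The helper names (`hugepages_needed`, `per_numa_node`), the 16 GiB example VM, and the even split of pages across NUMA nodes are hypothetical assumptions for illustration. It shows that a VM is backed by a single page size, and that the reserved pages have to be available on the NUMA nodes the VM actually uses.

```python
# Hypothetical helpers: how many hugepages of ONE size a VM's RAM needs,
# and how those pages might be split across host NUMA nodes.

# Supported page sizes in MiB (one size per VM, never a mix).
PAGE_SIZES_MIB = {"2M": 2, "1G": 1024}


def hugepages_needed(vm_mem_mib: int, page_size: str) -> int:
    """Pages of the given size required to back vm_mem_mib of guest RAM."""
    size = PAGE_SIZES_MIB[page_size]
    # Round up: a partially used page still has to be reserved whole.
    return -(-vm_mem_mib // size)


def per_numa_node(total_pages: int, numa_nodes: int) -> list[int]:
    """Assumption: guest memory is spread evenly over the host NUMA nodes."""
    base, extra = divmod(total_pages, numa_nodes)
    # Earlier nodes absorb any remainder, one page each.
    return [base + (1 if i < extra else 0) for i in range(numa_nodes)]


if __name__ == "__main__":
    pages_1g = hugepages_needed(16384, "1G")  # 16 GiB VM on 1GB pages
    pages_2m = hugepages_needed(16384, "2M")  # same VM on 2MB pages
    print(pages_1g, per_numa_node(pages_1g, 2))
    print(pages_2m, per_numa_node(pages_2m, 2))
```

For a 16 GiB VM on a two-node host this works out to 16 pages of 1GB (8 per node) or 8192 pages of 2MB (4096 per node); reserving the full count on only one node would leave the other node's share unbacked.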


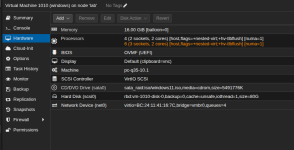

damo2929 posted the thread Issues with hot plug processors in Proxmox VE: Installation and configuration. Hi guys, search didn't bring me a result for this, but I'm having issues with RAM and CPU hot plug in version 9.1.4. The Proxmox UI doesn't apply them; despite hot plug being enabled, it doesn't appear to work on any VM regardless of guest OS. Can...