bbgeek17's latest activity

bbgeek17 replied to the thread Need to backup broken Proxmox without ethernet. The backup tries to start the VM in suspended state so that the disks can be properly activated. Given your installation is already borked, you can remove the network card from your VM config to bypass this error, if you wanted to get the last...

bbgeek17 replied to the thread Custom Cloud Init Snippet - Not working. You can program the cloudinit file to write and execute a script that would detect the interface name and create the correct network file in place, instead of relying on CI for that part of the config. You can create/modify your template to not...

bbgeek17 replied to the thread Need to backup broken Proxmox without ethernet. If you are already planning to use a different installation disk, what stops you from connecting the disk that contains the VM data now as secondary, and then copying/importing it? How to do that depends on your storage configuration. It may be...

bbgeek17 replied to the thread Custom Cloud Init Snippet - Not working. Here is the documentation: https://cloudinit.readthedocs.io/en/latest/reference/network-config-format-v1.html#mac-address-mac-address This may be the answer you are looking for: Specifying a MAC Address is optional. At least one of these...

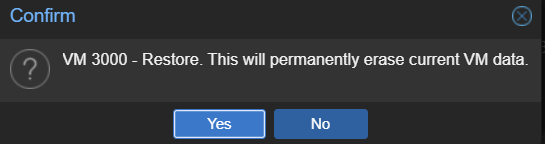

bbgeek17 replied to the thread Restoring from backup removes existing snapshots. I understand that you've been burned and can see where you are coming from. That said, user education through pop-up warnings tends to be ignored most of the time. There are many variables at play: automated scheduled backups, usage of CLI or...

bbgeek17 replied to the thread Restoring from backup removes existing snapshots. Hi @shapez0r, I am getting: Have you not received this alert? Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox

bbgeek17 replied to the thread iSCSI under performance. I could be wrong, it's been a while since I had to deal with these. You are correct that there is a link for cache sync and other HA traffic. I just don't recall it being used for the user data path.

bbgeek17 replied to the thread iSCSI under performance. You are correct that it’s a bad idea. However, there is no internal data path between the controllers. The disks are dual-ported and connected to both storage processors simultaneously, but only one processor can own and handle I/O at a time. If...

bbgeek17 replied to the thread PVE installation. Hi @zync, welcome to the forum. A good test to try is to install vanilla Debian. Do you have a similar challenge there?

bbgeek17 replied to the thread Adding storage to Datacenter. Hi @heytmi, welcome to the forum. Your question is more related to the PVE part of the forum than PDM; I recommend opening a thread there. Do include as much relevant technical information as possible. For example: lsblk, fdisk -l, pvs, vgs, lvs, pvesm...

bbgeek17 replied to the thread Proxmox with 48 nodes. Hi @Nathan Stratton and all, You need clear guidance here: do not do that unless you have a very compelling reason to. a) Your hardware is discontinued and past the end of service, which significantly increases the likelihood of component...

bbgeek17 replied to the thread Bulk migration of servers from VMWare to Proxmox. The PVE-endorsed methods are described here: https://www.proxmox.com/en/services/training-courses/videos/proxmox-virtual-environment/proxmox-ve-import-wizard-for-vmware https://pve.proxmox.com/wiki/Migrate_to_Proxmox_VE...

bbgeek17 replied to the thread Bulk migration of servers from VMWare to Proxmox. Hi @imsuyog, welcome to the forum. With any migration path, you will need to invest some time and effort into testing how appropriate it is for your data. The migration will most likely involve data transfer, probably over an API, and may...

bbgeek17 replied to the thread cloud-init brain dump. Did you check the CI log after boot? Have you monitored the console output during the boot? (qm start xxx && qm monitor xxx). You presented your sanitized CI config but did not present the actual VM config. Keep in mind that according to ...

bbgeek17 replied to the thread [SOLVED] open-iscsi-service failed to start after update to Proxmox 9.0.10 (6.14.11-3-pve). Did you only have VMs active on node1 when you restarted the nodes last time?

bbgeek17 reacted to leesteken's post in the thread Could we have a 'lab' subscription license? with Like.

Turns out that Proxmox explicitly does not want donations: https://forum.proxmox.com/threads/donation-to-proxmox-how-to-apologies-if-this-create-noise.173232/post-806352 . There have been threads before about additional (cheaper) subscription...

bbgeek17 replied to the thread Curl not working on new install. Maybe your ISP is running OpenWrt on a Pi... Dust off tcpdump and start capturing and comparing traces :)

bbgeek17 replied to the thread Curl not working on new install. This is a good read: https://forum.proxmox.com/threads/temporary-failure-in-name-resolution-help-needed.164020/ I think the summary is: DNS resolution failure that affected only PVE and only when used with internal router. Caused by new version...

bbgeek17 replied to the thread Curl not working on new install. Are there VLANs involved? Have you tested the maximum MTU size between the internal hosts on the switch? Does your switch implement some sort of "smart" filtering? The best approach is to keep reducing the number of variables and complexity of the test...
