I’d like to chronicle my PVE 8-to-9 upgrade experience, which due to the holidays only began yesterday. Maybe others will gain some insight from this, or be able to advise me on how to correct things or do better.

Firstly, let me start by thanking all members of this forum, for their continuous invaluable input, but mostly I’d like to thank the absolutely awesome Proxmox team, for their great product & their timely care & attention to detail.

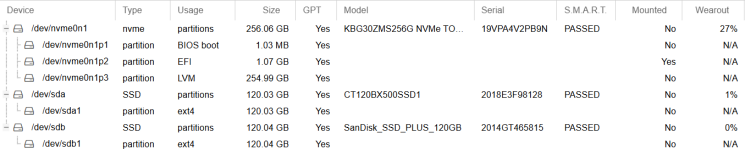

Let me start off by saying that I started the upgrade on a single home server (non-cluster) so as to get my feet wet! It runs on a plain LVM (non-ZFS) setup on NVMe, plus a single ext4-formatted SSD configured as Directory storage in PVE – fully updated on the no-subscription repository.

I then powered down the server & took a dd image of the main host drive. I always do this before any major update, for as you know PVE has no built-in full host backup, and one should be mandatory for such an upgrade.

I then opened the wiki (https://pve.proxmox.com/wiki/Upgrade_from_8_to_9) in a browser.
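A minimal sketch of that kind of dd imaging, for anyone who wants to do the same. The post does not give the device name or the backup location, so the paths in the comments are assumptions, and image_drive is just an illustrative helper. The drive should not be in use while it is imaged (e.g. boot from live media first).

```shell
# Sketch: image a whole drive with dd and verify the copy.
# image_drive is a hypothetical helper; device and path names are assumptions.
image_drive() {
    src=$1   # e.g. /dev/nvme0n1 (assumed device name)
    dst=$2   # e.g. /mnt/backup/pve-host.img (assumed path)
    # bs=4M speeds up the copy; conv=fsync flushes the data before dd exits
    dd if="$src" of="$dst" bs=4M conv=fsync status=none || return 1
    # Byte-for-byte comparison of source and image
    cmp -s "$src" "$dst"
}

# Harmless demonstration on a scratch file instead of a real disk
tmp=$(mktemp -d)
head -c 1048576 /dev/urandom > "$tmp/fake-disk"
image_drive "$tmp/fake-disk" "$tmp/fake-disk.img" && echo "image verified"
```

Restoring is the same dd call with source and destination swapped, which is why it pays to double-check both arguments before pressing Enter.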

Running pve8to9 --full produced 3 warnings & no failures:

WARN: 1 running guest(s) detected - consider migrating or stopping them.

– I stopped this VM.

WARN: Deprecated config '/etc/sysctl.conf' contains settings - move them to a dedicated file in '/etc/sysctl.d/'.

– These settings are for IPv4/6 forwarding (used in an LXC), so I decided to keep this file as a backup for later use and issued: mv /etc/sysctl.conf /etc/sysctl.conf.bak (this may have been a bad choice – see below on the LXC issue).

WARN: The matching CPU microcode package 'intel-microcode' could not be found! Consider installing it to receive the latest security and bug fixes for your CPU. Ensure you enable the 'non-free-firmware' component in the apt sources and run: apt install intel-microcode

– I chose to ignore this; I’ve never bothered with microcode if it wasn’t necessary (at home).
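Regarding the sysctl.conf warning above: what the checker asks for is moving the settings into a drop-in file rather than just renaming the old one. A rough sketch of that, run here on scratch files. The two forwarding keys are the usual kernel names but are an assumption (the post does not show the file's contents), and both migrate_sysctl and the file name 99-forwarding.conf are purely illustrative.

```shell
# Sketch: carry the active settings from a legacy sysctl.conf into a drop-in.
# migrate_sysctl and 99-forwarding.conf are hypothetical names.
migrate_sysctl() {
    legacy=$1    # e.g. /etc/sysctl.conf
    dropin=$2    # e.g. /etc/sysctl.d/99-forwarding.conf (assumed name)
    # Keep only real settings: drop comment lines (# or ;) and blank lines
    grep -Ev '^[[:space:]]*([#;]|$)' "$legacy" > "$dropin"
}

# Demonstration on scratch files (the keys below are assumed, not from the post)
work=$(mktemp -d)
printf '%s\n' '# legacy file' '' 'net.ipv4.ip_forward=1' \
    'net.ipv6.conf.all.forwarding=1' > "$work/sysctl.conf"
migrate_sysctl "$work/sysctl.conf" "$work/99-forwarding.conf"
cat "$work/99-forwarding.conf"
# On a real host the drop-in would then be loaded with: sysctl --system
```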

I then changed the apt sources over to Trixie etc., working for the first time with the new deb822 format. (I actually like it – instead of those 1 liners!) As sources on a system that is a number of years old get rather convoluted & generally messy, I did some overdue housekeeping here. Checked apt update & apt policy – all good, eventually.

Then in my SSH session I opened a tmux terminal (in case I lose the connection during the upgrade – I do this by habit with any potentially lengthy command that could go south midway!), & began the apt dist-upgrade, which chugged along nicely.

I was prompted to choose between keeping the current version or installing the newer version of the following files:

/etc/chrony/chrony.conf

/etc/nut/nut.conf

/etc/nut/upsmon.conf

/etc/nut/ups.conf

/etc/smartd.conf

/etc/nut/upsd.conf

/etc/nut/upsd.users

/etc/lvm/lvm.conf (never made changes – but I’m guessing Proxmox may have, see the above wiki).

I chose Yes for all of the above (get the newer version), as I have copies of all those changes & can easily recreate them – as I eventually did.
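For readers who have not met the deb822 format mentioned above, here is roughly what one stanza looks like. The post does not show the actual source files, so this is only a sketch modelled on the no-subscription entry in the upgrade wiki. Treat every value as an assumption to check against the wiki, and note that it is written to a scratch file rather than to /etc/apt/sources.list.d/.

```shell
# Sketch: write a deb822-style source stanza to a scratch file for inspection.
# On a real host such a file lives under /etc/apt/sources.list.d/ with a
# .sources extension; the values are assumptions, not taken from the post.
out=$(mktemp)
cat > "$out" <<'EOF'
Types: deb
URIs: http://download.proxmox.com/debian/pve
Suites: trixie
Components: pve-no-subscription
Signed-By: /usr/share/keyrings/proxmox-archive-keyring.gpg
EOF
cat "$out"
```

Each field that used to be squeezed into a one-line entry gets its own labelled line, which is what makes the housekeeping easier.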

I then again ran

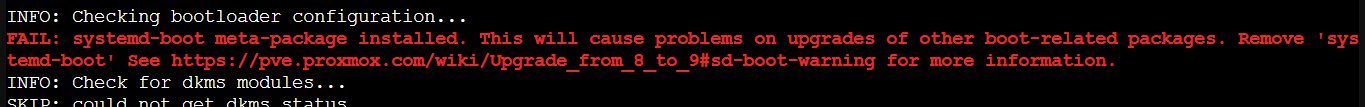

pve8to9 –full & received this:FAIL: systemd-boot meta-package installed this will cause issues on upgrades of boot-related packages. Install 'systemd-boot-efi' and 'systemd-boot-tools' explicitly and remove 'systemd-boot'I researched this a little & then did (hopefully the right choice, but probably not?):

apt remove systemd-boot & checking that the other 2 packages are installed (they were).Then came the moment of truth! Yes the dreaded reboot was issued & yup it failed – booted into BIOS! At that moment I kicked myself—dreading a complete reimaging of the host drive (

dd above).I then decided that if I will anyway have to eventually reimage the host drive, I may as well get the PVE9 Iso & try installing to get a feel of the land. After booting the server with the PVE9 Iso – another thought struck me of trying the

rescue-mode in Advanced options – to see if I could coax some life into my server. Surprise, surprise it fully booted including the Home Assistant VM set to run at boot!I then ran:

Code:

umount /boot/efi

proxmox-boot-tool reinit

Removed the ISO, rebooted & yes – SUCCESS! So I finally have a running PVE9 node at home.
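In hindsight, a few checks before that first reboot might have saved some sweat. The sketch below only looks at the two packages named in the FAIL message and asks proxmox-boot-tool for its status. It does not establish why the reboot failed in my case, and have_pkg / check_boot_setup are hypothetical helper names.

```shell
# Sketch: pre-reboot sanity checks after removing the systemd-boot meta-package.
# have_pkg and check_boot_setup are hypothetical helpers, not Proxmox tools.
have_pkg() {
    # dpkg-query reports "install ok installed" for a fully installed package
    dpkg-query -W -f='${Status}' "$1" 2>/dev/null | grep -q 'ok installed'
}

check_boot_setup() {
    for pkg in systemd-boot-efi systemd-boot-tools; do
        if have_pkg "$pkg"; then
            echo "$pkg: installed"
        else
            echo "$pkg: MISSING"
        fi
    done
    # Show the ESPs that proxmox-boot-tool manages, if the tool is available
    if command -v proxmox-boot-tool >/dev/null 2>&1; then
        proxmox-boot-tool status || echo "status failed (needs root?)"
    else
        echo "proxmox-boot-tool not found (not a PVE host?)"
    fi
}

check_boot_setup
```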

I then proceeded to change the settings in the above mentioned files as required (chrony, nut etc.).

All went successfully except for CUPS which absolutely flooded the journal/system log with:

Code:

kernel: audit: type=1400 audit(1755543458.357:3138): apparmor="DENIED" operation="create" class="net" info="failed protocol match" error=-13 profile="/usr/sbin/cupsd" pid=17322 comm="cupsd" family="unix" sock_type="stream" protocol=0 requested="create" denied="create" addr=none

With a pointer from the above wiki, & a lot of messing around, I eventually edited /etc/apparmor.d/usr.sbin.cupsd & /etc/apparmor.d/usr.sbin.cups-browsed, adding the line abi <abi/3.0>, at the beginning of each (the trailing comma is part of the line), & then issued apparmor_parser -r for both profiles.

This seems to have quieted down the logs, although they apparently remain noisy if a print job is sent while the printer is turned off. I’ll have to look into that sometime.
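The profile edit described above could be scripted along these lines. This is only a sketch: add_abi is a made-up helper, it works on a scratch copy here, and it simply puts the abi line first in the file, which is what I did by hand.

```shell
# Sketch: prepend an ABI declaration to an AppArmor profile unless one exists.
# add_abi is a hypothetical helper name.
add_abi() {
    profile=$1
    # Leave the file alone if it already declares an ABI
    if grep -Eq '^[[:space:]]*abi[[:space:]]' "$profile"; then
        return 0
    fi
    tmpf=$(mktemp)
    # Write back through the original path so ownership and mode are kept
    { echo 'abi <abi/3.0>,'; cat "$profile"; } > "$tmpf" && cat "$tmpf" > "$profile"
    rm -f "$tmpf"
}

# Demonstration on a scratch file rather than /etc/apparmor.d/usr.sbin.cupsd
demo=$(mktemp)
printf '%s\n' '#include <tunables/global>' '/usr/sbin/cupsd {' '}' > "$demo"
add_abi "$demo"
add_abi "$demo"   # the second call changes nothing
head -n 1 "$demo"
# On the host, reload afterwards with: apparmor_parser -r <profile>
```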

I tested all my VMs & LXCs running & all seems well. I then performed backups on all as a test & also (as a test) restored a Debian server Cloud-init template (VM) & did a full clone which worked flawlessly (I was pleasantly slightly surprised!).

The only exception is a Tailscale LXC (on Alpine) that I have, which booted fine but would not work as a Subnet router / accept-routes (used to access other clients on the network, see https://tailscale.com/kb/1019/subnets ). This is probably a Debian Trixie issue with the sysctl.conf being removed. I then tried cp sysctl.conf.bak sysctl.d/99-sysctl.conf & a lot of other messing, and even though it appears that IPv4/6 forwarding is active on the host, it still would not work.

I subsequently spun up a fresh Debian 12 server VM (from the above cloud-init template VM) & installed Tailscale on it & it worked first time. I usually prefer LXCs for these lighter tasks (my original Alpine LXC for Tailscale, which has worked for years, backs up to about 20MB!) but I’ll have to make do for the present.
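For what it’s worth, this is the sort of check behind "it appears that forwarding is active". It is a small sketch that just reads the kernel’s own view from /proc, and can be run on the host as well as inside the container. It only confirms the kernel setting and does not diagnose the Tailscale routing problem itself; show_forwarding is a hypothetical helper name.

```shell
# Sketch: report whether IP forwarding is enabled, reading /proc directly.
# show_forwarding is a hypothetical helper name.
show_forwarding() {
    for f in /proc/sys/net/ipv4/ip_forward /proc/sys/net/ipv6/conf/all/forwarding; do
        if [ -r "$f" ]; then
            # 1 means forwarding is on, 0 means it is off
            echo "$f = $(cat "$f")"
        else
            echo "$f not readable"
        fi
    done
}

show_forwarding
```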

In general, I find PVE9 snappier in the GUI than PVE8, with a faster boot, and I can confirm that the package/core temps of the host are lower, which is mirrored by an average power draw of 18 W vs 20 W before (as monitored from Home Assistant).

So again kudos to the awesome team – for this magnificent (not uneventful) ride.

On a sidenote: when running:

Code:

~# apt autoremove --dry-run

REMOVING:

libabsl20220623 libpython3.11 mokutil

libavif15 libqpdf29 proxmox-kernel-6.8.12-12-pve-signed

libdav1d6 librav1e0 shim-helpers-amd64-signed

libpaper1 libsvtav1enc1 shim-signed

libpoppler-cpp0v5 libutempter0 shim-signed-common

libpoppler126 libx265-199 shim-unsigned

Summary:

Upgrading: 0, Installing: 0, Removing: 18, Not Upgrading: 0

Remv libabsl20220623 [20220623.1-1+deb12u2]

Remv libavif15 [0.11.1-1+deb12u1]

Remv libdav1d6 [1.0.0-2+deb12u1]

Remv libpaper1 [1.1.29]

Remv libpoppler-cpp0v5 [22.12.0-2+deb12u1]

Remv libpoppler126 [22.12.0-2+deb12u1]

Remv libpython3.11 [3.11.2-6+deb12u6]

Remv libqpdf29 [11.3.0-1+deb12u1]

Remv librav1e0 [0.5.1-6]

Remv libsvtav1enc1 [1.4.1+dfsg-1]

Remv libutempter0 [1.2.1-4]

Remv libx265-199 [3.5-2+b1]

Remv shim-signed [1.47+pmx1+15.8-1+pmx1]

Remv shim-signed-common [1.47+pmx1+15.8-1+pmx1]

Remv mokutil [0.7.2-1]

Remv proxmox-kernel-6.8.12-12-pve-signed [6.8.12-12]

Remv shim-helpers-amd64-signed [1+15.8+1+pmx1]

Remv shim-unsigned [15.8-1+pmx1]

I’m not sure why the upgrade process does not do this automatically, & also remove all kernels older than 6.14.x, which as far as I know cannot be run on PVE9 anyway. Maybe there is a way of reverting back to PVE8 (with a pve9to8 script)!
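If you only want the package names out of a simulated autoremove (say, to spot old kernels), something like this sketch would do. list_removals is a hypothetical helper that reads the simulation output on stdin; the sample lines are taken from the output above.

```shell
# Sketch: list the packages a simulated autoremove would remove.
# list_removals is a hypothetical helper; it reads simulation output on stdin.
list_removals() {
    # Simulation lines look like: Remv <package> [<version>]
    awk '$1 == "Remv" { print $2 }'
}

# Demonstration with three lines from the dry-run output
printf '%s\n' \
    'Remv mokutil [0.7.2-1]' \
    'Remv proxmox-kernel-6.8.12-12-pve-signed [6.8.12-12]' \
    'Remv shim-unsigned [15.8-1+pmx1]' | list_removals
# On a real host: apt-get -s autoremove | list_removals
```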
