ENVIRONMENT: We have a 20 MB/s network connection on our private cloud in the datacenter. The servers are Supermicro machines connected to a Mellanox ToR switch through Mellanox cards (Ethernet). Each server has both onboard NIC ports (2 x 10 Gbps) and Mellanox card ports (2 x 50 Gbps). Server-to-server data transfer meets the expected speeds (test results can be provided if required). We have installed Proxmox VE on bare metal.

Our operating needs are VMs (both Linux & Windows) for running applications and also databases. Some of these VMs are connected and communicate with each other.

For testing we have created and shall have three operating environments

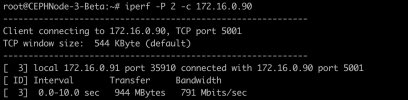

We have also test Ceph Cluster created. (This is Ceph on Ubuntu). It consists of Ceph Master and three Ceph nodes for Pilot.

ISSUES;

When we try to download anything within proxmox server or any VM's, the download speed is around 1.5MB/s only. Could you please help us in enhancing the download speed? There is a latency when we execute commands between VMs.

Question 1: Will it help to improve the performance if we do the following?

Enable DPDK on Ubuntu

Enable Open v switch and communicate directly with the hardware

Enable SR IOV

Question 1A: If yes then what are the points that we need to keep in mind while configuration and the change in the settings that need to be made in the firmware / Proxmox VE on Debian and in Ubuntu running the VMs.

Question 2: How should we setup the NIC cards interfaces to get optimum configuration and throughput in line with the hardware speeds listed above under environment?

Question 3: We propose to use NVME for production Environment. What is the best practice when we add them to the PROXMOX Server and the configuration

changes that we need to keep in mind while doing so.

Thanks for all the help as we are new to PROXMOX and need the august community to provide guidance

Our operating needs are VMs (both Linux & Windows) for running applications and also databases. Some of these VMs are connected and communicate with each other.

For testing, we have created, and will continue to have, three operating environments:

- Unit testing: we call this staging.

- Pre-production: we call this beta.

- Production: the live environment.

We have also created a test Ceph cluster (Ceph on Ubuntu). It consists of a Ceph master and three Ceph nodes for the pilot.

ISSUES:

When we download anything on the Proxmox server or inside any of the VMs, the download speed is only around 1.5 MB/s. Could you please help us improve the download speed? There is also noticeable latency when we execute commands between VMs.
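To make the 1.5 MB/s figure reproducible, here is a minimal sketch of how the download rate could be measured and compared with the 20 MB/s link quoted under ENVIRONMENT. It is only an illustration: the function names are our own, 1 MB is taken as 10^6 bytes, and the URL passed to `measure_download` would be whatever test file is being fetched (none is assumed here).

```python
import time
import urllib.request

LINK_MB_PER_S = 20.0  # link speed quoted under ENVIRONMENT (assumed to be MB/s)


def throughput_mb_per_s(num_bytes: int, seconds: float) -> float:
    """Convert a byte count and an elapsed time into MB/s (1 MB = 10**6 bytes)."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return num_bytes / 1_000_000 / seconds


def link_utilisation(measured_mb_per_s: float,
                     link_mb_per_s: float = LINK_MB_PER_S) -> float:
    """Fraction of the link speed that the measured rate represents."""
    return measured_mb_per_s / link_mb_per_s


def measure_download(url: str, chunk_size: int = 1 << 20) -> float:
    """Download `url`, discard the data, and return the average rate in MB/s."""
    start = time.monotonic()
    total = 0
    with urllib.request.urlopen(url, timeout=30) as response:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:  # empty read means the download is complete
                break
            total += len(chunk)
    return throughput_mb_per_s(total, time.monotonic() - start)
```

Calling `measure_download(...)` on the Proxmox host and then inside a VM with the same URL would show whether the slowdown is already present on the host or only appears in the guests. With the figures above, `link_utilisation(1.5)` comes out at roughly 7.5% of the link.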

Question 1: Will it improve performance if we do the following?

- Enable DPDK on Ubuntu

- Enable Open vSwitch and communicate directly with the hardware

- Enable SR-IOV

Question 1A: If yes, what points do we need to keep in mind during configuration, and what settings need to be changed in the firmware, in Proxmox VE (on Debian), and in Ubuntu running in the VMs?
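As background for the SR-IOV part of this question, here is a small sketch of how we could check on the Proxmox host whether the kernel currently exposes SR-IOV for a given interface. It reads the standard Linux sysfs attributes `sriov_totalvfs` and `sriov_numvfs`; the function name is our own, and any interface name passed in is a placeholder rather than one of our actual ports.

```python
from pathlib import Path


def sriov_vf_counts(iface: str, sysfs_root: str = "/sys/class/net"):
    """Return (total_vfs, active_vfs) for `iface`, or None if SR-IOV is not exposed.

    total_vfs  = maximum number of virtual functions the device advertises
    active_vfs = number of virtual functions currently enabled
    """
    device = Path(sysfs_root) / iface / "device"
    total_file = device / "sriov_totalvfs"
    active_file = device / "sriov_numvfs"
    if not total_file.is_file() or not active_file.is_file():
        return None  # attributes absent: the kernel exposes no SR-IOV capability here
    total = int(total_file.read_text().strip())
    active = int(active_file.read_text().strip())
    return total, active
```

A result of `None` only means the capability is not visible to the kernel at the moment. As far as we understand, that can also happen when SR-IOV is still disabled in the BIOS or in the card firmware, so it does not by itself rule the hardware out.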

Question 2: How should we set up the NIC interfaces to get an optimal configuration and throughput in line with the hardware speeds listed under ENVIRONMENT above?
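To put "in line with the hardware speeds" into numbers, the short calculation below converts the port speeds from ENVIRONMENT into MB/s and compares them with the 1.5 MB/s we observe. It is a rough sketch: it uses decimal units (1 Gbps = 10^9 bit/s, 1 MB = 10^6 bytes) and ignores Ethernet and TCP/IP overhead, so the real achievable figures would be somewhat lower.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a line rate in Gbps to MB/s (decimal units, no protocol overhead)."""
    bits_per_second = gbps * 1_000_000_000
    return bits_per_second / 8 / 1_000_000


# Port speeds as listed under ENVIRONMENT.
PORT_SPEEDS_GBPS = {
    "onboard NIC port": 10.0,
    "Mellanox card port": 50.0,
}

OBSERVED_MB_PER_S = 1.5  # download speed reported under ISSUES

for name, gbps in PORT_SPEEDS_GBPS.items():
    line_rate = gbps_to_mb_per_s(gbps)
    share = OBSERVED_MB_PER_S / line_rate
    print(f"{name}: {line_rate:.0f} MB/s line rate, observed rate is {share:.4%} of it")
```

A single 10 Gbps port works out to 1250 MB/s and a 50 Gbps port to 6250 MB/s, so the observed download speed is far below what any single port could carry.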

Question 3: We propose to use NVMe drives for the production environment. What is the best practice for adding them to the Proxmox server, and what configuration changes do we need to keep in mind while doing so?

Thanks for all the help. We are new to Proxmox and would appreciate guidance from the august community.