Hello all,

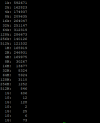

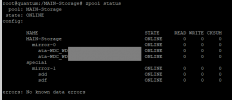

I would like to configure a special metadata device for my ZFS mirror pool (2x HDDs, 18 TB each), but I'm not entirely sure of the steps. I've found some documentation online, but I'm still hesitant because I don't want to mess things up and then have to rebuild the mirror. I do have the data backed up, but it's many terabytes and restoring it would take days :X So here I am, asking for advice from someone who has done this before or knows how to do it properly.

I purchased 2x WD Red SSDs (500 GB each), which I'd like to set up as a mirror and use as the special metadata device for my HDD mirror pool.
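
For reference, a minimal sketch of the step described above, assuming OpenZFS 0.8 or newer (where the `special` vdev class exists). The pool name `tank` and the `/dev/disk/by-id/...` paths are hypothetical placeholders. The script only builds the command and does not run it; with `-n`, `zpool add` prints the resulting layout without modifying the pool. The special vdev is mirrored because losing it means losing the pool, and only metadata written after it is added lands on it.

```python
import shlex


def special_mirror_cmd(pool: str, dev_a: str, dev_b: str, dry_run: bool = True) -> list[str]:
    """Build (but do not run) a `zpool add` command that attaches two SSDs
    as a mirrored special allocation class vdev."""
    cmd = ["zpool", "add"]
    if dry_run:
        cmd.append("-n")  # -n: display the would-be layout, change nothing
    cmd += [pool, "special", "mirror", dev_a, dev_b]
    return cmd


if __name__ == "__main__":
    # HYPOTHETICAL pool name and device paths; substitute your own.
    cmd = special_mirror_cmd("tank", "/dev/disk/by-id/ssd-A", "/dev/disk/by-id/ssd-B")
    print(shlex.join(cmd))
```

Using stable `/dev/disk/by-id/` names rather than `/dev/sdX` is a common choice so the pool still finds the devices if the kernel reorders them.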

I don't mind setting the `special_small_blocks` property to a somewhat higher value. In fact, I'd like it around 2 MB (just below the size of an average smartphone photo), but I'm not sure whether that's smart.
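
A rough sketch of how the threshold interacts with block size, under stated assumptions. Per the OpenZFS `zfsprops` documentation, data blocks smaller than or equal to `special_small_blocks` are allocated on the special vdev, and the value must be 0 or a power of two starting at 512. The 1 MiB upper limit used here is an assumption based on the man pages I know of; newer releases may differ, so check `man zfsprops` on your system. The helper names are hypothetical.

```python
KIB = 1024
MIB = 1024 * KIB

# ASSUMPTION: 1 MiB upper limit as documented in the OpenZFS zfsprops man
# page for the versions I know of; verify against your installed version.
MAX_SMALL_BLOCKS = 1 * MIB


def validate_small_blocks(value: int) -> None:
    """Raise ValueError unless value is 0 or a power of two in [512, MAX]."""
    if value == 0:
        return  # 0 disables small-block allocation on the special vdev
    is_pow2 = (value & (value - 1)) == 0
    if not is_pow2 or value < 512 or value > MAX_SMALL_BLOCKS:
        raise ValueError(f"invalid special_small_blocks: {value}")


def goes_to_special(block_size: int, small_blocks: int) -> bool:
    """A data block is placed on the special vdev if it is <= the threshold."""
    return small_blocks > 0 and block_size <= small_blocks


if __name__ == "__main__":
    for value in (64 * KIB, 1 * MIB, 2 * MIB):
        try:
            validate_small_blocks(value)
            print(f"{value // KIB}K: accepted")
        except ValueError as err:
            print(f"{value // KIB}K: {err}")

    # With the default recordsize of 128K, a 1M threshold covers every
    # full-size record, so all data blocks would land on the special vdev.
    print(goes_to_special(128 * KIB, 1 * MIB))  # True
```

The key point the sketch illustrates: the comparison is per block, not per file. A photo larger than `recordsize` is split into `recordsize`-sized blocks, so any threshold equal to or above the dataset's `recordsize` sends all of that dataset's data, not just small files, to the 500 GB SSDs.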

I'd greatly appreciate it if someone could spare a few minutes and advise on which commands to use and how to set this up.

Thanks in advance!