This seems to be a recurring problem, and I haven't found a helpful solution so far.

There are plenty of cases where you might need to mount CephFS inside your LXCs.

In my case, I need to store PostgreSQL WAL archives for PITR. Performance is not important. I have a 3-node PVE cluster.

I tested:

- Standard CephFS mount. Of course, it does not work, since the LXC cannot access the CephFS kernel driver.

- FUSE doesn't work either. It fails with `fuse: device not found, try 'modprobe fuse' first`, despite enabling the 'FUSE' feature for the LXC in the Proxmox interface.

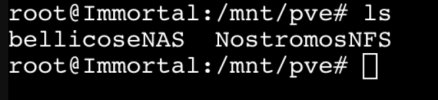

- Mount the CephFS on the PVE hosts and bind-mount it into the LXC with a command like:

```shell
pct set 105 -mp0 /mnt/pve/cephfs,mp=/mnt/cephfs
```

The CephFS is then accessible from the LXC, but neither snapshots nor LXC migrations work anymore:

```
migration aborted (duration 00:00:00): cannot migrate local bind mount point 'mp0'
```
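For reference, the bind-mount attempt above can be sketched as a small script run on a PVE node. It reuses the container ID and paths from the `pct set` command; the `DRY_RUN` switch and the `mountpoint` pre-check are my own hypothetical additions, so by default the script only prints the command instead of changing the container config.

```shell
#!/bin/sh
# Sketch: bind-mount the host's CephFS mount into container 105.
# Run on a PVE node. DRY_RUN=1 (the default here) only prints the command.
set -eu

CTID="${CTID:-105}"                        # container ID from the post
HOST_PATH="${HOST_PATH:-/mnt/pve/cephfs}"  # CephFS path on the PVE host
CT_PATH="${CT_PATH:-/mnt/cephfs}"          # target path inside the container
DRY_RUN="${DRY_RUN:-1}"                    # hypothetical switch: 1 = print only

# Mount point spec in the same form as the pct command above.
MP_SPEC="$HOST_PATH,mp=$CT_PATH"

if [ "$DRY_RUN" = "1" ]; then
    echo "pct set $CTID -mp0 $MP_SPEC"
else
    # If CephFS is not mounted on this node, the bind mount would expose the
    # local (possibly empty) directory instead, so check first.
    if ! mountpoint -q "$HOST_PATH"; then
        echo "error: $HOST_PATH is not mounted on $(hostname)" >&2
        exit 1
    fi
    pct set "$CTID" -mp0 "$MP_SPEC"
fi
```

After a real run (`DRY_RUN=0`), `pct config 105` should list the new `mp0` entry.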
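For the FUSE attempt, a quick check run inside the container may help narrow down the error. That libfuse message typically appears when `/dev/fuse` cannot be opened, so this sketch only looks at whether the device node is visible and whether the shared host kernel has the `fuse` filesystem type registered; it does not fix anything.

```shell
#!/bin/sh
# Sketch: run inside the container to investigate "fuse: device not found".

# Is the FUSE character device visible inside the container?
if [ -c /dev/fuse ]; then
    FUSE_DEV="present"
else
    FUSE_DEV="missing"
fi
echo "/dev/fuse: $FUSE_DEV"

# /proc/filesystems lists the filesystem types known to the kernel, which the
# container shares with the host; a "fuse" entry means FUSE support is loaded.
if grep -qw fuse /proc/filesystems; then
    FUSE_FS="registered"
else
    FUSE_FS="not registered"
fi
echo "fuse filesystem: $FUSE_FS"
```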

So I'm back to square one.

How can we access CephFS from an LXC while still keeping LXC snapshots and migrations working?

Or is it just not possible?

Thanks,
