Sorry, I have to correct myself.

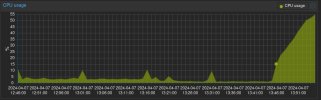

The backup of the VM happened yesterday at 22:30 and afterwards it worked fine until 04:05. Last journal entries:

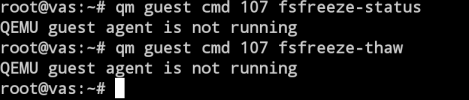

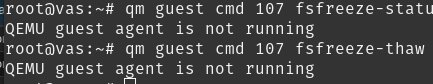

Code:

...

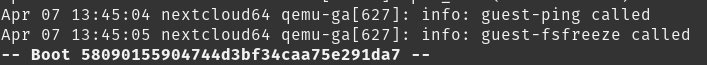

```
Mar 19 04:05:02 nextcloud64 qemu-ga[621]: info: guest-ping called
Mar 19 04:05:03 nextcloud64 qemu-ga[621]: info: guest-fsfreeze called
Mar 19 04:05:08 nextcloud64 qemu-ga[621]: info: guest-ping called
Mar 19 04:05:09 nextcloud64 qemu-ga[621]: info: guest-fsfreeze called
(vm stop & start by me)
-- Boot a6539a246aee4e9d8bd2ab2c7b1cec86 --
Mar 19 07:02:37 nextcloud64 kernel: Linux version 6.1.0-18-amd64 (debian-kernel@lists.debian.org) (gcc-12 (Debian 12.2.0-14) 12.2.0, GNU ld (GNU Binutils for Debian) 2.40) #1 SMP PREEMPT_DYNAMIC Debian 6.1.76-1 (2024-02-01)
...
```

So it seems the hanging was not caused by PBS, at least not directly.

> Is there anything special about this VM compared to other VMs? Did the same VM already fail multiple times?

It was the only VM that failed on a PBS backup. There is nothing special about it; it is just the most heavily used VM on this cluster.
